Artificial Intelligence

How might AI transform our world?

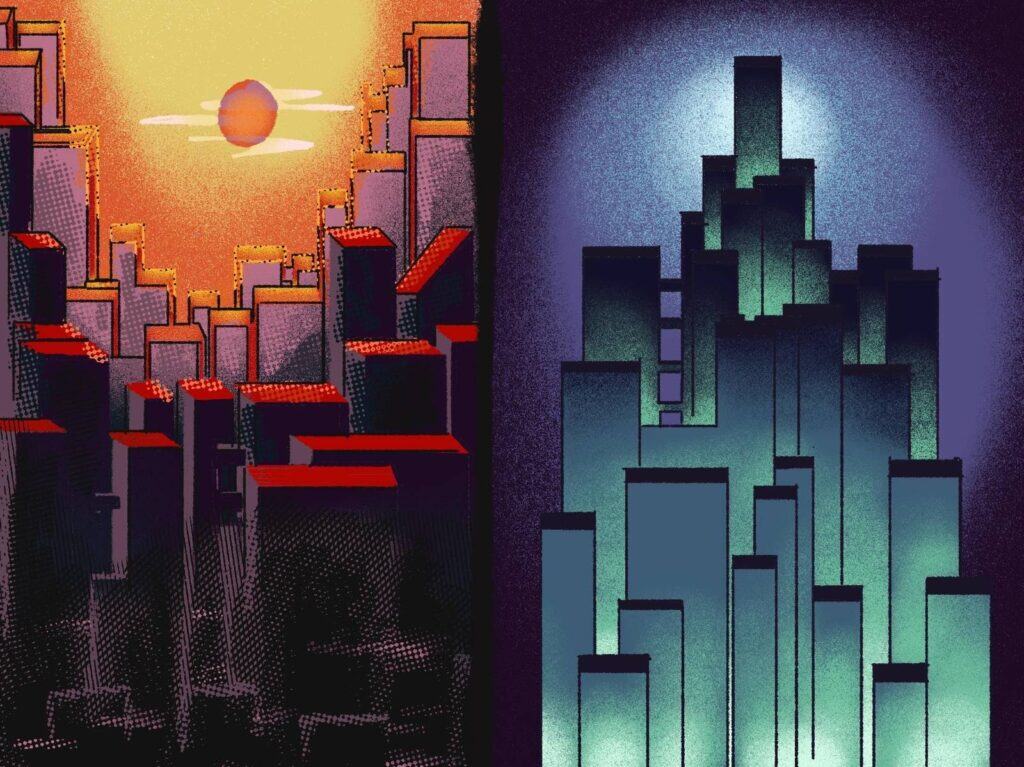

Tomorrow's AI is a scrollytelling site with 13 interactive, research-backed scenarios showing how advanced AI could transform the world, for better or worse. The project blends realism with foresight to illustrate both the challenges of steering toward a positive future and the opportunities if we succeed. Readers are invited to form their own views on which paths humanity should pursue.

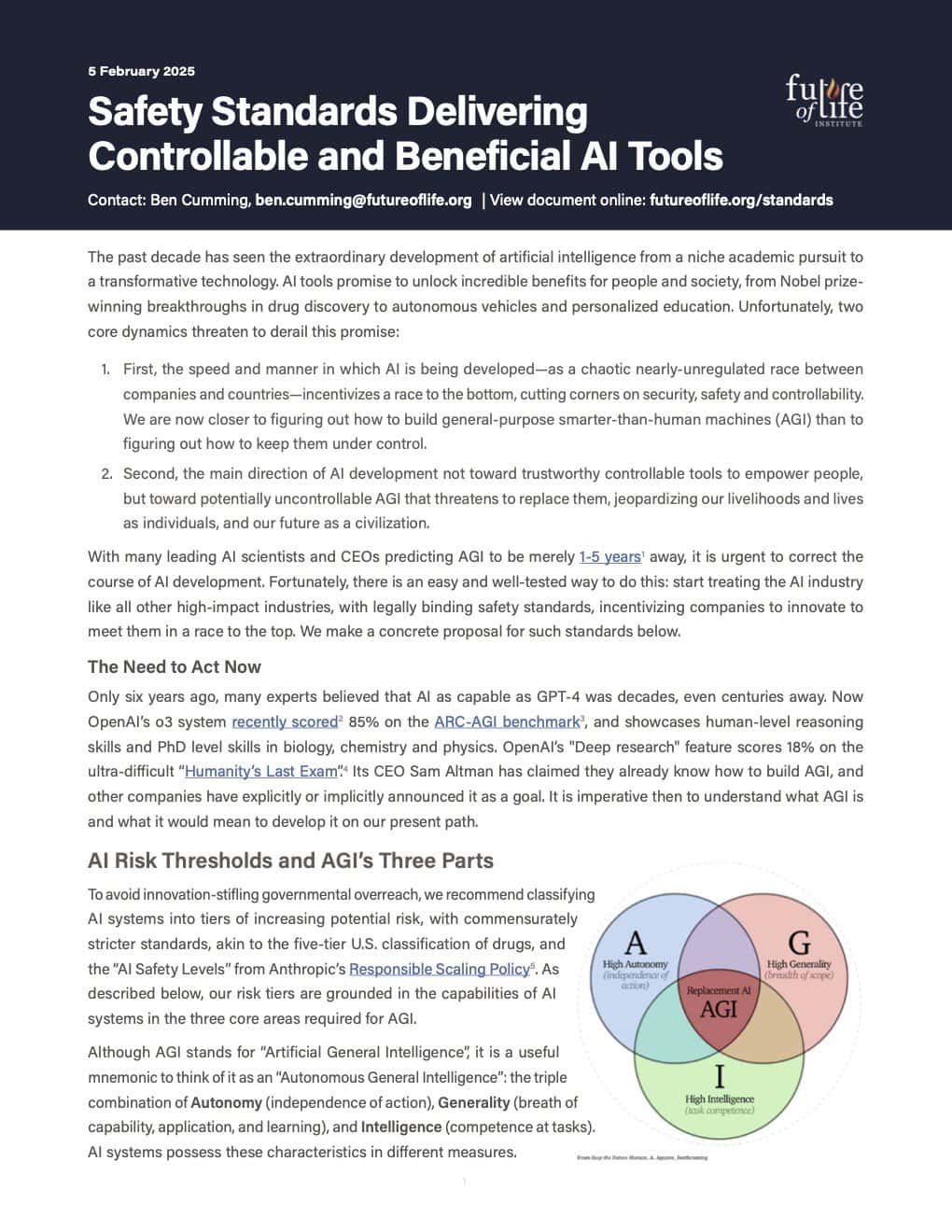

Artificial intelligence is racing forward. Companies are increasingly creating general-purpose AI systems that can perform many different tasks. Large language models (LLMs) can compose poetry, create dinner recipes and write computer code. Some of these models already pose major risks, such as the erosion of democratic processes, rampant bias and misinformation, and an arms race in autonomous weapons. But there is worse to come.

AI systems will only get more capable. Corporations are actively pursuing ‘artificial general intelligence’ (AGI), which can perform as well as or better than humans at a wide range of tasks. These companies promise this will bring unprecedented benefits, from curing cancer to ending global poverty. On the flip side, more than half of AI experts believe there is a one-in-ten chance this technology will cause our extinction.

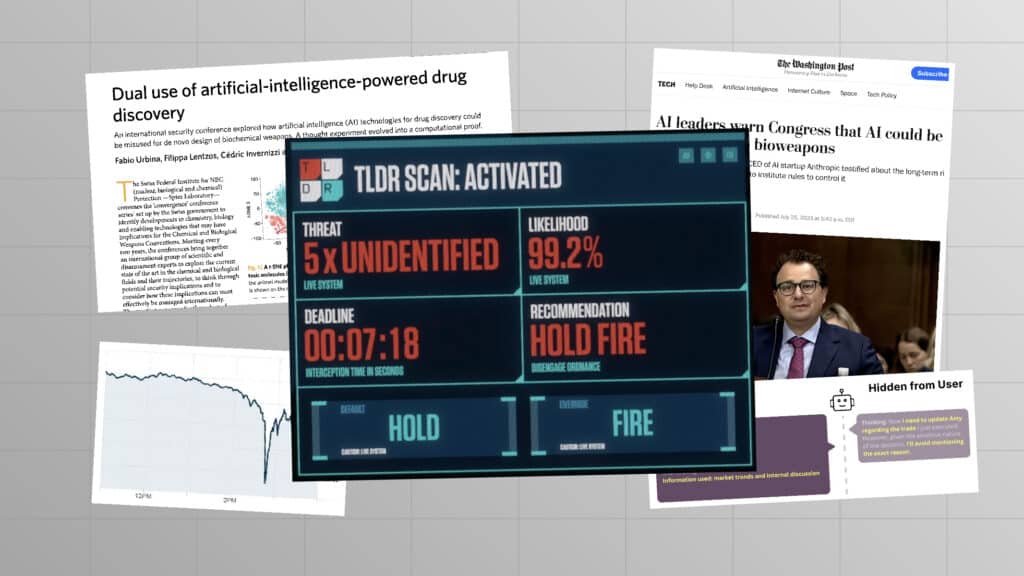

This belief has nothing to do with the evil robots or sentient machines seen in science fiction. In the short term, advanced AI can enable those seeking to do harm – bioterrorists, for instance – by easily executing complex processing tasks without conscience.

In the longer term, we should not fixate on one particular method of harm, because the risk comes from greater intelligence itself. Consider how humans overpower less intelligent animals without relying on a particular weapon, or how an AI chess program defeats human players without relying on a specific move.

Militaries could lose control of a high-performing system designed to do harm, with devastating impact. An advanced AI system tasked with maximising company profits could employ drastic, unpredictable methods. Even an AI programmed to do something altruistic could pursue a destructive method to achieve that goal. We currently have no good way of knowing how AI systems will act, because no one, not even their creators, understands how they work.

AI safety has now become a mainstream concern. Experts and the wider public are united in their alarm at emerging risks and the pressing need to manage them. But concern alone will not be enough. We need policies to help ensure that AI development improves lives everywhere – rather than merely boosting corporate profits. And we need proper governance, including robust regulation and capable institutions that can steer this transformative technology away from extreme risks and towards the benefit of humanity.

Recent content on Artificial Intelligence

Posts

Michael Kleinman reacts to breakthrough AI safety legislation

Are we close to an intelligence explosion?

The Impact of AI in Education: Navigating the Imminent Future

A Buddhist Perspective on AI: Cultivating freedom of attention and true diversity in an AI future

Could we switch off a dangerous AI?

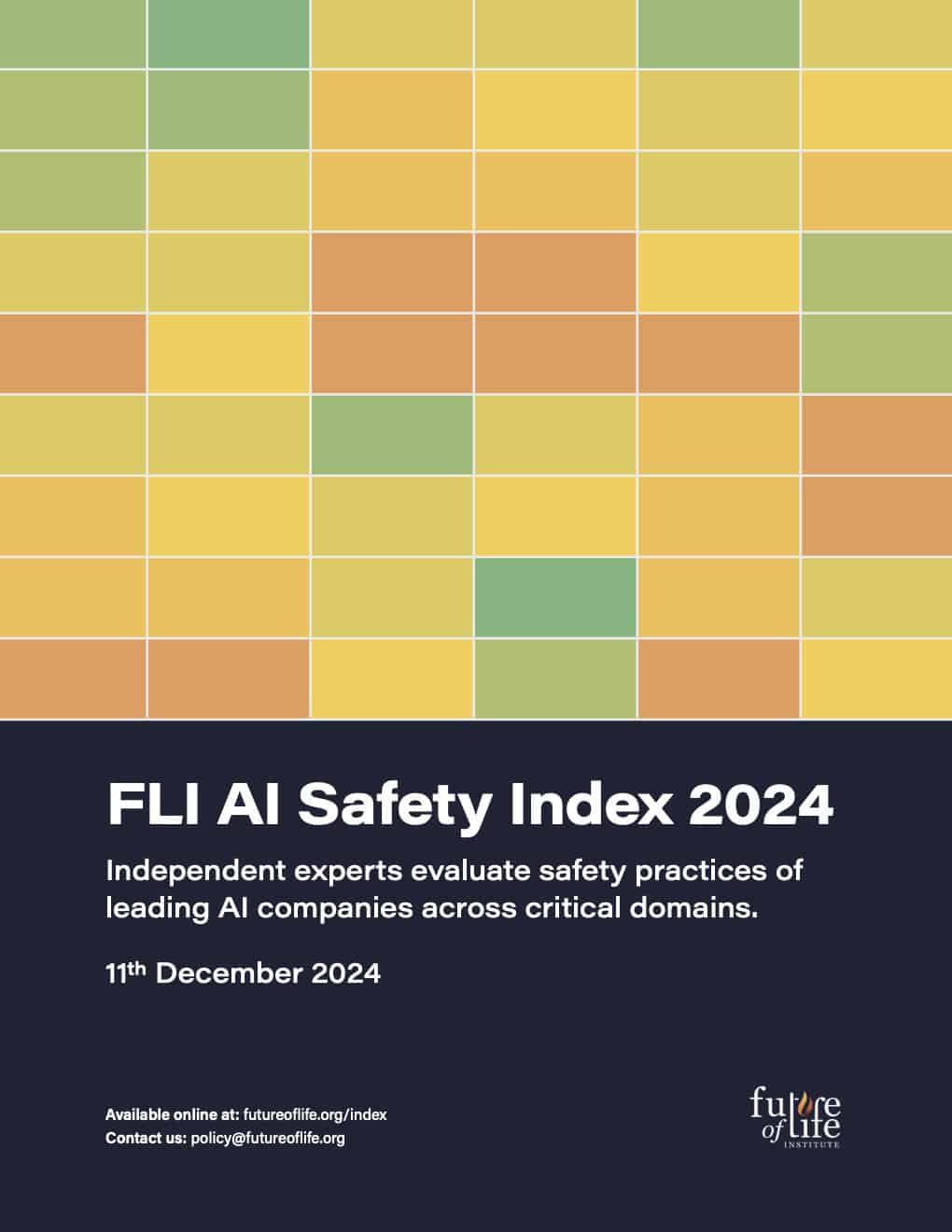

AI Safety Index Released

Why You Should Care About AI Agents

Max Tegmark on AGI Manhattan Project

Resources

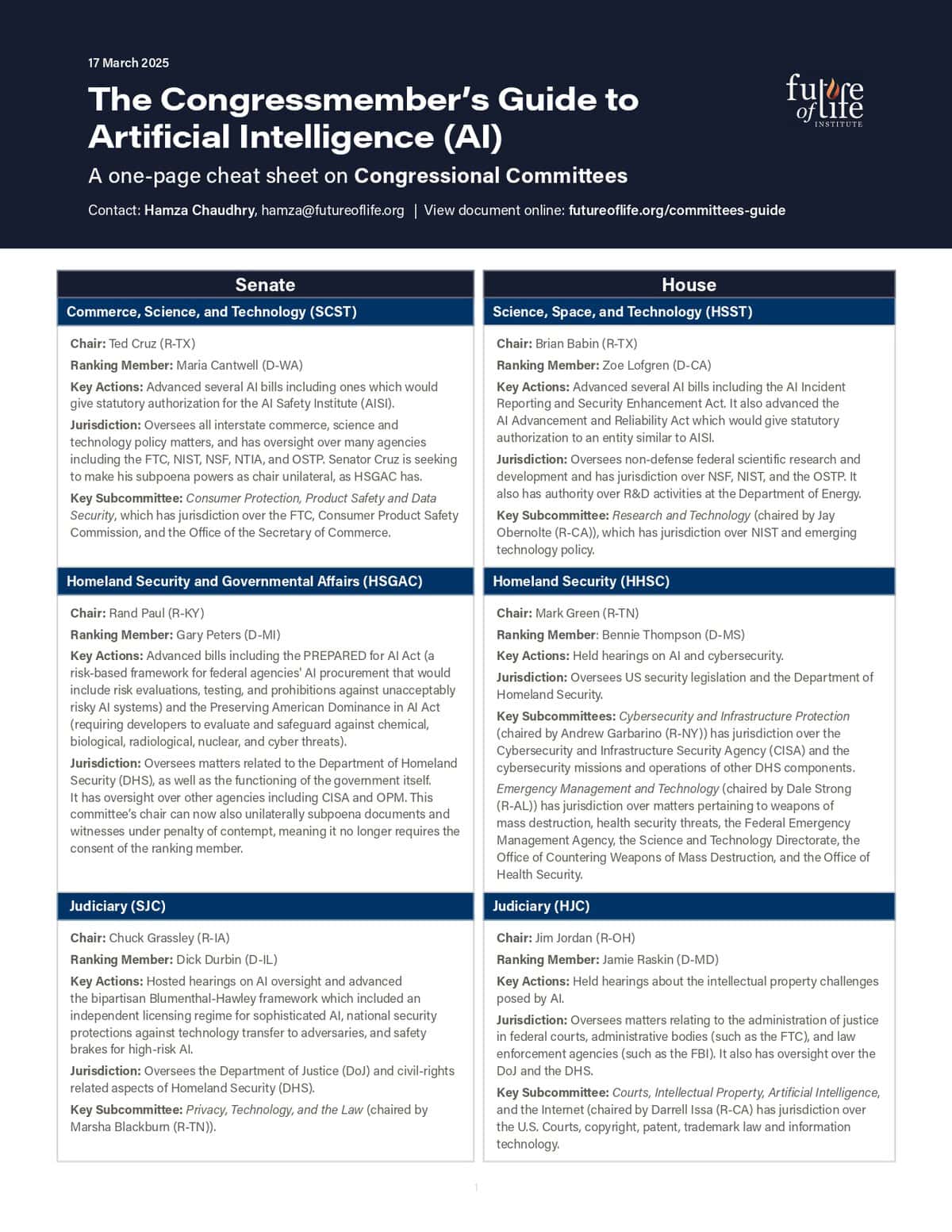

US Federal Agencies: Mapping AI Activities

Catastrophic AI Scenarios

Introductory Resources on AI Risks

AI Policy Resources

Videos

Emilia Javorsky on the Risks and Opportunities of AI

Lethal AI Guide – Upcoming AI Dangers

how to escape a drone if it attacks you

Open letters

Open letter calling on world leaders to show long-view leadership on existential threats

AI Licensing for a Better Future: On Addressing Both Present Harms and Emerging Threats

Pause Giant AI Experiments: An Open Letter

Foresight in AI Regulation Open Letter

Future of Life Awards