Our content

The central hub for all of the content we have produced. Here you can browse our most popular content and find our most recent publications.

Essentials

Essential reading

We have written a few articles that we believe everyone interested in our cause areas should read. They explore each topic more thoroughly than our cause area pages do.

Benefits & Risks of Biotechnology

Over the past decade, progress in biotechnology has accelerated rapidly. We are poised to enter a period of dramatic change, in which the genetic modification of existing organisms, or the creation of new ones, will become effective, inexpensive, and pervasive.

14 November, 2018

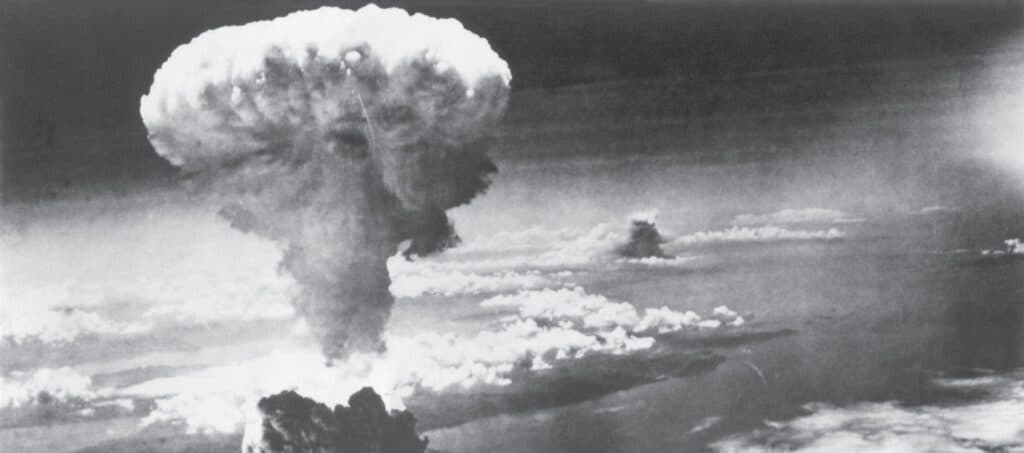

The Risk of Nuclear Weapons

Despite the end of the Cold War over two decades ago, humanity still has ~13,000 nuclear weapons, many of them on hair-trigger alert. If detonated, they may cause a decades-long nuclear winter that could kill most people on Earth. Yet the superpowers plan to invest trillions of dollars in upgrading their nuclear arsenals.

16 November, 2015

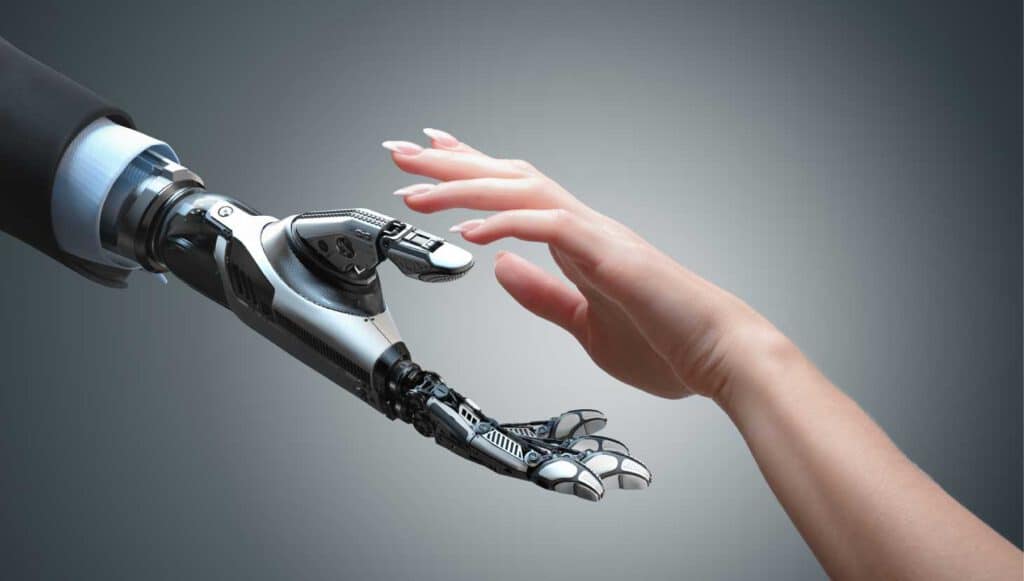

Benefits & Risks of Artificial Intelligence

From Siri to self-driving cars, artificial intelligence (AI) is progressing rapidly. While science fiction often portrays AI as robots with human-like characteristics, AI can encompass anything from Google's search algorithms to IBM's Watson to autonomous weapons.

14 November, 2015

Archives

Explore our library of content

Most popular

Our most popular content

Posts

Our most popular posts:

Benefits & Risks of Artificial Intelligence

From Siri to self-driving cars, artificial intelligence (AI) is progressing rapidly. While science fiction often portrays AI as robots with human-like characteristics, AI can encompass anything from Google's search algorithms to IBM's Watson to autonomous weapons.

14 November, 2015

Exploration of secure hardware solutions for safe AI deployment

This collaboration between the Future of Life Institute and Mithril Security explores hardware-backed AI governance tools for transparency, traceability, and confidentiality.

30 November, 2023

Benefits & Risks of Biotechnology

Over the past decade, progress in biotechnology has accelerated rapidly. We are poised to enter a period of dramatic change, in which the genetic modification of existing organisms, or the creation of new ones, will become effective, inexpensive, and pervasive.

14 November, 2018

90% of All the Scientists That Ever Lived Are Alive Today

The following paper was written and submitted by Eric Gastfriend. […]

5 November, 2015

Existential Risk

An existential risk is any risk that […]

16 November, 2015

Artificial Photosynthesis: Can We Harness the Energy of the Sun as Well as Plants?

In the early 1900s, the Italian chemist Giacomo Ciamician recognized that […]

30 September, 2016

The Risk of Nuclear Weapons

Despite the end of the Cold War over two decades ago, humanity still has ~13,000 nuclear weapons, many of them on hair-trigger alert. If detonated, they may cause a decades-long nuclear winter that could kill most people on Earth. Yet the superpowers plan to invest trillions of dollars in upgrading their nuclear arsenals.

16 November, 2015

How Do We Align Artificial Intelligence with Human Values?

A major change is coming, over unknown timescales […]

3 February, 2017

Resources

Our most popular resources:

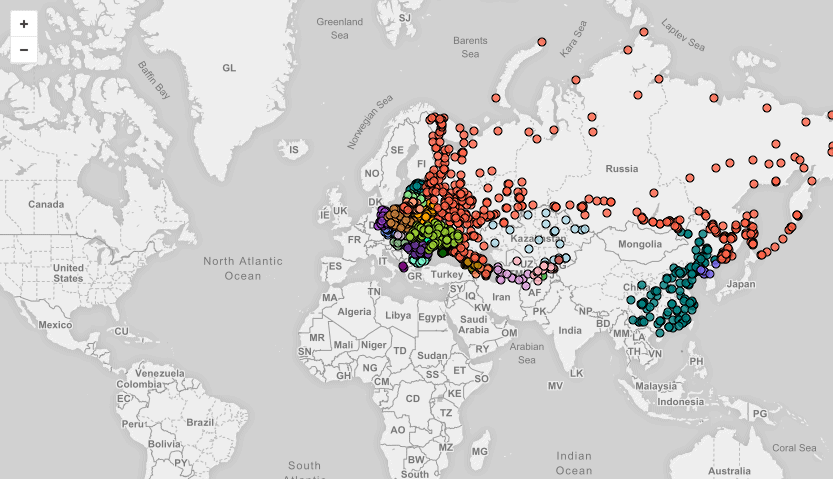

1100 Declassified U.S. Nuclear Targets

The National Security Archive recently published a declassified list of U.S. nuclear targets from 1956, which spanned 1,100 locations across Eastern Europe, Russia, China, and North Korea. The map below shows all 1,100 nuclear targets from that list, and we’ve partnered with NukeMap to demonstrate how catastrophic a nuclear exchange between the United States and Russia could be.

12 May, 2016

Responsible Nuclear Divestment

Only 30 companies worldwide are involved in the creation of nuclear weapons, cluster munitions and/or landmines. Yet a significant number […]

21 June, 2017

Global AI Policy

How countries and organizations around the world are approaching the benefits and risks of AI. Artificial intelligence (AI) holds great […]

16 December, 2022

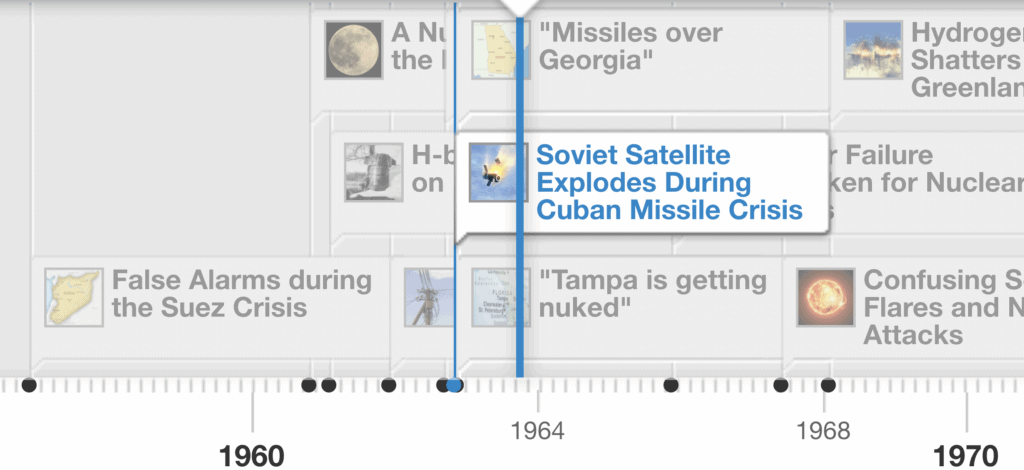

Accidental Nuclear War: a Timeline of Close Calls

The most devastating military threat arguably comes from a nuclear war started not intentionally but by accident or miscalculation. Accidental […]

23 February, 2016

Life 3.0

This New York Times bestseller by Max Tegmark tackles some of the biggest questions raised by the advent of artificial intelligence. Tegmark posits a future in which artificial intelligence has surpassed human intelligence (an era he terms “Life 3.0”) and explores what this might mean for humankind.

22 November, 2021

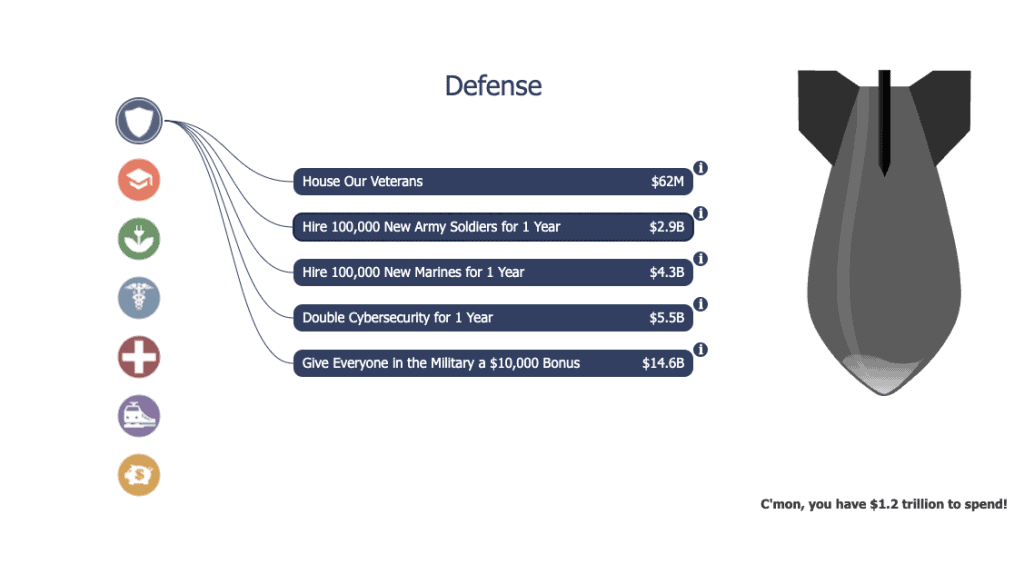

Trillion Dollar Nukes

Would you spend $1.2 trillion in tax dollars on nuclear weapons? How much are nuclear weapons really worth? Is upgrading the […]

24 October, 2016

The Top Myths About Advanced AI

Common myths about advanced AI distract from fascinating true controversies where even the experts disagree.

7 August, 2016

AI Policy Challenges

This page is intended as an introduction to the major challenges that society faces when attempting to govern artificial intelligence […]

17 July, 2018

Recently added

Our most recent content

Here is the content we have published most recently:

1 December, 2025

Congress Considers Banning State AI Laws… Again…

newsletter

1 November, 2025

Over 65,000 Sign to Ban the Development of Superintelligence

newsletter

19 October, 2025

The U.S. Public Wants Regulation (or Prohibition) of Expert‑Level and Superhuman AI

post

View all latest content

Latest documents

Here are our most recent policy papers:

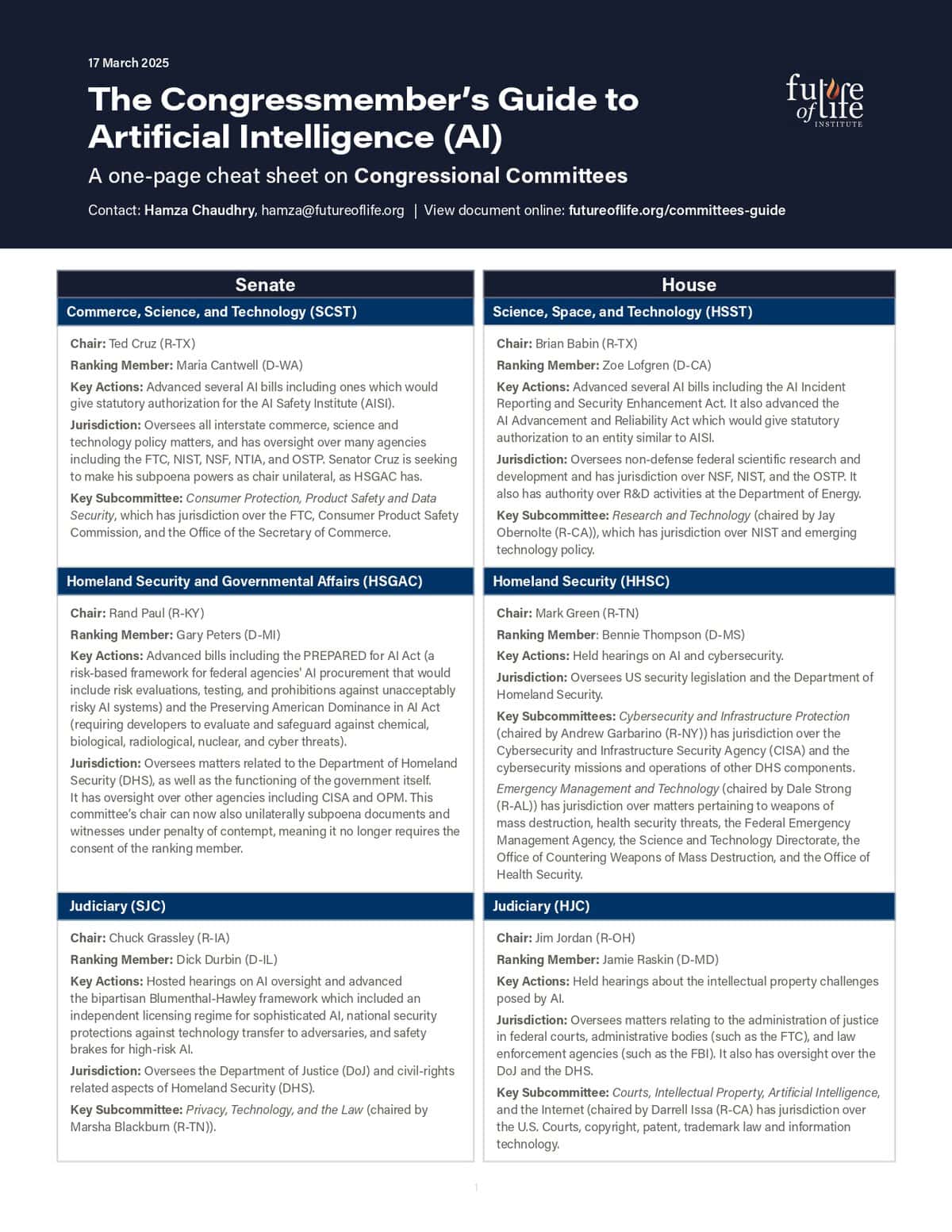

Staffer’s Guide to AI Policy: Congressional Committees and Relevant Legislation

March 2025

Recommendations for the U.S. AI Action Plan

March 2025

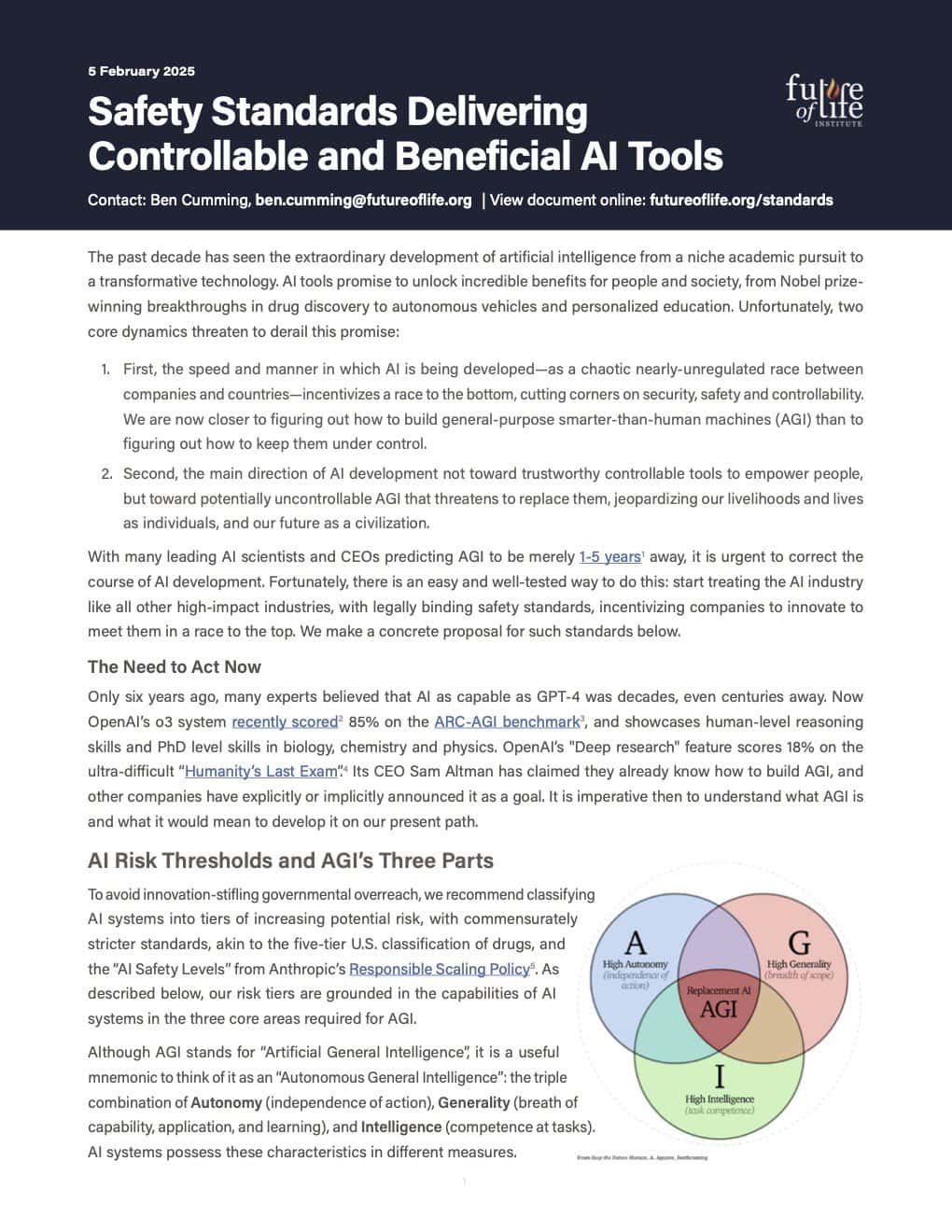

Safety Standards Delivering Controllable and Beneficial AI Tools

February 2025

Framework for Responsible Use of AI in the Nuclear Domain

February 2025

View all policy papers

Videos

Latest videos

Here are some of our recent videos:

How two films saved the world from nuclear war

13 November, 2023

Regulate AI Now

28 September, 2023

The AI Pause. What’s Next?

22 September, 2023

Artificial Escalation

17 July, 2023

Our YouTube channel

Future of Life Institute Podcast

Conversations with far-sighted thinkers.

Our namesake podcast series features the FLI team in conversation with prominent researchers, policy experts, philosophers, and a range of other influential thinkers.

Newsletter

Regular updates about the technologies shaping our world

Every month, we bring 41,000+ subscribers the latest news on how emerging technologies are transforming our world. It includes a summary of major developments in our cause areas, and key updates on the work we do. Subscribe to our newsletter to receive these highlights at the end of each month.

Congress Considers Banning State AI Laws… Again…

Plus: The first reported case of AI-enabled spying; why superintelligence wouldn't be controllable; takeaways from WebSummit; and more.

Maggie Munro

1 December, 2025

Over 65,000 Sign to Ban the Development of Superintelligence

Plus: Final call for PhD fellowships and Creative Contest; new California AI laws; FLI is hiring; can AI truly be creative?; and more.

Maggie Munro

1 November, 2025

AI at the Vatican

Plus: Fellowship applications open; global call for AI red lines; new polling finds 90% support for AI rules; register for our $100K creative contest; and more.

Maggie Munro

1 October, 2025

Read previous editions

Open letters

Add your name to the list of concerned citizens

We have written a number of open letters calling for action on our cause areas, some of which have gathered hundreds of prominent signatures. Most of these letters are still open today; add your signature to join the list of concerned citizens.

106,385 signatures

Statement on Superintelligence

We call for a prohibition on the development of superintelligence, not lifted before there is (1) broad scientific consensus that it will be done safely and controllably, and (2) strong public buy-in.

22 September, 2025

2,672 signatures

Open letter calling on world leaders to show long-view leadership on existential threats

The Elders, Future of Life Institute and a diverse range of co-signatories call on decision-makers to urgently address the ongoing impact and escalating risks of the climate crisis, pandemics, nuclear weapons, and ungoverned AI.

14 February, 2024

Closed

AI Licensing for a Better Future: On Addressing Both Present Harms and Emerging Threats

This joint open letter by Encode Justice and the Future of Life Institute calls for the implementation of three concrete US policies in order to address current and future harms of AI.

25 October, 2023

31,810 signatures

Pause Giant AI Experiments: An Open Letter

We call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4.

22 March, 2023

All open letters