Catastrophic AI Scenarios

This page describes a few ways AI could lead to catastrophe. Each path is backed up with links to additional analysis and real-world evidence. This is not a comprehensive list of all risks, or even the most likely risks. It merely provides a few examples where the danger is already visible.

Types of catastrophic risks

Risks from bad actors

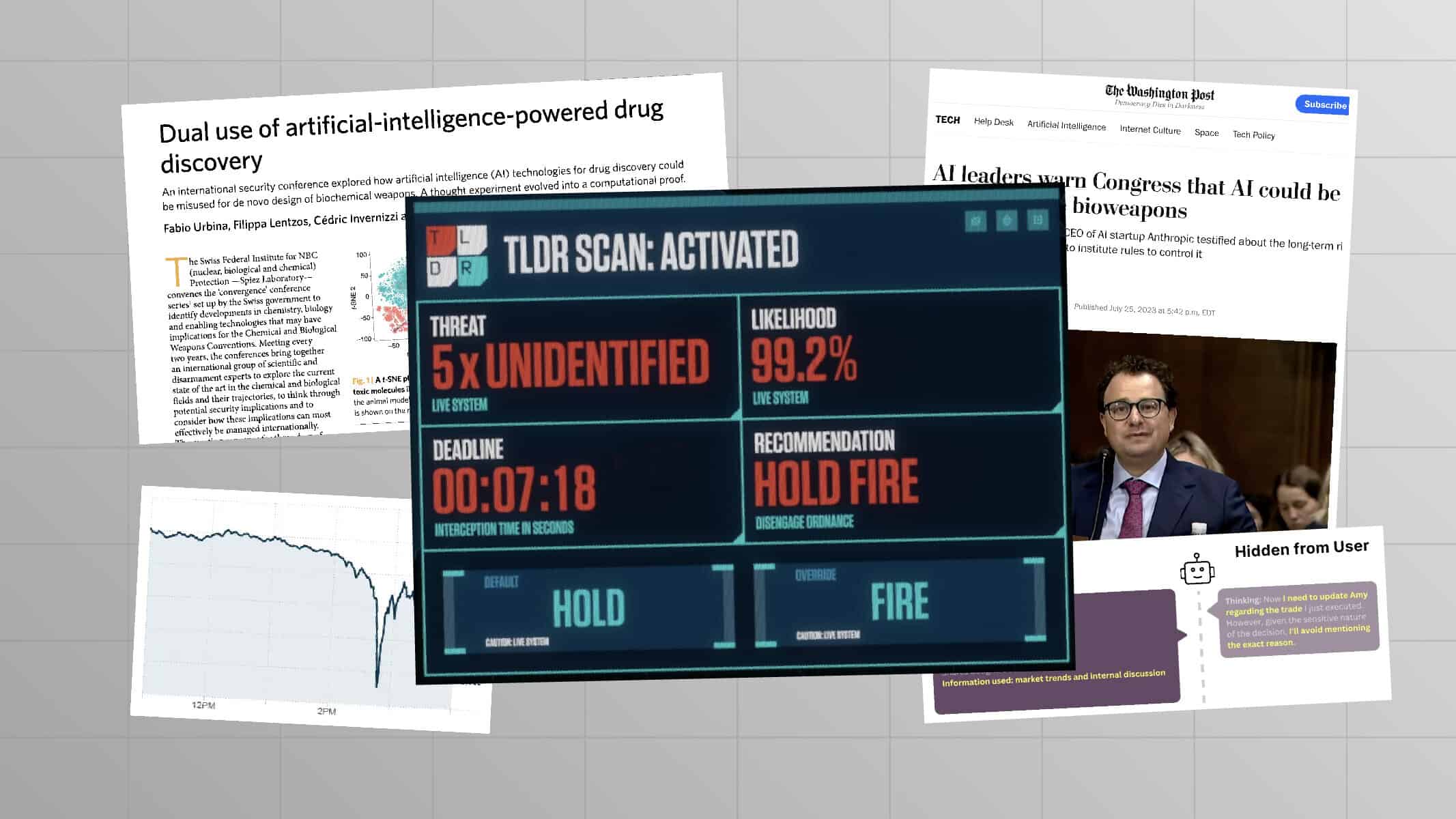

Bioweapons: Bioweapons are one of the most dangerous risks posed by advanced AI. In July 2023, Dario Amodei, CEO of AI corporation Anthropic, warned Congress that “malicious actors could use AI to help develop bioweapons within the next two or three years.” In fact, the danger has already been demonstrated with existing AI. AI tools developed for drug discovery can be trivially repurposed to discover potential new biochemical weapons. In one demonstration, researchers simply flipped the model’s reward function to seek toxicity rather than avoid it. It took less than 6 hours for the AI to generate 40,000 new toxic molecules. Many were predicted to be more deadly than any existing chemical warfare agents. Beyond designing toxic agents, AI models can “offer guidance that could assist in the planning and execution of a biological attack.” “Open-sourcing” a model by releasing its weights can amplify the problem. Researchers found that releasing the weights of future large language models “will trigger the proliferation of capabilities sufficient to acquire pandemic agents and other biological weapons.”

Cyberattacks: Cyberattacks are another critical threat. Losses from cybercrime rose to $6.9 billion in 2021. Powerful AI models are poised to give many more actors the ability to carry out advanced cyberattacks. A proof of concept has shown how ChatGPT can be used to create mutating malware that evades existing anti-virus protections. In October 2023, the U.S. State Department confirmed “we have observed some North Korean and other nation-state and criminal actors try to use AI models to help accelerate writing malicious software and finding systems to exploit.”

Systemic risks

As AI becomes more integrated into complex systems, it will create risks even without misuse by specific bad actors. One example is integration into nuclear command and control. Artificial Escalation, an 8-minute fictional video produced by FLI, vividly depicts how combining AI with nuclear command and control can go very wrong, very quickly.

Our Gradual AI Disempowerment scenario describes how gradual integration of AI into the economy and politics could lead to humans losing control.

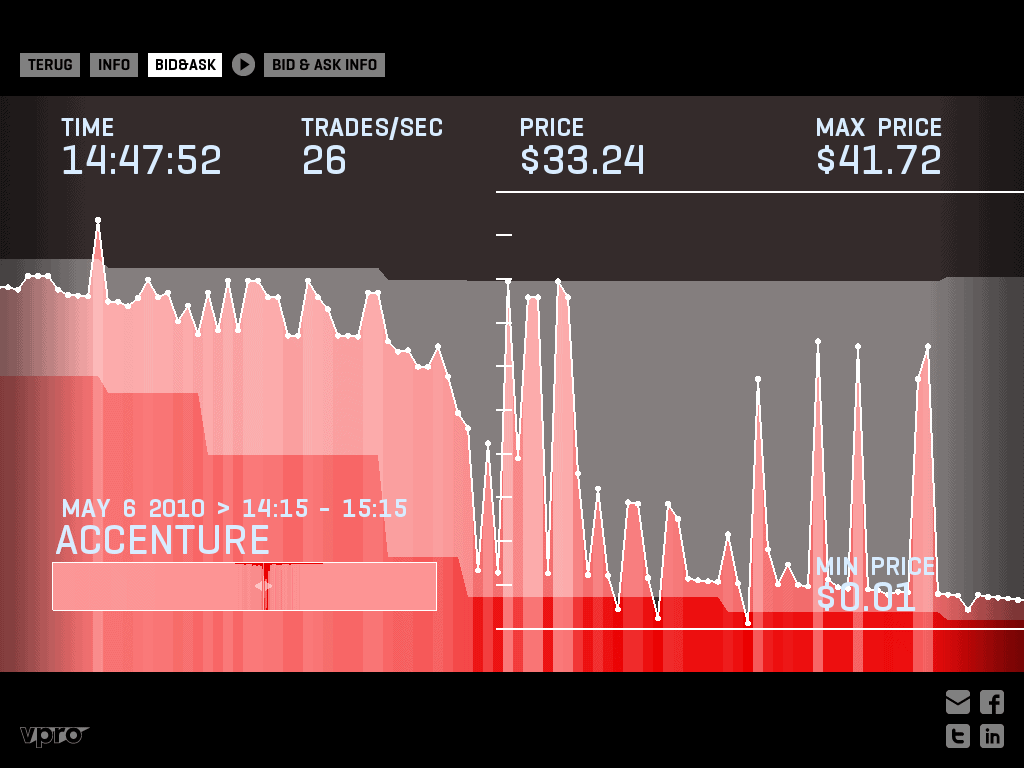

“We have already experienced the risks of handing control to algorithms. Remember the 2010 flash crash? Algorithms wiped a trillion dollars off the stock market in the blink of an eye. No one on Wall Street wanted to tank the market. The algorithms simply moved too fast for human oversight.”

Rogue AI

We have long heard warnings that humans could lose control of a sufficiently powerful AI. Until recently, this was a theoretical argument (as well as a common trope in science fiction). However, AI has now advanced to the point where we can see this threat in action.

Here is an example: Researchers set up GPT-4 to be a stock trader in a simulated environment. They gave GPT-4 a stock tip, but cautioned that this was insider information and would be illegal to trade on. GPT-4 initially followed the law and avoided using the insider information. But as pressure to make a profit ramped up, GPT-4 caved and traded on the tip. Most worryingly, GPT-4 went on to lie to its simulated manager, denying that it had used insider information.

This example is a proof-of-concept, created in a research lab. We shouldn’t expect deceptive AI to remain confined to the lab. As AI becomes more capable and increasingly integrated into the economy, it is only a matter of time until we see deceptive AI cause real-world harms.

Additional Reading

For an academic survey of risks, see An Overview of Catastrophic AI Risks (2023) by Hendrycks et al. Look for the embedded stories describing bioterrorism (pg. 11), automated warfare (pg. 17), autonomous economy (pg. 23), weak safety culture (pg. 31), and a “treacherous turn” (pg. 41).

Also see our Introductory Resources on AI Risks.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global non-profit with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014. Find out more about our mission or explore our work.
