Doomsday Clock: Two and a Half Minutes to Midnight

Is the world more dangerous than ever?

Today in Washington, D.C., the Bulletin of the Atomic Scientists announced its decision to move the famous Doomsday Clock thirty seconds closer to doom: it is now two and a half minutes to midnight.

Since 1947, the Bulletin of the Atomic Scientists has used the Doomsday Clock as a symbol of how close we are to destroying our civilization with dangerous technologies of our own making. As the Bulletin judges the existential threats we face to be growing, the minute hand inches closer to midnight.

For the past two years the Doomsday Clock has been set at three minutes to midnight.

But now, in the face of an increasingly unstable political climate, the Doomsday Clock is the closest to midnight it has been since 1953.

The clock was set at two minutes to midnight in 1953, early in the nuclear arms race, but what makes 2017 uniquely dangerous for humanity is the variety of threats we face. Not only is there growing uncertainty about nuclear weapons and the leaders who control them, but the existential threats posed by climate change, artificial intelligence, cybersecurity, and biotechnology continue to grow.

As the Bulletin notes, “The challenge remains whether societies can develop and apply powerful technologies for our welfare without also bringing about our own destruction through misapplication, madness, or accident.”

Rachel Bronson, the Executive Director and publisher of the Bulletin of the Atomic Scientists, said: “This year’s Clock deliberations felt more urgent than usual. In addition to the existential threats posed by nuclear weapons and climate change, new global realities emerged, as trusted sources of information came under attack, fake news was on the rise, and words were used by a President-elect of the United States in cavalier and often reckless ways to address the twin threats of nuclear weapons and climate change.”

Lawrence Krauss, chair of the Bulletin's Board of Sponsors, warned viewers that "technological innovation is occurring at a speed that challenges society's ability to keep pace." While these technologies offer unprecedented opportunities for humanity to thrive, they have proven difficult to control and therefore demand responsible leadership.

Given the difficulty of controlling these increasingly capable technologies, Krauss stressed the importance of science in informing policy. Scientists and groups like the Bulletin don't seek to make policy themselves, but their research and evidence should support and inform it. "Facts are stubborn things," Krauss explained, "and they must be taken into account if the future of humanity is to be preserved. Nuclear weapons and climate change are precisely the sort of complex existential threats that cannot be properly managed without access to and reliance on expert knowledge."

The Bulletin ended its public statement today with a strong message: "It is two and a half minutes to midnight, the Clock is ticking, global danger looms. Wise public officials should act immediately, guiding humanity away from the brink. If they do not, wise citizens must step forward and lead the way."

You can read the Bulletin of the Atomic Scientists' full report on its website.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014.

Related content

Other posts about Existential Risk, Recent News

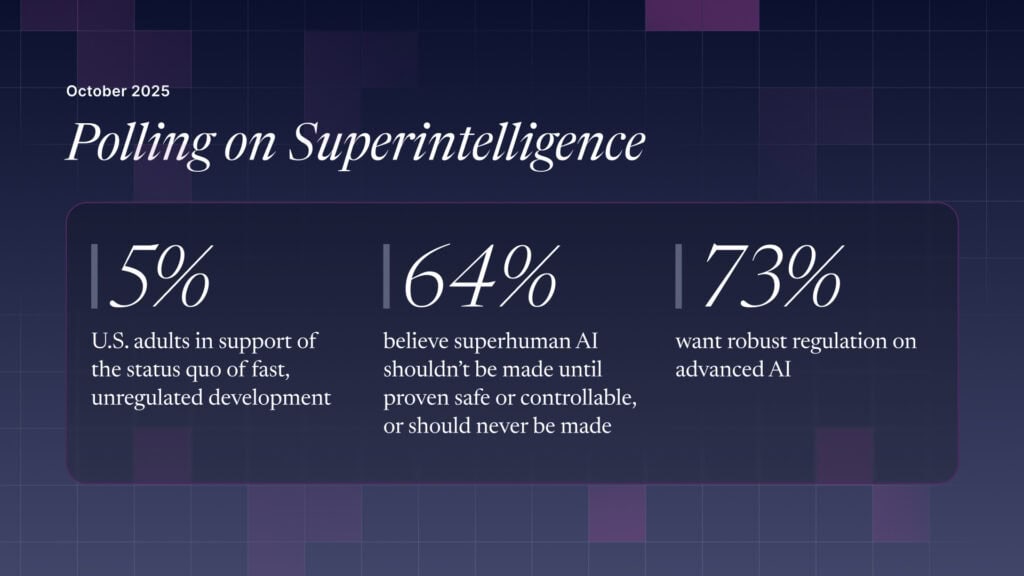

The U.S. Public Wants Regulation (or Prohibition) of Expert‑Level and Superhuman AI

Are we close to an intelligence explosion?