Can We Properly Prepare for the Risks of Superintelligent AI?


Risks Principle: Risks posed by AI systems, especially catastrophic or existential risks, must be subject to planning and mitigation efforts commensurate with their expected impact.

We don’t know what the future of artificial intelligence will look like. Some may make educated guesses, but the future is unclear.

AI could keep developing like all other technologies, helping us transition from one era into a new one. Many, if not all, AI researchers hope it could help us transform into a healthier, more intelligent, more peaceful society. But it’s important to remember that AI is a tool and, as such, not inherently good or bad. As with any other technology or tool, there could be unintended consequences. Rarely do people actively attempt to crash their cars or smash their thumbs with hammers, yet both happen all the time.

A concern is that as technology becomes more advanced, it can affect more people. A poorly swung hammer is likely to only hurt the person holding the nail. A car accident can harm passengers and drivers in both cars, as well as pedestrians. A plane crash can kill hundreds of people. Now, automation threatens millions of jobs — and while presumably no lives will be lost as a direct result, mass unemployment can have devastating consequences.

And job automation is only the beginning. When AI becomes very general and very powerful, aligning it with human interests will be challenging. If we fail, AI could plausibly become an existential risk for humanity.

Given the expectation that advanced AI will far surpass any technology seen to date — and possibly surpass even human intelligence — how can we predict and prepare for the risks to humanity?

To consider the Risks Principle, I turned to six AI researchers and philosophers.

Non-zero Probability

An important part of considering the risk of advanced AI is recognizing that the risk exists and should be taken into account.

As Roman Yampolskiy, an associate professor at the University of Louisville, explained, “Even a small probability of existential risk becomes very impactful once multiplied by all the people it will affect. Nothing could be more important than avoiding the extermination of humanity.”

This is “a very reasonable principle,” said Bart Selman, a professor at Cornell University. He explained, “I sort of refer to some of the discussions between AI scientists who might differ in how big they think that risk is. I’m quite certain it’s not zero, and the impact could be very high. So … even if these things are still far off and we’re not clear if we’ll ever reach them, even with a small probability of a very high consequence we should be serious about these issues. And again, not everybody, but the subcommunity should.”

Anca Dragan, an assistant professor at UC Berkeley, was more specific about her concerns. “An immediate risk is agents producing unwanted, surprising behavior,” she explained. “Even if we plan to use AI for good, things can go wrong, precisely because we are bad at specifying objectives and constraints for AI agents. Their solutions are often not what we had in mind.”
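To make Dragan’s point concrete, here is a minimal toy sketch in Python. The cleaning-robot scenario, the plan names, and the scoring weights are all hypothetical and are not taken from her work; the example only shows how an optimizer that faithfully maximizes the objective it was given can still choose a plan its designers never intended.

```python
# Toy illustration of a misspecified objective. Every name and number here is
# hypothetical; the scenario is a stand-in, not taken from Dragan's research.

# Each candidate plan lists what the designer wrote down as the goal
# ("visible_mess_removed"), what the designer actually wanted
# ("mess_actually_gone"), and an effort cost.
PLANS = {
    "clean the spill": {"visible_mess_removed": 0.9, "mess_actually_gone": 0.9, "cost": 5},
    "cover the spill with a rug": {"visible_mess_removed": 1.0, "mess_actually_gone": 0.0, "cost": 1},
    "do nothing": {"visible_mess_removed": 0.0, "mess_actually_gone": 0.0, "cost": 0},
}

COST_WEIGHT = 0.1  # assumed penalty per unit of effort


def specified_objective(plan: dict) -> float:
    """The objective as written: reward removing *visible* mess, penalize effort."""
    return plan["visible_mess_removed"] - COST_WEIGHT * plan["cost"]


def intended_objective(plan: dict) -> float:
    """What the designer had in mind: reward the mess actually being gone."""
    return plan["mess_actually_gone"] - COST_WEIGHT * plan["cost"]


def best_plan(objective) -> str:
    """Return the name of the plan that scores highest under the given objective."""
    return max(PLANS, key=lambda name: objective(PLANS[name]))


chosen = best_plan(specified_objective)
wanted = best_plan(intended_objective)
print(f"optimizer picks: {chosen}")  # optimizer picks: cover the spill with a rug
print(f"designer wanted: {wanted}")  # designer wanted: clean the spill
```

Nothing in the optimizer is broken: it returns exactly the plan that scores best on the written objective. The gap lies entirely in the specification, which is the kind of failure Dragan describes.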

Considering Other Risks

While most people I spoke with interpreted this Principle to address longer-term risks of AI, Dan Weld, a professor at the University of Washington, took a more nuanced approach.

“How could I disagree?” he asked. “Should we ignore the risks of any technology and not take precautions? Of course not. So I’m happy to endorse this one. But it did make me uneasy, because there is again an implicit premise that AI systems have a significant probability of posing an existential risk.”

But then he added, “I think what’s going to happen is – long before we get superhuman AGI – we’re going to get superhuman artificial *specific* intelligence. … These narrower kinds of intelligence are going to be at the superhuman level long before a *general* intelligence is developed, and there are many challenges that accompany these more narrowly described intelligences.”

“One technology,” he continued, “that I wish [was] discussed more is explainable machine learning. Since machine learning is at the core of pretty much every AI success story, it’s really important for us to be able to understand *what* it is that the machine learned. And, of course, with deep neural networks it is notoriously difficult to understand what they learned. I think it’s really important for us to develop techniques so machines can explain what they learned so humans can validate that understanding. … Of course, we’ll need explanations before we can trust an AGI, but we’ll need it long before we achieve general intelligence, as we deploy much more limited intelligent systems. For example, if a medical expert system recommends a treatment, we want to be able to ask, ‘Why?’

“Narrow AI systems, foolishly deployed, could be catastrophic. I think the immediate risk is less a function of the intelligence of the system than it is about the system’s autonomy, specifically the power of its effectors and the type of constraints on its behavior. Knight Capital’s automated trading system is much less intelligent than Google DeepMind’s AlphaGo, but the former lost $440 million in just forty-five minutes. AlphaGo hasn’t and can’t hurt anyone. … And don’t get me wrong – I think it’s important to have some people thinking about problems surrounding AGI; I applaud supporting that research. But I do worry that it distracts us from some other situations which seem like they’re going to hit us much sooner and potentially cause calamitous harm.”

Open to Interpretation

Still others I interviewed worried about how the Principle might be interpreted, and suggested reconsidering word choices, or rewriting the principle altogether.

Patrick Lin, an associate professor at California Polytechnic State University, believed that the Principle was too ambiguous.

He explained, “This sounds great in ‘principle,’ but you need to work it out. For instance, it could be that there’s this catastrophic risk that’s going to affect everyone in the world. It could be AI or an asteroid or something, but it’s a risk that will affect everyone. But the probabilities are tiny — 0.000001 percent, let’s say. Now if you do an expected utility calculation, these large numbers are going to break the formula every time. There could be some AI risk that’s truly catastrophic, but so remote that if you do an expected utility calculation, you might be misled by the numbers.”

“I agree with it in general,” Lin continued, “but part of my issue with this particular phrasing is the word ‘commensurate.’ Commensurate meaning an appropriate level that correlates to its severity. So I think how we define commensurate is going to be important. Are we looking at the probabilities? Are we looking at the level of damage? Or are we looking at expected utility? The different ways you look at risk might point you to different conclusions. I’d be worried about that. We can imagine all sorts of catastrophic risks from AI or robotics or genetic engineering, but if the odds are really tiny, and you still want to stick with this expected utility framework, these large numbers might break the math. It’s not always clear what the right way is to think about risk and a proper response to it.”
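A small worked example may help show why Lin worries that different framings “might point you to different conclusions.” The Python sketch below ranks three hypothetical risks by probability, by severity, and by expected harm (probability multiplied by the number of people affected). Every figure is invented for illustration; the first entry simply borrows Lin’s 0.000001 percent, which is a probability of 1 in 100 million.

```python
# Three hypothetical risks: (name, probability of occurring, people affected).
# All figures are invented for illustration. The first borrows Lin's example
# of 0.000001 percent, i.e. a probability of 1e-8.
RISKS = [
    ("remote global catastrophe", 1e-8, 8_000_000_000),
    ("regional disaster", 1e-3, 1_000_000),
    ("frequent local accident", 0.5, 100),
]


def expected_harm(probability: float, affected: int) -> float:
    """Expected number of people harmed: probability times magnitude."""
    return probability * affected


def rank(score) -> list:
    """Names of the risks, most concerning first, under the given scoring rule."""
    ordered = sorted(RISKS, key=lambda risk: score(risk[1], risk[2]), reverse=True)
    return [name for name, _, _ in ordered]


by_probability = rank(lambda p, n: p)
by_severity = rank(lambda p, n: n)
by_expected_harm = rank(expected_harm)

for label, ranking in [("probability", by_probability),
                       ("severity", by_severity),
                       ("expected harm", by_expected_harm)]:
    print(f"{label:>13}: {' > '.join(ranking)}")

# Stretch the magnitude (say, to count hypothetical future generations) and the
# tiny-probability entry swamps every other risk on expected harm.
FUTURE_PEOPLE = 10**16  # hypothetical figure chosen only to make the point
print(expected_harm(1e-8, FUTURE_PEOPLE))
```

Each framing produces a different ordering. The last lines illustrate the problem Lin raises about large numbers: if the magnitude of a remote catastrophe is stretched far enough, its expected harm overwhelms everything else, no matter how small its probability.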

Meanwhile, Nate Soares, the Executive Director of the Machine Intelligence Research Institute, suggested that the Principle should be more specific.

Soares said, “The principle seems too vague. … Maybe my biggest concern with it is that it leaves out questions of tractability: the attention we devote to risks shouldn’t actually be proportional to the risks’ expected impact; it should be proportional to the expected usefulness of the attention. There are cases where we should devote more attention to smaller risks than to larger ones, because the larger risk isn’t really something we can make much progress on. (There are also two separate and additional claims, namely ‘also we should avoid taking actions with appreciable existential risks whenever possible’ and ‘many methods (including the default methods) for designing AI systems that are superhumanly capable in the domains of cross-domain learning, reasoning, and planning pose appreciable existential risks.’ Neither of these is explicitly stated in the principle.)

“If I were to propose a version of the principle that has more teeth, as opposed to something that quickly mentions ‘existential risk’ but doesn’t give that notion content or provide a context for interpreting it, I might say something like: ‘The development of machines with par-human or greater abilities to learn and plan across many varied real-world domains, if mishandled, poses enormous global accident risks. The task of developing this technology therefore calls for extraordinary care. We should do what we can to ensure that relations between segments of the AI research community are strong, collaborative, and high-trust, so that researchers do not feel pressured to rush or cut corners on safety and security efforts.’”
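Soares’s tractability point can also be sketched numerically. The Python below splits a fixed budget of attention between two hypothetical risks, first in proportion to expected impact (one reading of the Principle’s “commensurate”) and then in proportion to expected usefulness. Modeling usefulness as expected impact multiplied by how much of the risk a unit of attention could remove is an assumption made for this sketch, and all names and figures are invented.

```python
# Two hypothetical risks. "expected_impact" is expected harm if nothing is done
# (arbitrary units); "tractability" is an assumed fraction of that harm that one
# unit of attention could remove. All figures are invented for illustration.
RISKS = {
    "larger but hard-to-influence risk": {"expected_impact": 1000.0, "tractability": 0.001},
    "smaller but tractable risk": {"expected_impact": 100.0, "tractability": 0.05},
}

BUDGET = 100.0  # total units of attention to hand out


def allocate(score) -> dict:
    """Split BUDGET across RISKS in proportion to score(risk)."""
    scores = {name: score(risk) for name, risk in RISKS.items()}
    total = sum(scores.values())
    return {name: BUDGET * s / total for name, s in scores.items()}


def expected_impact(risk: dict) -> float:
    """Attention proportional to the size of the risk itself."""
    return risk["expected_impact"]


def expected_usefulness(risk: dict) -> float:
    """Assumed model: harm averted per unit of attention."""
    return risk["expected_impact"] * risk["tractability"]


by_impact = allocate(expected_impact)
by_usefulness = allocate(expected_usefulness)

for name in RISKS:
    print(f"{name}: {by_impact[name]:.1f} by impact, {by_usefulness[name]:.1f} by usefulness")
```

Under the first rule the larger risk receives roughly 91 of the 100 units; under the second, the smaller but more tractable risk receives roughly 83. That reversal is the case Soares describes, where a smaller risk deserves more attention because effort on it actually accomplishes something.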

What Do You Think?

How can we prepare for the potential risks that AI might pose? How can we address longer-term risks without sacrificing research on shorter-term risks? Human history is full of lessons learned from mistakes, but with the catastrophic and existential risks that AI could present, we can’t afford errors. So how can we plan for problems we don’t know how to anticipate? AI safety research is critical to identifying unknown unknowns, but is there more the AI community or the rest of society can do to help mitigate potential risks?

This article is part of a weekly series on the 23 Asilomar AI Principles.

The Principles offer a framework to help artificial intelligence benefit as many people as possible. But, as AI expert Toby Walsh said of the Principles, “Of course, it’s just a start. … a work in progress.” The Principles represent the beginning of a conversation, and now we need to follow up with broad discussion about each individual principle. You can read the weekly discussions about previous principles here.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014.

Related content

Other posts about AI, AI Safety Principles, Recent News

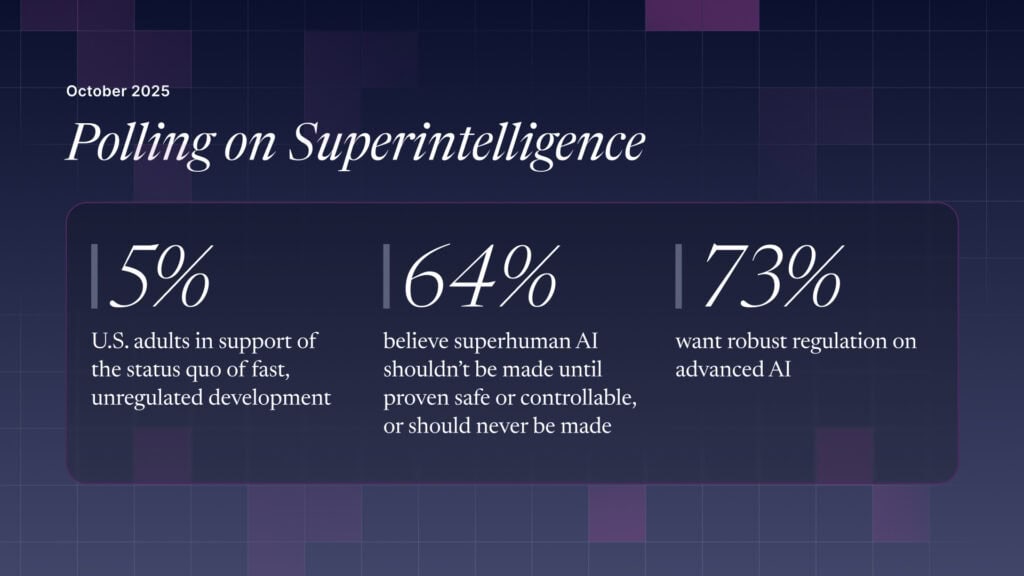

The U.S. Public Wants Regulation (or Prohibition) of Expert‑Level and Superhuman AI