The AI field is abuzz with talk of ‘agents’, the next iteration of AI poised to cause a step-change in the way we interact with the digital world (and vice versa!). Since the release of ChatGPT in 2022, the dominant AI paradigm has been Large Language Models (LLMs), text-based question and answer systems that, while their capabilities continue to become more impressive, can only respond passively to queries they are given.

Now, companies have their sights set on models that can proactively suggest and implement plans, and take real-world actions on behalf of their users. This week, OpenAI CEO Sam Altman predicted that we will soon be able to “give an AI system a pretty complicated task, the kind of task you’d give to a very smart human”, and that if this works as intended, it will “really transform things”. The company’s Chief Product Officer Kevin Weil also hinted at behind-the-scenes developments, claiming: “2025 is going to be the year that agentic systems finally hit the mainstream”. AI commentator Jeremy Kahn has forecasted a flurry of AI agent releases over the next six to eight months from tech giants including Google, OpenAI and Amazon. In just the last two weeks, Microsoft significantly increased the availability of purpose-built and custom autonomous agents within Copilot Studio, in an expansion of what Venturebeat is calling the industry’s “largest AI agent ecosystem”.

So what exactly are AI agents, and how soon (if ever) can we expect them to become ubiquitous? Should their emergence excite us, worry us, or both? We’ve investigated the state-of-the-art in AI agents and where it might be going next.

What is an AI agent?

Defining what exactly constitutes an ‘AI agent’ is a surprisingly thorny task. We could simply call any model that can perform actions on behalf of users an agent, though even LLMs such as ChatGPT might reasonably fall under this umbrella – they can construct entire essays based off of vague prompts by making a series of independent decisions about form and content, for example. But this definition fails to get at the important differences between today’s chat-based systems, and the agents that tech giants have hinted may be around the corner.

Agency exists on a spectrum. The more agency a system has, the more complex tasks it can handle end-to-end without human intervention. When people talk about the next generation of agents, they are usually referring to systems that can perform entire projects without continuous prompting from a human.

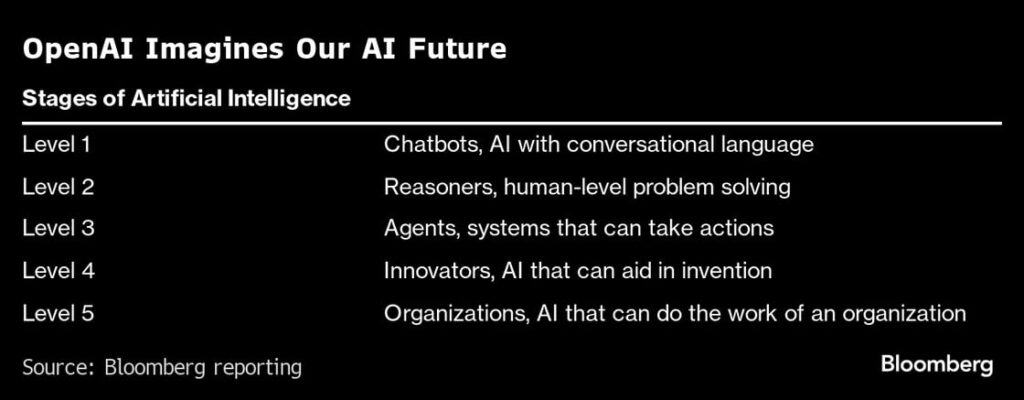

This general trend towards more agentic systems is one of the most important axes of AI development. OpenAI places ‘agents’ at the third rung on a five-step ladder towards Artificial General Intelligence (AGI). They define agents as systems that can complete multi-day tasks on behalf of users. Insiders have disclosed that the company believes itself to be closing in on the second of these five stages already, meaning that systems this transformative be just around the corner.

The difference between the chatbots of today and the agents of tomorrow becomes clearer when we consider a concrete example. Take planning a holiday: A system like GPT-4 can suggest an itinerary if you manually provide your dates, budget, and preferences. But a sufficiently powerful AI agent would transform this process. Its key advantage would be the ability to interact with external systems – it could select ideal dates by accessing your calendar, understand your preferences through your search history, social media presence and other digital footprints, and use your payment and contact details to book flights, hotels and restaurants. One text prompt to your AI assistant could set in motion an automated chain of events that culminates in a meticulous itinerary and several booking confirmations landing in your inbox.

In this video demo, an AI agent is prompted by a single prompt to send an email invitation and purchase a gift:

The holiday-booking and gift-buying scenarios are two intuitive (and potentially imminent) examples, though they are dwarfed in significance if we consider the actions that AI agents might be able to autonomously achieve as their capabilities grow. Advanced agents might be able to execute on prompts that go far beyond “plan a holiday”, able to deliver results for prompts like “design, market and launch an app” or “turn this $100,000 into $1 million” (this is a milestone that AI luminary and DeepMind co-founder Mustafa Suleyman has publicly predicted AIs will reach by 2025). In theory, there is no ceiling to how complex a task AI systems might be able to independently complete as their capabilities and integration with the wider world increase.

We’ve been focusing so far on software agents that are emerging at the frontier of general-purpose AI development, but these are of course not the only type of AI agent out there! There are many agents that already interact with the real world, such as self-driving cars and delivery drones. They use sensors to perceive their environment, complete complex, multi-step tasks without human intervention, and can react to unexpected changes in their environment. Though these agents are highly capable, they are only trained to perform a narrow set of actions (your Waymo isn’t going to start pontificating on moral philosophy in the middle of your morning commute). AI agents that have the ability to generalise across domains, and build on the capabilities of today’s state-of-the-art LLMs, are what will likely be the most transformative – which is why we’re primarily focusing on them here.

The AI agent state-of-the-art

Tech leaders have bold visions for a future that humans share with powerful AI agents. Sam Altman imagines each of us having access to “a super-competent colleague that knows absolutely everything” about our lives. DeepMind CEO Demmis Hassabis envisions “a universal assistant” that is “multimodal” and “with you all the time”. How close are they to realising these ambitions?

Here’s how much progress each of the leading AI companies has made towards advanced agents.

OpenAI

OpenAI has yet to release any products explicitly marketed as ‘agents’, though several of its products have already demonstrated some capacity for automation of complex tasks. For example, its Advanced Data Analytics tool (which is built into ChatGPT and was previously known as Code Inpreter), can write and run Python code on a user’s behalf, and independently complete tasks such as data scrubbing.

Custom GPTs are another OpenAI product that represent an important step on the way to fully autonomous agents. They can already be connected to external services and databases, which means you can create AI assistants to, for example, read and send emails, or help you with online shopping.

According to several reports, OpenAI plans to release an agent named Operator as soon as January 2025, which will have capabilities such as autonomous web browsing, making purchases, and completing routine tasks like scheduling meetings.

Anthropic

Anthropic recently released a demo of its new computer use feature, which allows its model, Claude 3.5 Sonnet, to operate computers on a user’s behalf. The model uses multimodal capabilities to ‘see’ what is happening on a screen, and count the number of pixels the cursor needs to be moved vertically or horizontally in order to click in the correct place.

The feature is still fairly error-prone, but Claude currently scores higher than any other model on OSWorld, a benchmark designed to evaluate how well AI models can complete multi-step computer tasks – making it state-of-the-art in what will likely be one of the most important capabilities for advanced agents.

Google Deepmind

Deepmind has been experimenting with AI agents in the videogame sphere, where they have created a Scalable Instructable Multiworld Agent (SIMA). SIMA can carry out complex tasks in virtual environments by following natural language prompts. Similar techniques could help produce agents that can act in the real world.

Project Astra is DeepMind’s ultimate vision for an universal, personalised AI agent. Currently in its prototype phase, the agent is pitched as set apart by its multimodal capabilities. CEO Demis Hassabis imagines that Astra will ultimately become an assistant that can ‘live’ on multiple devices and will carry about complex tasks on a user’s behalf.

Why are people building AI agents?

The obvious answer to this question is that reliable AI agents are just very useful, and will become more so as their power increases. At the personal level, a personal assistant of the type that DeepMind is attempting to build could liberate us from much of the mundane admin associated with everyday life (booking appointments, scheduling meetings, paying bills…) and free us up to spend time on the many things we’d rather be doing. On the company side, they have the clear potential to boost productivity and cut costs – for example, publishing company Wiley saw a 40% efficiency increase after adopting a much-publicised agent platform offered by Salesforce.

If developers can ensure that AI agents do not fall prey to technical failures such as hallucinations, it’s possible that they could prove more reliable than humans in high-stakes environments. OpenAI’s GPT-4 already outcompetes human doctors at diagnosing illnesses. Similarly accurate AI agents that can think over long enough time horizons to design treatment plans and assist in providing sustained care to patients, for example, could save lives if deployed at scale. Studies have also suggested that autonomous vehicles cause accidents at a lower rate than human drivers, meaning that widespread adoption of self-driving cars would lead to fewer traffic accidents.

Are we ready?

A world populated with extremely competent AI agents that interact autonomously with the digital and physical worlds may not be far away. Taking this scenario seriously raises many ethical dilemmas, and forces us to consider a wide spectrum of possible risks. Safety teams at leading AI labs are racing to answer these questions as the AI-agent era draws nearer, though the path forward remains unclear.

Malfunctions and technical failures

One obvious way in which AI agents could pose risks is in the same, mundane way that so many technologies before them have – we could deploy them in a high stakes environment, and they could break.

Unsurprisingly, there have been cases of AI agents malfunctioning in ways that caused harm. In one high-profile case, for example, a self-driving car in San Francisco struck a pedestrian, and failing to register that she was still pinned under its wheels, dragged her 20 feet as it attempted to pull over. The point isn’t necessarily that AI agents are (or will be) more unreliable than humans – as mentioned above, the opposite may even be true in the case of self-driving cars. But these early, small-scale failures are harbingers of larger ones that will inevitably occur as we deploy agentic systems at scale. Imagine the havoc that a malfunctioning agent could cause if it had been appointed CEO of a company (this may sound far-fetched, but has already happened)!

Misalignment risks

If in the early days of agentic AI systems, we have to worry about AIs not reasoning well enough, increasing capabilities may lead to the opposite problem – agents that reason too well, and that we have failed to sufficiently align with our interests. This opens up a suite of risks in a category that AI experts refer to as ‘misalignment’. In simple terms, a misaligned AI is one that is pursuing different objectives to those of its creators (or pursuing the right objectives, but using unexpected methods). There are good reasons to expect that the default behaviour of extremely capable but misaligned agents may be dangerous. For example, they could be incentivised to seek power or resist shutdown in order to achieve their goals.

There have already been examples of systems that pretend to act in the interests of users while harbouring ulterior motives (deception), tell users what they want to hear even if it isn’t true (sycophancy) or cut corners to elicit human approval (reward-hacking). These systems were unable to cause widespread harm, due to remaining less capable than humans. But if we do not find robust, scalable ways to ensure AIs always behave as intended, powerful agents may be both motivated and able to execute complex plans that harm us. This is why many experts are worried that advanced AI may pose catastrophic or even existential risks to humanity.

Opaque reasoning

We know that language models like ChatGPT work very well, but we have surprisingly little idea how they work. Anthropic CEO Dario Amodei recently estimated that we currently understand just 3% what happens inside artificial neural networks. There is an entire research agenda called mechanistic interpretability, which seeks to learn more about how AI systems acquire knowledge and make decisions – though it may be challenging for progress to keep pace with improvement in AI’s capabilities. The more complex plans AI agents are able to execute, the more of a problem this might pose. Their decision making processes may eventually prove too complicated for us to interpret, and we may have no way to verify whether they are being truthful about their intentions.

This exacerbates the problem of misalignment described above. For example, if an AI agent formulates a complex plan that involves deceiving humans, and we are unable to ‘look inside’ its neural network and decode its reasoning, we are unlikely to realise we are being deceived in time to intervene.

Malicious use

The problem of reliably getting powerful AI agents to do as we say is mirrored by another – what about situations where we don’t want them to follow their user’s instructions? Experts have long been concerned about the misuse of powerful AI, and the rationale behind this fear is very simple: there likely are many more actors in the world who want to do widespread harm than have the ability to. This is a gap that AI could help to close.

For example, consider a terrorist group attempting to build a bioweapon. Currently, highly-specialised, PhD-level knowledge is required to do this successfully – and current LLMs have, so far, not meaningfully lowered this bar to entry. But imagine that this terrorist group had access to a highly capable AI agent of the type that may exist in the not-too-distant future. A purely ‘helpful’ agent would faithfully assist them in acquiring the necessary materials, conducting research, running simulations, developing the pathogen, and carrying out an attack. Depending on how agentic this system is, it could do anything from providing support at each separate stage of this process to formulating and executing the entire plan autonomously.

AI companies apply safety features to their models to prevent them from producing harmful outputs. However, there are many examples of models being jailbroken using clever prompts. More and more powerful models are also being open-sourced, which makes safety features easy and cheap to remove. It therefore seems likely that malicious actors will find ways to use powerful AI agents to cause harm in the future.

Job automation

Even if we manage to prevent AI agents from malfunctioning or being misused in ways that prove catastrophically harmful, we’ll still be left to grapple with the issue of job automation. Though this is a perennial problem of technological progress, there are good reasons to think that this time really is different – after all, the stated goal of frontier companies is to create AI systems that “outperform humans at most economically valuable work”.

It’s easy to imagine how general-purpose AI agents might cause mass job displacement. They will be deployable in any context, able to work 24/7 without breaks or pay, and capable of producing work much more quickly than their human equivalents. If we really do get AI agents that are this competent and reliable, companies will have a strong economic incentive to replace human employees in order to remain competitive.

The transition to a world of AI agents

AI agents are coming – and soon. There may not be a discrete point at which we move from a ‘tool AI’ paradigm to a world teaming with AI agents. Instead, this transition is likely to happen subtly as AI companies release models that are able to handle incrementally more complex tasks.

AI agents hold tremendous promise. They could automate mundane tasks and free us up to pursue more creative projects. They could help us make important scientific breakthroughs in spheres such as sustainable energy and medicine. But managing this transition carefully requires addressing a number of critical issues, such as how to reliably control advanced agents, and what social safety nets we should have in place in a largely automated economy.

About the author

Sarah Hastings-Woodhouse is an independent writer and researcher with an interest in educating the public about risks from powerful AI and other emerging technologies. Previously, she spent three years as a full-time Content Writer creating resources for prospective postgraduate students. She holds a Bachelors degree in English Literature from the University of Exeter.

Susi Snyder is the programme coordinator at ICAN. Her responsibilities include facilitating the development and execution of ICAN’s key programmes, including the management of ICAN’s divestment work and engagement with the financial sector.

For more than a decade, Susi has coordinated the Don’t Bank on the Bomb project. She is an expert on nuclear weapons, with over two decades experience working at the intersect between nuclear weapons and human rights.

Susi has contributed to a number of recent books, including Forbidden (2023), A World Free from Nuclear Weapons (2020), Sleepwalking to Armageddon: The Threat of Nuclear Annihilation (2017) and War and Environment Reader (2018). She has been featured in Project Syndicate, CNBC, 360 Magazine, Quartz, the Intercept, Huffington Post, U.S. News and World Report, the Guardian, and on Deutche Welle, Al Jazeera, Democracy Now (among others).

Susi is a Foreign Policy Interrupted/ Bard College 2020 fellow and one of the 2016 Nuclear Free Future Award Laureates. Susi has worked in PAX’s humanitarian disarmament team, coordinating nuclear disarmament efforts. Susi previously served as the Secretary General of the Women’s International League for Peace and Freedom at their Geneva secretariat.

She was named Hero of Las Vegas in 2001 for her work with Indigenous populations against US nuclear weapons development and nuclear waste dumping. Susi currently lives in Utrecht, the Netherlands with her husband and son. For more about her personal anti-nuclear journey, check out Susi’s Storycorps interview.

Chase is the US Communications Manager for the Future of Life Institute. He drives FLI’s communication initiatives in the United States, from policy communications to media relations. Chase has more than a decade of experience in public affairs and issue advocacy campaigns in the fields of telecommunications, healthcare, higher education, gun violence, civil rights, and tax policy. A proud son of Phoenix, Arizona, Chase graduated from The George Washington University and lives in Washington, D.C.

A new report by the US-China Economic and Security Review Commission recommends that “Congress establish and fund a Manhattan Project-like program dedicated to racing to and acquiring an Artificial General Intelligence (AGI) capability”.

An AGI race is a suicide race. The proposed AGI Manhattan project, and the fundamental misunderstanding that underpins it, represents an insidious growing threat to US national security. Any system better than humans at general cognition and problem solving would by definition be better than humans at AI research and development, and therefore able to improve and replicate itself at a terrifying rate. The world’s pre-eminent AI experts agree that we have no way to predict or control such a system, and no reliable way to align its goals and values with our own. This is why the CEOs of OpenAI, Anthropic and Google DeepMind joined a who’s who of top AI researchers last year to warn that AGI could cause human extinction. Selling AGI as a boon to national security flies in the face of scientific consensus. Calling it a threat to national security is a remarkable understatement.

AGI advocates disingenuously dangle benefits such as disease and poverty reduction, but the report reveals a deeper motivation: the false hope that it will grant its creator power. In fact, the race with China to first build AGI can be characterized as a “hopium war” – fueled by the delusional hope that it can be controlled.

In a competitive race, there will be no opportunity to solve the unsolved technical problems of control and alignment, and every incentive to cede decisions and power to the AI itself. The almost inevitable result would be an intelligence far greater than our own that is not only inherently uncontrollable, but could itself be in charge of the very systems that keep the United States secure and prosperous. Our critical infrastructure – including nuclear and financial systems – would have little protection against such a system. As AI Nobel Laureate Geoff Hinton said last month “Once the artificial intelligences get smarter than we are, they will take control.“

The report is committing scientific fraud by suggesting AGI is almost certainly controllable. More generally, the claim that such a project is in the interest of “national security” disingenuously misrepresents the science and implications of this transformative technology, as evidenced by technical confusions in the report itself – which appears to have been without much input from AI experts. The U.S. should reliably strengthen national security not by losing control of AGI, but by building game-changing Tool AI that strengthens its industry, science, education, healthcare, and defence, and in doing so reinforce U.S leadership for generations to come.

Max Tegmark

President, Future of Life Institute

Why do you care about AI Existential Safety?

Two major focuses of my academic and research interests are environmental protection and intellectual property. In both of them, AI is more and more involved, bringing some fundamental concerns, but also conveying unexpected potentials for fast and significant improvements. In both cases, the ways AI is used and developed are, fundamentally, the issues of existential safety. In other words, almost all my personal and professional interests turn around two major issues: how to preserve the environment and how to make the best possible use of human intellectual potential, by establishing better policies and introducing improved regulatory framework. Both were heavily impacted by the advent of AI. This is why I care about AI existential safety and this is the reason of my wish to cooperate with other academics and researchers, from various fields and of different nationalities, working on AI related issues, and, especially, on AI existential safety.

Please give at least one example of your research interests related to AI existential safety:

I will give three different, but mutually dependent examples of how my recent and ongoing (2021-2025) research activities and interests are related to AI existential safety. The first concerns education on climate change and AI existential safety, the second regulatory and ethical concerns related to copyright infringements made by AI and the third the issue of AI-related threats and potentials for energy transition.

- Education on climate change and AI existential safety – Research project “AI and education on climate change mitigation – from King Midas problem to a golden opportunity?” – Grantee of the Future of Life Institute (May-September 2024) When I started working on this assignment, I was only partially aware of how and to what extent the use of AI can represent an existential threat (but also a huge potential) for global endeavors to mitigate climate change and, even more, to provide meaningful education (ECCM – education on climate change mitigation) on this issue. While the existing, general AI-assisted educational tools are, on the one hand, adaptable to quick societal changes and rapidly changing environment, on the other, they can easily be used for deceptive and manipulative purposes, conveying potentially devastating conspiracy theories. My major conclusion was that what is really needed are new, innovative AI-assisted educational tools adapted to ECCM. Apart from their cross-disciplinarity, ability to better illustrate the complexity of sustainability related issues and efficiency in combatting prejudices, misbelieves and conspiracy theories, the new AI-based educational tools have to respond to the challenges related to AI existential safety.

- Human creativity, intellectual property and AI existential safety – Research project “Regulatory and ethical evaluation of the outputs of AI-based text-to-image software solutions” – Project Leader, Innovation Fund of the Republic of Serbia (October 2023 – May 2025) The aptitude of AI-based tools to “create” various pieces of art has deeply worried not only artists, but many of us. As a lawyer with Ph.D. in intellectual property law (University of Strasbourg, France, 2010), I was particularly concerned for individual artists and smaller creative communities. Not only for them, the issue of intellectual property rights (IPRs) is the issue of existential safety. How to create the best possible regulatory framework, where genuinely human creativity will be protected and remunerated, but also allow the regulated development and use of AI in creative industries? (At least some) answers are not expected before May 2025, when this project should end.

- Energy transition and AI existential safety – Research project “Energy transition through cross-border inter-municipal cooperation” – Grantee of the Institute for Philosophy and Social Theory (July 2022 – June 2023) In this project, I examined how to use AI in energy transition in a safe and ethical way, and in supranational context.

Why do you care about AI Existential Safety?

I believe the development of advanced AI systems is one of the most important challenges facing humanity in the coming decades. While AI has immense potential to help solve global problems, it also poses existential risks if not developed thoughtfully with robust safety considerations. My research background in economics, energy, and global governance has underscored for me the complexity of steering technological progress to benefit society. I’m committed to dedicating my career to research and policy efforts to ensure advanced AI remains under human control and is deployed in service of broadly shared ethical principles. Our generation has a responsibility to proactively address AI risks.

Please give at least one example of your research interests related to AI existential safety:

One of my key research interests is the global governance of AI development, including what international agreements and institutions may be needed to coordinate AI policy and manage AI risks across nations. My background in global economic governance, including research on reform of the Bretton Woods institutions with the Atlantic Council, has heightened my awareness of the challenges of international cooperation on emerging technologies.

I’m particularly interested in exploring global public-private partnerships and multi-stakeholder governance models that could help steer AI progress, for example through joint research initiatives, standards setting, monitoring, and enforcement. Economic incentives and intellectual property regimes will also be crucial levers. I believe applying an international political economy lens, informed by international relations theory and economics, can yield policy insights.

Additional areas of interest include: the impact of AI on global catastrophic and existential risks; the intersection of AI safety with nuclear and other emerging risks; scenario planning for transformative AI; and strengthening societal resilience to possible AI-related disruptions. I’m also keen to explore the economics of AI development, including R&D incentives, labor market impacts, inequality, and growth theory with transformative AI. I believe AI safety research must be grounded in a holistic understanding of socio-economic realities.

Why do you care about AI Existential Safety?

In the security, defense and justice spaces, important questions arise about AI, power and accountability. As AI systems recognize our faces, analyse public procurement and are posited as solutions to unmanageable judicial loads, it is imperative to ask how to deploy and govern these technologies within a framework of Human Rights, the Rule of Law and good governance principles.

On the one hand, the spectres of privacy violation, black box syndrome, wired-in biases, exponential growth in inequalities and even human obsolescence are fearsome prospects. However, the possibility of, inter alia, slashing court case waiting times, identifying corruption cases and exploiting big data to address transnational crime, is tantalizing and must be pursued.

It is in this context that governance actors must contribute to the discussion and policy making, to catch up with technological advances and to explore proactively how AI systems can be used for the benefit of well governed and accountable security sectors.

Please give at least one example of your research interests related to AI existential safety:

The world’s oceans are a source of prosperity but also an arena of maritime crime and insecurity including trafficking, illegal, unregulated and unreported fishing and environmental crime. A major task here is to first track and understand these security problems so that governance can be built to address them. This is made difficult by the sheer size of maritime domain.

Coalitions of international CSOs work on a global level to build accountability and oversight for maritime trafficking and illegal, unreported and unregulated fishing, as well as labour violations on the high seas, offering evidence and policy recommendations to governments on maritime governance. They use AI technology to build vessel-tracking models from a huge data set drawn from satellite data, vessel beacons and onshore registries. The AI technology is used to red-flag vessels against risks of committing maritime crime.

This case study is useful because it directly impinges on maritime security in terms of tracking criminality at sea. It sheds light on the use of AI systems by non-governmental bodies. It is also an interesting case of AI models being used to sift through enormous amounts of data from different data sources (satellite, shore registry, vessel beacons). Furthermore, the tracking effort is done via a coalition of NGOs, which offers useful perspectives on collaborative approaches between technologists and governance specialists.

Why do you care about AI Existential Safety?

We are lucky to be living in a pivotal moment in history. We are witnessing—perhaps without fully realizing—the unfolding of the fifth industrial revolution. I believe that AI will radically change our work, our lives, and even how we relate to one another. It is in our hands to make sure that this transition is done responsibly. However, current AI developments are in mostly driven by for-profit corporations that have specific agendas. In order to make sure that such developments are also aligned with the common interest of everyday people, we need to strengthen the dialogue between AI engineers and policy-makers, as well as raise awareness about the potential risks of AI technologies. We are all aware of the risks that planes, cars, and weapons carry. But the risks of AI, despite being more subtle, do not fall behind. Language, images, videos are our everyday ways of consuming information. If this content is generated massively with specific agendas, it can easily be used to spread misinformation, polarize society, and ultimately undermine democracy. For this reason, ethics and AI should go hand-in-hand, so AI can bring about a safer society instead of being a threat.

Please give at least one example of your research interests related to AI existential safety:

I work at the intersection of control theory and natural language processing. Informally, control theory studies the behavior of dynamical systems, i.e., systems that create a trajectory over time. Using control theory, we design strategies to steer that trajectory into some desired direction. Moreover, we can often mathematically guarantee the safe behavior of the system: staying away some given region, navigating within some constraints, etc. Although control theory was initially developed for the aerospace industry (we didn’t want our planes to crash!), I believe that its principles and mathematical insights are very much suitable to study a different kind of dynamical systems: artificial intelligence systems! And in particular, foundation models. In my work, I carry out different research agendas focused into ensuring safety and controllable behavior of AI systems. One of my research directions is about how to interact with a robot in natural language, in a way that the behavior of the robot can still satisfy safety constraints. Another one of my research directions is concerned with looking at large language models as dynamical systems (systems that builds trajectories in “word” space) and using classical control theoretical techniques to do things like fine-tuning, i.e., steer the system away from undesired behavior, etc. Since control theory provides guarantees on the behavior, our methods also inherit theoretical guarantees as well. In my other research direction, I look into foundation models and using classical control-theoretical models, I try to understand how we can create models that learn more efficiently are less data-hungry: this will imply that we can actually supervise the content that we use for training, so we are not exposing our systems to toxic data; we will require less power and energy and reduce the environmental footprint; and these systems will become more accessible to more modest computational settings like universities and not belong exclusively to big corporations with huge computing power.

Why do you care about AI Existential Safety?

I care about AI existential safety because ensuring the responsible development and deployment of artificial intelligence is crucial for safeguarding humanity’s future. As AI technologies become increasingly integrated into society and impact various aspects of our lives, it’s essential to address the potential risks and ensure that AI systems are designed and deployed in ways that prioritize safety, reliability, and ethical considerations. By advocating for AI existential safety, we can foster public trust in AI, promote transparency and accountability in AI development and deployment, and ultimately ensure that AI serves as a force for good in the world.

Please give at least one example of your research interests related to AI existential safety:

One example of my research interest related to AI existential safety is in the area of responsible generative AI. In particular, I recently have focused on responsible generative AI. I have developed techniques to enable responsible text-to-image generation.

My long-term research goal is to build Responsible AI. Specifically, I am interested in the interpretability, robustness, privacy, and safety of Visual Perception, Foundation Model-based Understanding and Reasoning, Robotic Policy and Planning, and their fusion towards General Intelligence Systems.

Why do you care about AI Existential Safety?

As AI technology increasingly integrates into our daily lives and work, the scenarios it encounters also become more complex and variable. For example, autonomous vehicles must accurately identify pedestrians and other vehicles under complex weather and traffic conditions; medical diagnostic systems need to provide accurate and reliable diagnoses when facing a variety of diseases and patient differences. Therefore, enhancing the robustness and generalization capabilities of AI systems can not only help AI better adapt to new situations and reduce decision-making errors but also significantly improve the accuracy and safety of its decisions, ensuring the effective application of AI technology. Enhancing these capabilities is key to AI safety.

Please give at least one example of your research interests related to AI existential safety:

My research primarily focuses on reinforcement learning, especially its generalization capabilities and robustness against noise disturbances. These characteristics are crucial for the existential safety of artificial intelligence, as reinforcement learning models are often deployed in constantly changing and complex environments, such as autonomous vehicles and smart medical systems. In these applications, the models must adapt to unprecedented situations and environmental noise to ensure the reliability and safety of decisions. Specifically, I study ad-hoc collaborative algorithms, which allow AI systems to flexibly adjust their behavioral strategies according to the specific requirements of different environments, thus adapting to changes in the environment and effectively transferring knowledge learned in one setting to others. Additionally, I am focused on enhancing the robustness of these models in handling changes in input data or operational errors, including developing algorithms capable of automatically adapting to various inputs. These research efforts aim to advance the safety and reliability of reinforcement learning technologies, reduce the potential negative consequences triggered by artificial intelligence, maximize the positive impacts of AI technology, and bring broader benefits to human society.

Why do you care about AI Existential Safety?

AI and Machine learning holds a great promise for advancing healthcare, agriculture, scientific discovery, and more. From developing autonomous robots for monitoring poultry houses to synthesizing antimicrobial peptides for combating antibiotic resistance, to designing verifiable safety certificates for industrial-processes, I have experienced first-hand the wide-ranging impacts of technology on society. While data-driven methods have proven effective in many online tasks, employing them to make safety-critical decisions in unknown environments is still a challenge. I am passionate about applied research that addresses the broader need for mathematical safety guarantees when deploying learning-based autonomous systems in the real-world. During my PhD, I plan on building tools that bridge the gap between academic research and real-world applications.

Please give at least one example of your research interests related to AI existential safety:

During my masters, I developed algorithms for ensuring safety guarantees during the sim2real transfer of control algorithms. Parameters for data-driven algorithms need to be tuned in order to maximize performance on the real system. Bayesian Optimization (BO) has been used to automate this process. However, in case of safety-critical systems, evaluation of unsafe parameters during the optimization process should be avoided. Recently, a safe BO algorithm, SAFEOPT, was proposed ; it employs Gaussian Processes to only evaluate parameters that satisfy safety constraints with high probability. Even so, it is known that BO does not scale to higher dimensions (d > 20). To overcome this limitation, we proposed the SAFEOPT-HD algorithm that identifies relevant domain regions that efficiently trade-off performance and safety, and restricts BO search to this pre-processed domain. By employing cheap (and potentially inaccurate) simulation models, offline computations are performed using Genetic Search Algorithms, to only consider domain subspaces that are likely to contain optimal policies for a given task, thus significantly reducing domain size. When combined with SAFEOPT, we obtain a safe BO algorithm applicable for problems with large input dimensions. To alleviate the issues due to sparsity of the non-uniform preprocessed domain, a method to systematically generate new controller parameters with desirable properties is implemented. To illustrate its effectiveness, we successfully deployed SAFEOPT-HD for optimizing a 48-dimensional control policy to execute full position control of a quadrotor, while guaranteeing safety.

Currently, my research focuses on risk-sensitive reinforcement learning (RL). I argue that the tradition RL objective to maximize the expected return is insufficient to handle decision making in uncertain scenarios. To overcome this limitations, I leverage tools from the financial literature and incorporate risk measures eg conditional value-at-risk and distortion risk metrics to develop safe RL strategies.

Why do you care about AI Existential Safety?

I care about the future of humanity and I want to help build a flourishing future.

Please give at least one example of your research interests related to AI existential safety:

I have several current priorities in my work: I’m trying to understand how scale impacts the safety of AI systems. Do larger models suffer from greater safety risks? Are they harder to align? Do they have more vulnerabilities and are these vulnerabilities harder to remove?I’m interested in predictions about AI timelines. Is the ability to run and train powerful models proliferating? Will new technologies like quantum computing affect AGI timelines? As part of Oxford’s future impact group, I do work looking at the moral welfare and sentience of future AI systems. I’ve done work in interpretability of AI models including mechanistic interpretability of Go models and scaling properties of dictionary learning.

Why do you care about AI Existential Safety?

AI seems like by far the most likely way for humanity to go extinct in the near future. I’ve always been motivated by trying to do as much good as I can in the world, and was initially planning on earning to give, but realizing just how likely an AI extinction was (and in particular reading The Precipice) made me completely change my career direction. By default, I expect things to go poorly for humanity because AI safety is clearly taking a backseat to building shiny AI-powered products, and also because we’ve never before faced a problem where the first critical try is very likely to also be the last. But I also think that, because of how absurdly small this research field is, individuals can have a massive amount of influence on the future of the world by working on this issue.

Please give at least one example of your research interests related to AI existential safety:

I’m currently working on mechanistic interpretability under Neel Nanda. My goal there is to allow us to create white-box model evaluations and inference-time monitoring based on the internal states of models, which we could use to make superalignment safer (since I expect alignment is too difficult to solve “manually”, and therefore needs to be solved by AIs instead). Before that, I worked on theory of safe reinforcement learning and on neuroconnectionism (i.e. using insights from neuroscience for alignment fine-tuning).

Why do you care about AI Existential Safety?

Human intelligence, including its limitations, is foundational to our society. Advanced artificially intelligent systems therefore stand to have foundational impacts on our society, with potential outcomes ranging from enormous benefits to outright extinction, depending on how the systems are designed. I believe that safely navigating this transition is a grand and pressing techno-social challenge facing society. What better motivation could there be?

Please give at least one example of your research interests related to AI existential safety:

The focus of my research is on understanding emergent goal-directedness in learned AI systems. Goal-directed advanced AI systems present risks when their goals are incompatible with our own, causing them to act adversarially towards us. This risk is exacerbated when goal-directedness emerges unexpectedly through a learning process, or if the goals that emerge are different from the goals we would have chosen for a system.

I am pursuing theoretical and empirical research to demonstrate, understand, and control the emergence of goals in deep reinforcement learning systems. This includes identifying robust behavioral definitions of goal-directed behavior, uncovering internal mechanisms implementing goal-directedness within learned systems, and studying the features of the learning pipeline (system architecture, data/environment, learning algorithm) that influence the formation of these structures and behaviors.

Why do you care about AI Existential Safety?

AI safety is a subject of profound importance to me because I am deeply invested in work that maximizes human wellbeing. The rapid advancements in artificial intelligence bring us closer to achieving strong AI, almost certainly within our lifetime. This transformative technology promises to revolutionize every aspect of society, from healthcare and education to transportation and economics. However, with such monumental potential comes significant risk. If we do not approach the development and deployment of AI with careful consideration, the consequences could be dire, impacting the trajectory of human history in unpredictable and harmful ways.

Please give at least one example of your research interests related to AI existential safety:

My research interests lie at the intersection of AI existential safety and various machine learning methods. On the theoretical side, I am deeply interested in understanding how neural networks do what they do, and these interests range from research on how networks learn representations in infinite-width networks through to mechanistic interpretability with sparse auto encoders. On the more practical side, I am interested in how to design robust instruction fine-tuning schemes, perhaps involving Bayesian inference to reason about uncertainty.

The potential for artificial intelligence to pose existential risks to humanity is a critical concern that drives my research interests. One approach to addressing these risks is to understand the operation of these networks, so we can potentially discern how and when they may begin to present safety risks. My work in this area extends back to work on the “deep kernel process/machine” programme, which gives a strong theoretical understanding of how infinite, Bayesian neural networks learn representations. At present, my work in this area extends to extending the sparse auto-encoder approach to mechanistic interpretability to obtain an understanding of the full network, and not just isolated circuits.

In the near term, perhaps the most promising approach to AI safety is instruction tuning. I am deeply interested in improving the accuracy, robustness and generalisation in instruction tuning. One approach that my group is pursuing is to leverage Bayesian uncertainty estimation so that reward models understand the regions where they have lots of data, and can therefore be certain about their reward judgements, from regions where they have little data and can be less certain. These uncertain regions may correspond to regions where generalisation is poor, or even flag adversarial inputs. The fine tuned network can then be steered away from responding in these potentially dangerous regions.

Why do you care about AI Existential Safety?

Clearly, currently we don’t have a good understanding about the mechanisms used by the current models to demonstrate a capability. Further, things have become worse in the era of large language models because these models elicit some surprising capabilities and sometimes such capabilities are demonstrated in very specific scenarios. This makes is extremely difficult to reliably estimate the presence of a capability in these systems.

This is worrying because this could mean that in future the super intelligent systems could learn some extremely dangerous capabilities while successfully hiding them from us in normal scenarios. As a result, if we continue to develop more intelligent systems, they might get better than us in not just possessing better capabilities but also in finding ways of not expressing them. This could prove fatal for the society and we could lose control.

Please give at least one example of your research interests related to AI existential safety:

Currently, I am working on understanding how safety fine-tuning makes a model safe and how different types of jailbreaking attacks are able to bypass the safety mechanism learned by safety fine-tuning. This analysis can help in developing more principled and improved safety training methods. It can also provide a better understanding about why “fine-tuning” is not sufficient to enhance safety of current LLMs.

Why do you care about AI Existential Safety?

Advanced AI systems pose major risks if not developed responsibly. Misuse by governments or corporations could seriously threaten human rights through surveillance, suppressing dissent, algorithmic profiling, and increasing loss of freedoms. Even more alarming, improperly designed superintelligent AI coupled with drones and robots could potentially pose existential risks to humanity. To prevent this, AI development must prioritize human rights, strong oversight and accountability, and extensive safety research to ensure it remains reliably beneficial and aligned with human values

Please give at least one example of your research interests related to AI existential safety:

Broadly, I am interested in issues of AI safety in the context of law enforcement and public governance, with a particular focus on the development of risk management strategies and safeguards for AI development and deployment to prevent the infringement of human and civil rights.