Ben is Communications Director at the Future of Life Institute. Prior roles include Chief Communications Officer at the Responsible Business Initiative for Justice, and Deputy Head of Conferences/External Relations at Chatham House (The Royal Institute of International Affairs). He has an M.A. in Politics and International Relations from the University of Edinburgh, and studied at the Royal Academy of Dramatic Art. He is a Rising Leaders Fellow of Aspen Institute UK, and sits on the Board of Trustees for the nonprofits Youth Voice Trust and Action on Poverty. He is a recognized commentator and international speaker on issue-led communications, and has had bylines in publications such as PRWeek, The Telegraph and MIT Sloan Management Review.

Hamza is AI and National Security Lead at the Future of Life Institute. Based in Washington DC, he leads federal government engagement on AI and national security risks, including the intersection of AI with weapons of mass destruction (WMD) and cyber capabilities, the integration of AI into military systems, and compute security.

His work on these issues has been featured in TIME, The Hill, the Bulletin of the Atomic Scientists, The Lancet, and Foreign Affairs, and at the United Nations, as well as in collaborations with experts at the State Department and the Department of Homeland Security.

Hamza has served as a Gleitsman Leadership Fellow at Harvard University’s Center for Public Leadership and has completed a Master in Public Policy from the John F. Kennedy School of Government at Harvard. He has previously completed undergraduate and postgraduate degrees in international relations and policy from the London School of Economics.

“We applaud the Committee for seeking the counsel of thoughtful, leading experts. Advanced AI systems have the potential to exacerbate current harms such as discrimination and disinformation, and present catastrophic and even existential risks going forward. These could emerge due to misuse, unintended consequences, or misalignment with our ethics and values. We must regulate to help mitigate such threats and steer these technologies to benefit humanity.

“As Stuart and Yoshua have both said in the past, the capabilities of AI systems have outpaced even the most aggressive estimates by most experts. We are grossly unprepared, and must not wait to act. We implore Congress to immediately regulate these systems before they cause irreparable damage.

Effective oversight would include:

- The legal and technical mechanisms for the federal government to implement an immediate pause on development of AI more powerful than GPT-4

- Requiring registration of large computing clusters, which will allow regulators to monitor the development of powerful AI systems

- Establishing a rigorous process for auditing risks and biases of these systems

- Requiring approval and licenses for the deployment of powerful AI systems, which would be contingent upon developers proving their systems are safe, secure, and ethical

- Clear red lines about what risks are intolerable under any circumstances

“Funding for technical AI safety research is also crucial. This will allow us to ensure the safety of our current AI systems, and increase our capacity to control, secure, and align any future systems.

“The world’s leading experts agree that we should pause development of more powerful AI systems to ensure AI is safe and beneficial to humanity, as demonstrated in the March letter coordinated by the Future of Life Institute. The federal government should have the capability to implement such a pause. The public also agrees that we need to put regulations in place: nearly three-quarters of Americans believe that AI should be either somewhat or heavily regulated by the government, and the public favors a pause by a 5:1 margin. These regulations must be urgently and thoroughly implemented – before it is too late.”

Dr Anthony Aguirre, Executive Director, Future of Life Institute

Welcome to the Future of Life Institute newsletter. Every month, we bring 28,000+ subscribers the latest news on how emerging technologies are transforming our world – for better and worse.

If you’ve found this newsletter helpful, why not tell your friends, family and colleagues to subscribe here?

Today’s newsletter is a 6-minute read. We cover:

- FLI releases our most realistic nuclear war simulation yet

- The EU AI Act draft has been passed by the European Parliament

- Leading AI experts debate the existential threat posed by AI

- New research shows how large language models can aid bioterrorism

- A nuclear war close call, from one faulty chip

A Frighteningly Realistic Simulation of Nuclear War

On Thursday, we released our most scientifically realistic simulation of what a nuclear war between Russia and the United States might look like, accompanied by a Time article written by FLI President Max Tegmark.

Here’s what detailed modelling says most major cities around the world would experience in a nuclear war:

- An electromagnetic pulse blast knocking out all communications.

- An explosion that melts streets and buildings.

- A shockwave that shatters windows and bones.

- Soot and debris rising so high into the atmosphere that they shroud the Earth, catastrophically lowering global temperatures and consequently starving 99% of all humans.

The Bulletin of the Atomic Scientists’ Doomsday Clock has never been closer to midnight than it is now. Learn more about the risks posed by nuclear weapons and find out how you can take action to reduce the risks here.

Additionally, check out our previous and ongoing work on nuclear security:

- We are thrilled to reveal the grantees of our Humanitarian Impacts of Nuclear War program. Find the list of projects here; together they total over $4 million in grants awarded in this round.

- Our video further explaining the nuclear winter consequences of nuclear war: The Story of Nuclear Winter.

- The 2022 Future of Life Award was given to eight individuals for their contributions to discovering nuclear winter and raising awareness of it. Watch their stories here.

European Lawmakers Agree on Draft AI Act

In mid-June, the European Parliament passed a draft version of the EU AI Act, a further step toward implementing the world’s most comprehensive artificial intelligence legislation to date. We hope other governments will follow with similar sets of rules.

From here, the Parliament’s version of the AI Act moves to negotiations, starting in July, between the European Parliament, the European Commission, and the 27 member states. EU representatives have said they intend to finalise the Act by the end of 2023.

Wondering how well foundation model providers such as OpenAI, Google, and Meta currently meet the draft regulations? Researchers from the Stanford Center for Research on Foundation Models and Stanford Institute for Human-Centered AI published a paper evaluating how well 10 such companies comply, finding significant gaps in performance. You can find their paper in full here.

Stay in the loop:

As the EU AI Act progresses to its final version, be sure to follow our dedicated AI Act website and newsletter to stay up-to-date with the latest developments.

AI Experts Debate Risk

FLI President Max Tegmark, together with AI pioneer Yoshua Bengio, took part in a Munk Debate supporting the thesis that AI research and development poses an existential threat. AI researcher Melanie Mitchell and Meta’s Chief AI Scientist Yann LeCun opposed the motion.

When polled at the start of the debate, 67% of the audience agreed that AI poses an existential threat, with 33% disagreeing; by the end, 64% agreed while 36% disagreed with the thesis.

It’s also worth noting that the Munk Debate voting application didn’t work at the end of the debate, so final results reflect audience polling via email within 24 hours of the debate, instead of immediately following its conclusion.

You can find the full Munk Debate recording here, or listen to it as a podcast here.

Governance and Policy Updates

AI policy:

▶ U.S. Senate Majority Leader Chuck Schumer announced his “SAFE Innovation” framework to guide the first comprehensive AI regulations in the U.S.; he also announced “AI Insight Forums” which would convene AI experts on a number of AI-related concerns.

▶ The Association of Southeast Asian Nations (ASEAN), a 10-member bloc with a combined population of 668 million, is set to establish “guardrails” on AI by early 2024.

▶ U.S. President Joe Biden met with civil society tech experts to discuss AI risk mitigation. Meanwhile, the 2024 election approaches with no clarity on how the use of AI in election ads and related media is to be controlled.

Climate change:

▶ A report released Wednesday by the UK’s Climate Change Committee expressed concern about the British Government’s ability to meet its Net Zero goals, citing its lack of urgency.

▶ In more hopeful news, Spain is significantly raising its clean energy targets for the next decade and now aims for renewables to produce 81% of its electricity by 2030.

Updates from FLI

▶ FLI President Max Tegmark spoke on NPR about the use of autonomous weapons in battle, and the need for a binding international treaty on their development and use.

▶ FLI’s Dr. Emilia Javorsky took part in a panel discussing the intersection between artificial intelligence and creativity, put on by Hollywood, Health & Society at the ATX TV Festival in early June. The recording is available here.

▶ At the 7th Annual Center for Human-Compatible AI Workshop, FLI board members Victoria Krakovna and Max Tegmark, along with external advisor Stuart Russell, participated in a panel on aligning large language models to avoid harm.

▶ Our podcast host Gus Docker interviewed Dr. Roman Yampolskiy about objections to AI safety, Dan Hendrycks (director of the Center for AI Safety) on his evolutionary perspective on AI development, and Joe Carlsmith on how we change our minds about AI risk.

New Research: Could Large Language Models Cause a Pandemic?

AI meets biorisk: A concerning new paper describes how, after just one hour of interaction, large language models (LLMs) such as the AI system behind ChatGPT were able to give MIT undergraduates a list of four pathogens that could be weaponised, along with concrete strategies for engineering them to cause a deadly pandemic.

Why this matters: While AI presents many possible risks on its own, its convergence with biotechnology is another area of massive potential harm. Because LLMs can be leveraged in this way, bad actors have new opportunities to create biological weapons with far less expertise than ever before.

What We’re Reading

▶ Categorizing Societal-Scale AI Risks: AI researchers Andrew Critch and Stuart Russell developed an exhaustive taxonomy, centred on accountability, of societal-scale and extinction-level risks to humanity from AI.

▶ Looking Back: Writer Scott Alexander presents a fascinating retrospective of AI predictions and timelines, comparing survey results from 352 AI experts in 2016 to the current status of AI in 2023.

▶ FAQ on Catastrophic AI Risk: AI expert Yoshua Bengio posted an extensive discussion of frequently asked questions about catastrophic AI risks.

▶ What We’ll Be Watching: On 10 July, Netflix is releasing its new “Unknown: Killer Robots” documentary on autonomous weapons, as part of its “Unknown” series. FLI’s Dr. Emilia Javorsky features in it – you can watch the newly-released trailer here.

Hindsight is 20/20

In the early hours of 3 June 1980, the message that usually read “0000 ICBMs detected 0000 SLBMs detected” on missile detection monitors at U.S. command centres suddenly changed, displaying varying numbers of incoming missiles.

U.S. missiles were prepared for launch and nuclear bomber crews started their engines, among other preparations. Thankfully, before any action was taken, the displayed numbers were reassessed: there had been a glitch.

The same false alarm happened three days later, before the faulty computer chip was finally discovered. The entire system – on which world peace was dependent – was effectively unreliable for several days because of one defective component.

In this case, and in so many other close calls, human reasoning helped us narrowly avoid nuclear war. How many more close calls will we accept?

FLI is a 501(c)(3) non-profit organisation, meaning donations are tax exempt in the United States. If you need our organisation number (EIN) for your tax return, it’s 47-1052538. FLI is registered in the EU Transparency Register. Our ID number is 787064543128-10.

Stephen William Hawking was an English theoretical physicist, cosmologist, and author who was born on January 8, 1942, in Oxford, England. At the time of his death on March 14, 2018, he was the director of research at the Centre for Theoretical Cosmology at the University of Cambridge. Between 1979 and 2009, he was the Lucasian Professor of Mathematics at the University of Cambridge, widely viewed as one of the most prestigious academic posts in the world. During his lifetime, Hawking made revolutionary contributions to our understanding of the nature of the universe.

As the Communications Strategist at the Future of Life Institute, Maggie supports the development and execution of FLI’s communications and outreach strategy, along with managing FLI’s social media presence and monthly newsletter. Prior to joining FLI, she worked in American politics. Maggie holds an Honours degree in Political Science and Communication Studies from McGill University.

Welcome to the Future of Life Institute newsletter. Every month, we bring 27,000+ subscribers the latest news on how emerging technologies are transforming our world – for better and worse.


Today’s newsletter is a 6-minute read. We cover:

- Progress on the EU AI Act

- Pioneering AI scientists speak out about existential risk

- Best Practices for AGI Safety and Governance

- How a 1967 solar flare nearly led to nuclear war

The AI Act Clears Another Hurdle!

The Internal Market Committee and the Civil Liberties Committee of the European Parliament have passed their version of the AI Act. We’re delighted that this draft includes general-purpose AI systems (GPAIS), in line with our 2021 position paper and more recent recommendations.

Why this matters: GPAIS can perform many distinct tasks, including ones they were not intentionally trained for. Left unchecked, these models (think GPT-4 or Stable Diffusion) can be used for harmful purposes, including disinformation, non-consensual pornography, privacy violations, and more.

What’s next? This version of the AI Act will first be voted on by the full European Parliament in June, followed by three-way negotiations between lawmakers, EU member states, and the Commission in July.

There’s still some way to go before we know the final shape of this legislation, but we’ll continue to engage with the process! Meanwhile, check out our policy work in Europe and follow our dedicated AI Act website and newsletter to stay up-to-date with the latest developments.

Are We Finally Looking Up?

Last month, FLI President Max Tegmark wrote that the current discourse on AI safety reminded him of the movie ‘Don’t Look Up’ – a satirical film about how easily our societies can dismiss existential risks.

We’re seeing signs that this is now changing. The general public is certainly concerned: two recent surveys, by YouGov America and Reuters/Ipsos, both found that a majority of Americans are concerned about the risks powerful AI systems pose to human societies.

Experts are adding their voice to this issue as well: Geoffrey Hinton and Yoshua Bengio – two AI scientists who won the Turing Award for their pioneering work on deep learning – have both sounded the alarm over the past few weeks.

And on May 30, the Center for AI Safety published a statement, signed by the world’s leading AI scientists, business leaders, and politicians, which declares that mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.

We’re thrilled to see this issue finally receiving the attention it deserves, and offer a set of next steps to take in light of the statement.

Governance and Policy Updates

AI policy:

▶ The US Senate Committee on the Judiciary conducted a hearing about AI oversight rules, with OpenAI’s Sam Altman, IBM’s Christina Montgomery and NYU’s Gary Marcus testifying as witnesses.

▶ The leaders of the G7 countries called for ‘guardrails’ on the development of AI systems.

▶ The second session of the 2023 Group of Governmental Experts (GGE) on Lethal Autonomous Weapons Systems concluded in Geneva.

Nuclear security:

▶ US lawmakers want to prevent the government from delegating nuclear launch decisions to AI systems.

Climate change:

▶ A new report from the World Meteorological Organization (WMO) reveals that global temperatures are likely to breach the 1.5°C limit, at least temporarily, within the next five years.

Updates from FLI

▶ FLI President Max Tegmark recently spoke to Stiftung Neue Verantwortung about our open letter calling for a pause on giant AI experiments and how Europe should deal with potentially powerful and risky AI models.

▶ FLI’s Anna Hehir was at the UN in Geneva this week for the Convention on Certain Conventional Weapons (CCW) to shed light on the proliferation and escalation risks of autonomous weapons. Her message: These weapons are unpredictable, unreliable, and unexplainable. They are a grave threat to global security.

▶ FLI’s Director of US Policy Landon Klein was quoted by Reuters in a report on its most recent poll, which found that 61% of Americans think of AI as an existential risk.

▶ Our podcast host Gus Docker interviewed venture capitalist Maryanna Saenko about the future of innovation and Nathan Labenz, host of the Cognitive Revolution podcast, about red teaming for OpenAI.

New Research: Best Practices for AGI Safety

Best practices: The Centre for the Governance of AI (GovAI) published a survey of 51 experts about their thoughts on best practices, standards, and regulations that AGI labs should follow.

Why this matters: The stated goal of many labs is to build AGI, yet the science and politics of this goal are hotly disputed. This survey by GovAI sheds light on what experts in the field consider necessary to ensure that more powerful AI systems are built with safety as the first priority.

What we’re reading

▶ Rogue AI: Turing Award winner and deep learning pioneer Yoshua Bengio presents ‘a set of definitions, hypotheses and resulting claims about AI systems which could harm humanity’ and then discusses ‘the possible conditions under which such catastrophes could arise.’

▶ AI Safety Research: The White House’s updated National AI Research and Development plan acknowledges the existential risk from AI. On pg. 17, the report notes that ‘Long-term risks remain, including the existential risk associated with the development of artificial general intelligence through self-modifying AI or other means.’

▶ Near Misses: In a special edition focusing on close calls humanity has had with catastrophe, the Bulletin of the Atomic Scientists interviewed Susan Solomon, to whom we presented the Future of Life Award in 2021, about her role in helping to heal the ozone layer.

Dive deeper: Watch our video on how a dedicated group of scientists, bureaucrats, and diplomats saved the ozone layer here.

Hindsight is 20/20

On 23 May 1967, a powerful solar storm led the US Air Force to believe that the Soviets had jammed American early warning surveillance radars. Jamming surveillance radars was then considered an act of war, and the US began preparing nuclear-weapon-equipped aircraft for launch.

Fortunately, scientists at the North American Aerospace Defense Command (NORAD) and elsewhere figured out that the flare, not the Soviets, had disrupted the radars.

According to an article in National Geographic, the practice of monitoring solar flares had only picked up a few years before the 1967 incident. What if no one had been monitoring solar flares, and the US had believed that the Soviets were preparing to strike?

There have been several close calls both before and since this incident. How often will we roll the dice? Check out our timeline of close calls to learn more about such incidents.

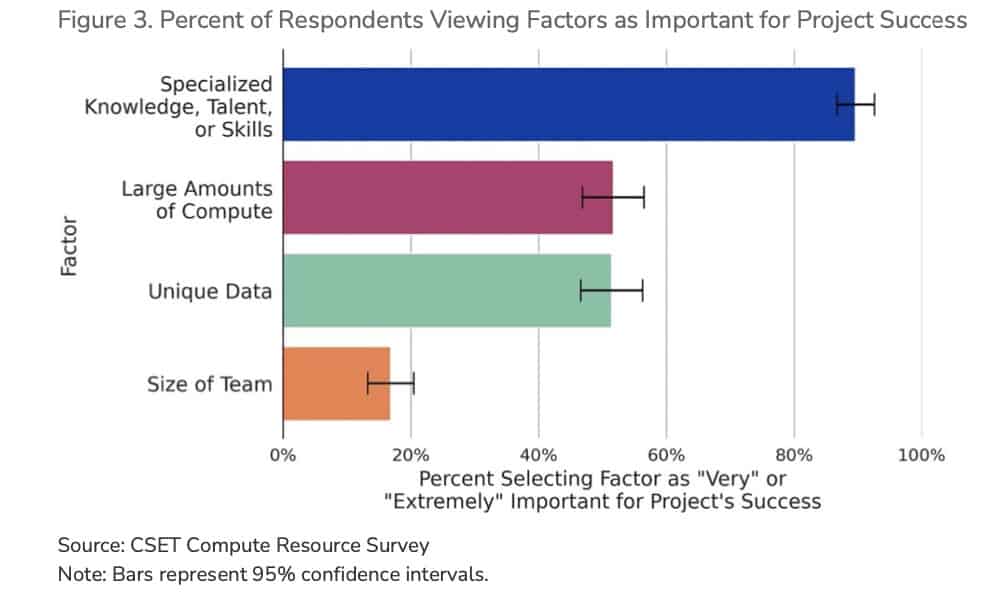

Chart of the Month

Data, algorithms, and compute drive progress in AI. But a survey of 400 AI researchers and experts by the Center for Security and Emerging Technology found that the most valued resource was still human talent.


The view that “mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war” is now mainstream, with that statement being endorsed by a who’s who of AI experts and thought leaders from industry, academia, and beyond.

Although FLI did not develop this statement, we strongly support it, and believe the progress made in regulating nuclear technology and synthetic biology is instructive for mitigating AI risk. FLI therefore calls for immediate action on the following recommendations.

Recommendations:

- Akin to the Nuclear Non-Proliferation Treaty (NPT) and the Biological Weapons Convention (BWC), develop and institute international agreements to limit particularly high-risk AI proliferation and mitigate the risks of advanced AI, including Track 1 diplomatic engagement between the nations leading AI development, and significant contributions from non-proliferating nations that unduly bear the risks of technology developed elsewhere.

- Develop intergovernmental organizations, akin to the International Atomic Energy Agency (IAEA), to promote peaceful uses of AI while mitigating risk and ensuring guardrails are enforced.

- At the national level, establish rigorous auditing and licensing regimes, applicable to the most powerful AI systems, that place the burden of proving suitability for deployment on the developers of the system. Specifically:

  - Require pre-training auditing and documentation of a developer’s sociotechnical safety and security protocols prior to conducting large training runs, akin to the biocontainment precautions established for research and development that could pose a risk to biosafety.

  - Similar to the Food and Drug Administration’s (FDA) approval process for the introduction of new pharmaceuticals to the market, require the developer of an AI system above a specified capability threshold to obtain prior approval for the deployment of that system by providing evidence sufficient to demonstrate that the system does not present an undue risk to the wellbeing of individuals, communities, or society, and that the expected benefits of deployment outweigh risks and harmful side effects.

  - After approval and deployment, require continued monitoring of potential safety, security, and ethical risks to identify and correct emerging and unforeseen risks throughout the lifetime of the AI system, similar to pharmacovigilance requirements imposed by the FDA.

  - Prohibit the open-source publication of the most powerful AI systems unless particularly rigorous safety and ethics requirements are met, akin to constraints on the publication of “dual-use research of concern” in biological sciences and nuclear domains.

- Pause the development of extremely powerful AI systems that significantly exceed the current state-of-the-art for large, general-purpose AI systems.

The success of these actions is neither impossible nor unprecedented: the last decades have seen successful projects at the national and international levels to avert major risks presented by nuclear technology and synthetic biology, all without stifling the innovative spirit and progress of academia and industry. International cooperation has led to, among other things, adoption of the NPT and establishment of the IAEA, which have curbed the development and proliferation of dangerous nuclear weapons and encouraged more equitable distribution of peaceful nuclear technology. Both of these achievements came at the height of the Cold War, when the United States, the USSR, and many others prudently recognized that geopolitical competition should not be prioritized over humanity’s continued existence.

Only five years after the NPT went into effect, the BWC came into force, similarly establishing strong international norms against the development and use of biological weapons, encouraging peaceful innovation in bioengineering, and ensuring international cooperation in responding to dangers resulting from violation of those norms. Domestically, the United States adopted federal regulations requiring extreme caution in the conduct of research and when storing or transporting materials that pose considerable risk to biosafety. The Centers for Disease Control and Prevention (CDC) also published detailed guidance establishing biocontainment precautions commensurate with different levels of biosafety risk. These precautions are monitored and enforced at a range of levels, including through internal institutional review processes and supplementary state and local laws. Analogous regulations have been adopted by nations around the world.

Not since the dawn of the nuclear age has a new technology so profoundly elevated the risk of global catastrophe. FLI’s own letter called on “all AI labs to immediately pause for at least six months the training of AI systems more powerful than GPT-4.” It also stated that “If such a pause cannot be enacted quickly, governments should step in and institute a moratorium.”

Now, two months later – despite discussions at the White House, Senate hearings, widespread calls for regulation, public opinion strongly in favor of a pause, and an explicit agreement by the leaders of most advanced AI efforts that AI can pose an existential risk – there has been no hint of a pause, or even a slowdown. If anything, the breakneck pace of these efforts has accelerated and competition has intensified.

The governments of the world must recognize the gravity of this moment, and treat advanced AI with the care and caution it deserves. AI, if properly controlled, can usher in a very long age of abundance and human flourishing. It would be foolhardy to jeopardize this promising future by charging recklessly ahead without allowing the time necessary to keep AI safe and beneficial.