US Senate Hearing ‘Oversight of AI: Principles for Regulation’: Statement from the Future of Life Institute

“We applaud the Committee for seeking the counsel of thoughtful, leading experts. Advanced AI systems have the potential to exacerbate current harms such as discrimination and disinformation, and present catastrophic and even existential risks going forward. These could emerge due to misuse, unintended consequences, or misalignment with our ethics and values. We must regulate to help mitigate such threats and steer these technologies to benefit humanity.

“As Stuart and Yoshua have both said in the past, the capabilities of AI systems have outpaced even the most aggressive estimates by most experts. We are grossly unprepared, and must not wait to act. We implore Congress to immediately regulate these systems before they cause irreparable damage.

“Effective oversight would include:

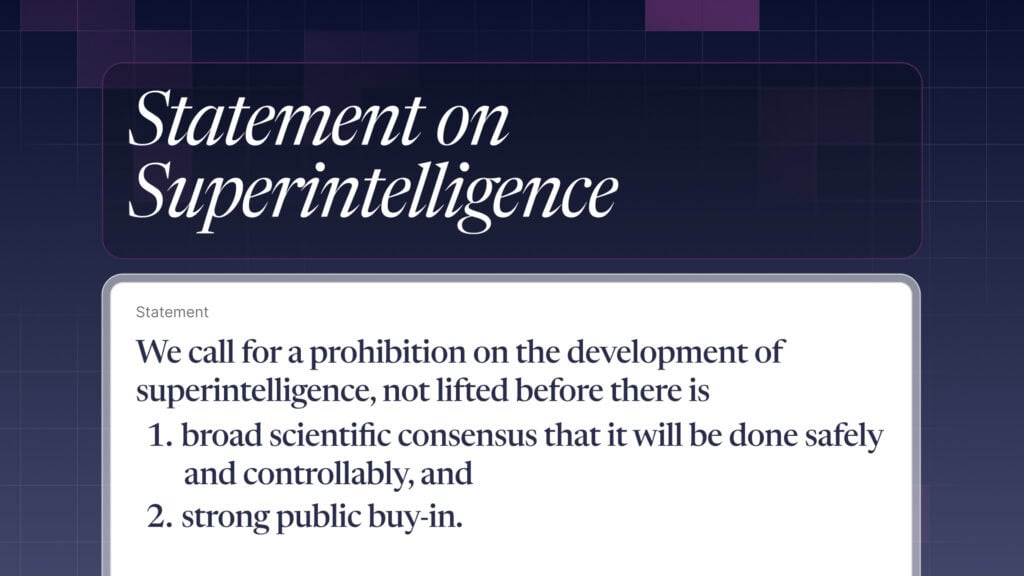

- The legal and technical mechanisms for the federal government to implement an immediate pause on development of AI more powerful than GPT-4

- Requiring registration for large groups of computational resources, which will allow regulators to monitor the development of powerful AI systems

- Establishing a rigorous process for auditing risks and biases of these systems

- Requiring approval and licenses for the deployment of powerful AI systems, which would be contingent upon developers proving their systems are safe, secure, and ethical

- Clear red lines about what risks are intolerable under any circumstances

“Funding for technical AI safety research is also crucial. This will allow us to ensure the safety of our current AI systems, and increase our capacity to control, secure, and align any future systems.

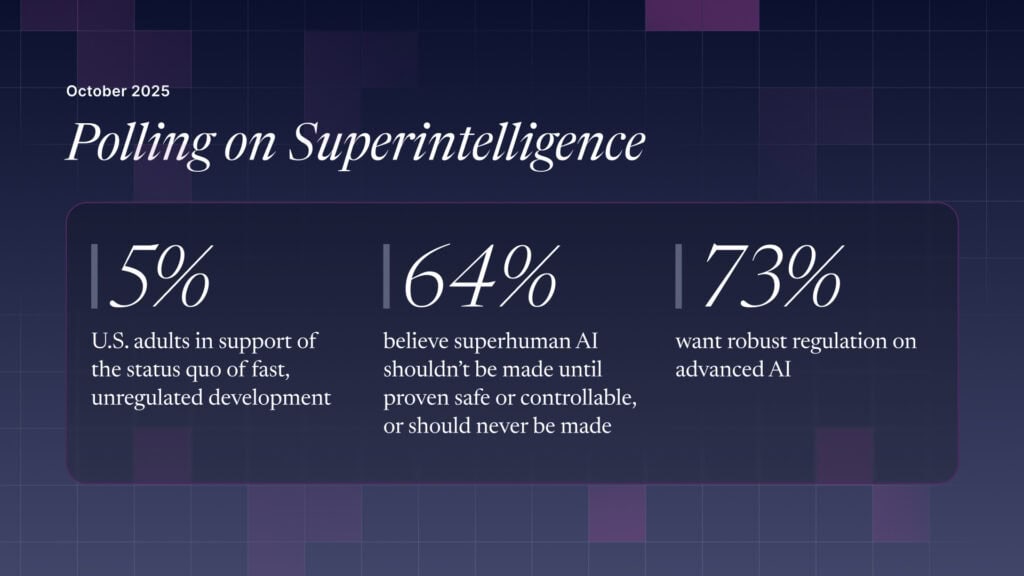

“The world’s leading experts agree that we should pause development of more powerful AI systems to ensure AI is safe and beneficial to humanity, as demonstrated in the March letter coordinated by the Future of Life Institute. The federal government should have the capability to implement such a pause. The public also agrees that we need to put regulations in place: nearly three-quarters of Americans believe that AI should be either somewhat or heavily regulated by the government, and the public favors a pause by a 5:1 margin. These regulations must be urgently and thoroughly implemented – before it is too late.”

Dr Anthony Aguirre, Executive Director, Future of Life Institute

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014.
