Highlights From NeurIPS 2018


The Top Takeaway from Google’s Attempt to Remove Racial Biases From AI

By Jolene Creighton

Algorithms don’t just decide what posts you see in your Facebook newsfeed. They make millions of life-altering decisions every day. They help decide who moves to the next stage of a job interview, who can take out a loan, and even who’s granted parole.

When we consider the well-known biases in these algorithms, the role they play in our decision-making processes becomes somewhat concerning.

Ultimately, bias is a problem that stems from the unrepresentative datasets that our systems are trained on. For example, when it comes to images, most of the training data is Western-centric: it depicts Caucasian individuals taking part in traditionally Western activities. Consequently, as Google research previously revealed, if we give an AI system an image of a Caucasian bride in a Western dress, it correctly labels the image as “wedding,” “bride,” and “women.” If, however, we present the same AI system with an image of a bride of Asian descent, it produces results like “clothing,” “event,” and “performance art.”

Of course, this problem is not exclusively a Western one. In 2011, a study found that AI systems developed in East Asia had more difficulty distinguishing between Caucasian faces than between Asian faces.

That’s why, in September 2018, Google partnered with the NeurIPS conference to launch the Inclusive Images Competition, an event created to encourage the development of less biased AI image classification models.

For the competition, individuals were asked to use Open Images, an image dataset collected from North America and Europe, to train a system that could then be evaluated on images collected from a different geographic region.
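The evaluation setup described above, training on images from some regions and scoring on images from a region the model never saw, can be sketched as a region-held-out split. The Python below is a minimal illustration under stated assumptions: the record layout, the region tags, and the `split_by_region` and `per_region_recall` helpers are hypothetical, and per-region label recall stands in for whatever metric the competition actually used.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Set, Tuple

# Hypothetical record layout: (image_id, region_tag, ground-truth labels)
Record = Tuple[str, str, Set[str]]


def split_by_region(records: List[Record], train_regions: Set[str]) -> Tuple[List[Record], List[Record]]:
    """Keep the training regions for training; hold out every other region for testing."""
    train = [r for r in records if r[1] in train_regions]
    test = [r for r in records if r[1] not in train_regions]
    return train, test


def per_region_recall(test: List[Record], predict: Callable[[str], Set[str]]) -> Dict[str, float]:
    """Fraction of ground-truth labels the model recovers, reported separately per region."""
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for image_id, region, labels in test:
        predicted = predict(image_id)
        hits[region] += len(labels & predicted)
        totals[region] += len(labels)
    # Skip regions whose images carry no labels, to avoid dividing by zero
    return {region: hits[region] / totals[region] for region in totals if totals[region]}


# Toy data: train on North America and Europe, evaluate on the held-out regions
records = [
    ("img1", "north_america", {"bride", "wedding"}),
    ("img2", "europe", {"bride"}),
    ("img3", "south_asia", {"bride", "wedding"}),
    ("img4", "africa", {"wedding"}),
]
train, test = split_by_region(records, {"north_america", "europe"})
# A stand-in model that only ever predicts "wedding"
scores = per_region_recall(test, lambda image_id: {"wedding"})
print(scores)  # {'south_asia': 0.5, 'africa': 1.0}
```

Reporting the score per region, rather than as one pooled number, is what makes a gap like the bride example visible.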

At this week’s NeurIPS conference, Pallavi Baljekar, a Google Brain researcher, spoke about the results of the project. Notably, the competition was only marginally successful. Although the leading models maintained relatively high accuracy in the first stages of the competition, four of the five top models failed to predict the “bride” label when applied to the original two bride images.

However, that’s not to say that progress wasn’t made. Baljekar noted that the competition proved that, even with a small and diverse set of data, “we can improve performance on unseen target distributions.”

And in an interview, Pavel Ostyakov, a Deep Learning Engineer at Samsung AI Center and the researcher who took first place in the competition, added that demanding an entirely unbiased AI may be asking a bit too much. Our AI systems need to be able to “stereotype” to some degree in order to make their classifications. “The problem was not solved yet, but I believe that it is impossible for neural networks to make unbiased predictions,” he said. Other AI researchers have previously echoed the sentiment that some bias must be retained.

Consequently, it seems that making unbiased AI systems will be a process of continuous improvement and tweaking. Yet, even though we can’t make entirely unbiased AI, we can do a lot more to make it less biased.

With this in mind, Google today announced Open Images Extended, an extension of Google’s Open Images intended to better represent the global diversity we find on our planet. The first set to be added is seeded with over 470,000 images.

On this very long road we’re traveling, it’s a step in the right direction.

The Reproducibility Problem: AI Agents Should Be Trained in More Realistic Environments

By Jolene Creighton

Our world is a complex and vibrant place. It’s also remarkably dynamic, existing in a state of near constant change. As a result, when we’re faced with a decision, there are thousands of variables that must be considered.

According to Joelle Pineau, an Associate Professor at McGill University and lead of Facebook’s Artificial Intelligence Research lab in Montreal, this poses a bit of a problem when it comes to our AI agents.

During her keynote speech at the 2018 NeurIPS conference, Pineau stated that many AI researchers aren’t training their machine learning systems in proper environments. Instead of using dynamic worlds that mimic what we see in real life, much of the work that’s currently being done takes place in simulated worlds that are static and pristine, lacking the complexity of realistic environments.

According to Pineau, although these computer-constructed worlds help make research more reproducible, they also make the results less rigorous and meaningful. “The real world has incredible complexity, and when we go to these simulators, that complexity is completely lost,” she said.

Pineau continued by noting that, if we hope to one day create intelligent machines that are able to work and react like humans — artificial general intelligences (AGIs) — we must go beyond the static and limited worlds that are created by computers and begin tackling real world scenarios. “We have to break out of these simulators…on the roadmap to AGI, this is only the beginning,” she said.

Pineau also argued that we will never achieve true AGI unless we begin testing our systems on more diverse data and forcing our intelligent agents to tackle more complex problems. “The world is your test set,” she said, concluding, “I’m here to encourage you to explore the full spectrum of opportunities…this means using separate tasks for training and testing.”

Teaching a Machine to Reason

Pineau’s primary critique focused on an area of machine learning known as reinforcement learning (RL). RL systems allow intelligent agents to improve their decision-making capabilities through trial and error. Over time, these agents learn the rules that govern good and bad choices by interacting with their environment and receiving numerical reward signals based on the actions they take.

RL systems are trained to maximize the numerical reward signals that they receive, so their decisions improve as they try more things and discover which actions yield the most reward. Unfortunately, most simulated worlds have a very limited number of variables, so RL agents have very few things to interact with. As a result, although an agent may learn what constitutes good decision-making in a simulated environment, it quickly becomes lost amid all the new variables once it is deployed in a realistic environment.
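The trial-and-error loop described above can be illustrated with tabular Q-learning, a standard RL algorithm, on a deliberately tiny, hypothetical environment: a five-cell corridor where the only reward comes from reaching the last cell. This is a minimal sketch for intuition, not Pineau’s setup; the environment, the `step` function, and the hyperparameter values are assumptions chosen for illustration.

```python
import random
from typing import List, Tuple

N_STATES = 5         # hypothetical corridor: cells 0..4, cell 4 is the goal
ACTIONS = (-1, +1)   # index 0 steps left, index 1 steps right


def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Toy environment: move along the corridor; reward 1.0 only on reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def q_learning(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.1, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    # q[s][i] estimates the discounted reward of taking ACTIONS[i] in state s
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Trial and error: mostly exploit the best-known action, sometimes explore
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Nudge the estimate toward the reward plus the discounted best future value
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q: List[List[float]]) -> List[int]:
    """Best-known action in each non-goal state."""
    return [ACTIONS[0] if row[0] > row[1] else ACTIONS[1] for row in q[:-1]]


q = q_learning()
print(greedy_policy(q))  # [1, 1, 1, 1]: step right from every non-goal cell
```

Because this corridor has only a handful of states, the agent can try every action many times. The concern Pineau raised is that a policy learned in so small a world says little about how the agent will behave once the environment contains many more variables.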

According to Pineau, overcoming this issue means creating more dynamic environments for AI systems to train on.

To showcase one way of accomplishing this, Pineau turned to Breakout, a game launched by Atari in 1976. The game’s environment is simplistic and static, consisting of a background that is entirely black. In order to inject more complexity into this simulated environment, Pineau and her team inserted videos, which are an endless source of natural noise, into the background.
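One simple way to inject background noise of this kind is to treat every pure-black pixel of a game frame as background and replace it with the corresponding pixel of a video frame. The sketch below assumes grayscale frames stored as nested lists of equal size and a background value of exactly 0; the `composite` and `noisy_observations` helpers are hypothetical and are not the code Pineau’s team used.

```python
from typing import Iterable, Iterator, List

Frame = List[List[int]]  # grayscale frame: rows of 0-255 pixel values


def composite(game_frame: Frame, video_frame: Frame, background: int = 0) -> Frame:
    """Replace every background-valued pixel of the game frame with the video pixel."""
    return [
        [v if g == background else g for g, v in zip(game_row, video_row)]
        for game_row, video_row in zip(game_frame, video_frame)
    ]


def noisy_observations(game_frames: Iterable[Frame], video_frames: List[Frame]) -> Iterator[Frame]:
    """Pair each game frame with the next video frame, looping the video when it runs out."""
    for t, frame in enumerate(game_frames):
        yield composite(frame, video_frames[t % len(video_frames)])


# Toy 2x3 frames: 0 is the black background, other values stand for paddle, ball, or bricks
game = [[0, 0, 200], [0, 144, 0]]
video = [[10, 20, 30], [40, 50, 60]]
print(composite(game, video))  # [[10, 20, 200], [40, 144, 60]]
```

A fuller implementation would also need to handle game objects that happen to be black, which this pixel-value mask would wrongly treat as background.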

Pineau argued that, by adding these videos into the equation, the team was able to create an environment that includes some of the complexity and variability of the real world. And by ultimately training reinforcement learning systems to operate in such multifaceted environments, researchers obtain more reliable findings and better prepare RL systems to make decisions in the real world.

To help researchers better understand exactly how reliable and reproducible their results currently are (or aren’t), Pineau pointed to the 2019 ICLR Reproducibility Challenge during her closing remarks.

The goal of this challenge is to have members of the research community try to reproduce the empirical results submitted to the International Conference on Learning Representations. Then, once all of the attempts have been made, the results are sent back to the original authors. Pineau noted that, to date, the challenge has had a dramatic impact on the findings that are reported. During the 2018 challenge, 80% of authors that received reproducibility reports stated that they changed their papers as a result of the feedback.


Montreal Declaration on Responsible AI May Be Next Step Toward the Development of AI Policy

By Ariel Conn

Over the last few years, as concerns surrounding artificial intelligence have grown, an increasing number of organizations, companies, and researchers have come together to create and support principles that could help guide the development of beneficial AI. With FLI’s Asilomar Principles, IEEE’s treatise on the Ethics of Autonomous and Intelligent Systems, the Partnership on AI’s Tenets, and many more, concerned AI researchers and developers have laid out a framework of ethics that almost everyone can agree upon. However, these previous documents weren’t specifically written to inform and direct AI policy and regulations.

On December 4, at the NeurIPS conference in Montreal, Canadian researchers took the next step, releasing the Montreal Declaration on Responsible AI. The Declaration builds on the current ethical framework of AI, but the architects of the document also add, “Although these are ethical principles, they can be translated into political language and interpreted in legal fashion.”

Yoshua Bengio, a prominent Canadian AI researcher and founder of one of the world’s premier machine learning labs, described the Declaration, saying, “Its goal is to establish a certain number of principles that would form the basis of the adoption of new rules and laws to ensure AI is developed in a socially responsible manner.”

“We want this Declaration to spark a broad dialogue between the public, the experts and government decision-makers,” said Guy Breton, rector of the Université de Montréal (UdeM). “The theme of artificial intelligence will progressively affect all sectors of society and we must have guidelines, starting now, that will frame its development so that it adheres to our human values and brings true social progress.”

The Declaration lays out ten principles: Well-Being, Respect for Autonomy, Protection of Privacy and Intimacy, Solidarity, Democratic Participation, Equity, Diversity, Prudence, Responsibility, and Sustainable Development.

The primary themes running through the Declaration revolve around ensuring that AI doesn’t disrupt basic human and civil rights and that it enhances equality, privacy, diversity, and human relationships. The Declaration also suggests that humans need to be held responsible for the actions of artificial intelligence systems (AIS), and it specifically states that AIS cannot be allowed to make the decision to take a human life. It also includes a section on ensuring that AIS are designed with the climate and environment in mind, so that resources are sustainably sourced and energy use is minimized.

The Declaration is the result of deliberation that “occurred through consultations held over three months, in 15 different public spaces, and sparked exchanges between over 500 citizens, experts and stakeholders from every horizon.” That it was formulated in Canada is especially relevant given Montreal’s global prominence in AI research.

In his article for The Conversation, Bengio explains, “Because Canada is a scientific leader in AI, it was one of the first countries to see all its potential and to develop a national plan. It also has the will to play the role of social leader.”

He adds, “Generally speaking, scientists tend to avoid getting too involved in politics. But when there are issues that concern them and that will have a major impact on society, they must assume their responsibility and become part of the debate.”

Making an Impact: What Role Should Scientists Play in Creating AI Policy?

By Jolene Creighton

Artificially intelligent systems are already among us. They fly our planes, drive our cars, and even help doctors make diagnoses and treatment plans. As AI continues to impact daily life and alter society, laws and policies will increasingly have to take it into account. Each day, more and more of the world’s experts call on policymakers to establish clear, international guidelines for the governance of AI.

This week, at the 2018 NeurIPS conference, Edward W. Felten, Professor of Computer Science and Public Affairs at Princeton University, took up the call.

During his opening remarks, Felten noted that AI is poised to radically change everything about the way we live and work, stating that this technology is “extremely powerful and represents a profound change that will happen across many different areas of life.” As such, Felten argued that we must work quickly to amend our laws and update our policies so that we’re ready for the changes this new technology brings.

However, Felten argued that policymakers cannot be left to chart this course alone: members of the AI research community must engage with them.

“Sometimes it seems like our world, the world of the research lab or the developer’s or data scientist’s cubicle, is a million miles from public policy…however, we have not only an opportunity but also a duty to be actively participating in public life,” he said.

Guidelines for Effective Engagement

Felten noted that the first step for researchers is to focus on and understand the political system as a whole. “If you look only at the local picture, it might look irrational. But, in fact, these people are operating inside a system that is big and complicated,” he said. To this end, Felten stated that researchers must become better informed about political processes so that they can participate in policy conversations more effectively.

According to Felten, this means the AI community needs to recognize that policy work is valid and valuable, and this work should be incentivized accordingly. He also called on the AI community to create career paths that encourage researchers to actively engage with policymakers by blending AI research and policy work.

For researchers who are interested in pursuing such work, Felten outlined the steps they should take to start an effective dialogue:

- Combine knowledge with preference: As a researcher, work to frame your expertise in the context of the policymaker’s interests.

- Structure the decision space: Based on the policymaker’s preferences, give a range of options and explain their possible consequences.

- Follow up: Seek feedback on the utility of the guidance that you offered and the way that you presented your ideas.

If done right, Felten said, this protocol allows experts and policymakers to build productive engagement and trust over time.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014. Find out more about our mission or explore our work.

Related content

Other posts about AI, Recent News

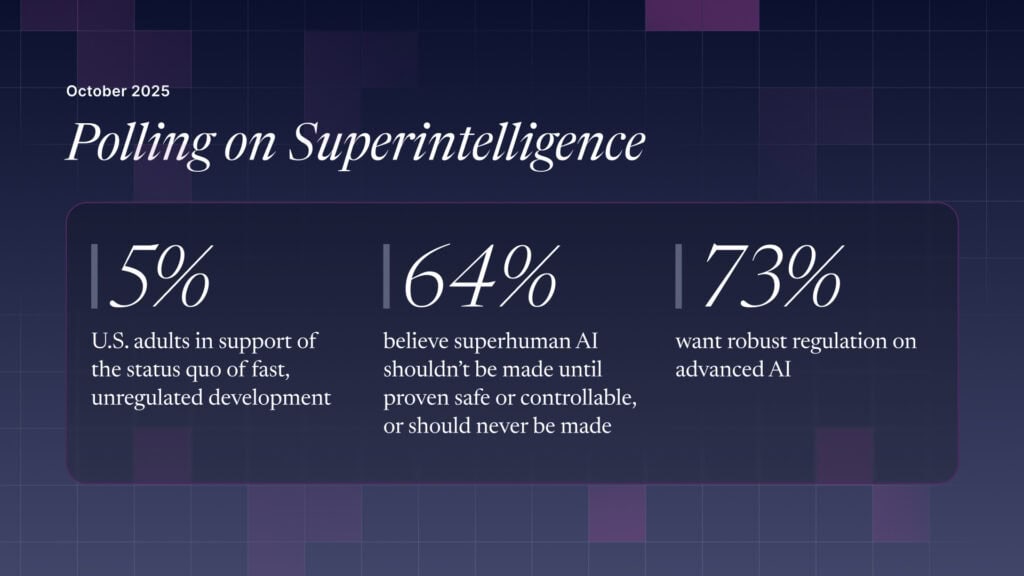

The U.S. Public Wants Regulation (or Prohibition) of Expert‑Level and Superhuman AI