Analysis: Clopen AI – Openness in Different Aspects of AI Development

Clopen AI – which aspects of artificial intelligence research should be open, and which aspects should be closed, to keep AI safe and beneficial to humanity?

There has been a lot of discussion about the appropriate level of openness in AI research in the past year: the OpenAI announcement, the blog post Should AI Be Open?, a response to the latter, and Nick Bostrom’s thorough paper Strategic Implications of Openness in AI Development.

There is disagreement on this question within the AI safety community as well as outside it. Many people are justifiably afraid of concentrating the power to create AGI and determine its values in the hands of one company or organization. Many others are concerned about the information hazards of open-sourcing AGI and the resulting potential for misuse. In this post, I argue that some sort of compromise between openness and secrecy will be necessary, as both complete secrecy and complete openness carry serious risks. The good news is that there isn’t a single axis of openness vs secrecy – we can make separate judgment calls for different aspects of AGI development, and develop a set of guidelines.

Information about AI development can be roughly divided into two categories – technical and strategic. Technical information includes research papers, data, source code (for the algorithm and the objective function), etc. Strategic information includes goals, forecasts and timelines, the composition of ethics boards, etc. Bostrom argues that openness about strategic information is likely beneficial in terms of both short- and long-term impact, while openness about technical information is good in the short term but can be bad in the long term because it intensifies the race between developers. We need to further consider the tradeoffs of releasing different kinds of technical information.

Sharing papers and data is both more essential for the research process and less potentially dangerous than sharing code, since it is hard to reconstruct the code from that information alone. For example, it can be difficult to reproduce the results of a neural network algorithm based on the research paper, given the difficulty of tuning the hyperparameters and differences between computational architectures.

Releasing all the code required to run an AGI into the world, especially before it’s been extensively debugged, tested, and safeguarded against bad actors, would be extremely unsafe. Anyone with enough computational power could run the code, and it would be difficult to shut down the program or prevent it from copying itself all over the Internet.

However, releasing none of the source code is also a bad idea. It would currently be impractical, given the strong incentives for AI researchers to share at least part of the code for recognition and replicability. It would also be suboptimal, since sharing some parts of the code is likely to contribute to safety. For example, it would make sense to open-source the objective function code without the optimization code, which would reveal what is being optimized for but not how. This could make it possible to verify whether the objective is sufficiently representative of society’s values, and the objective is the part of the system that would be the most understandable and important to the public anyway.
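As a rough illustration of what publishing the objective without the optimizer could look like, here is a toy Python sketch. Everything in it is hypothetical and invented for this example: the `WEIGHTS` table, the `objective` function, the outcome features, and the `audit` helper do not come from any real system. The point is only that an outside party can check the published objective against cases they care about without ever seeing the code that optimizes it.

```python
from typing import Callable, Dict, List, Tuple

Outcome = Dict[str, float]

# Hypothetical published component: what the system is optimizing for.
# The feature names and weights are made up for illustration.
WEIGHTS: Dict[str, float] = {"wellbeing": 1.0, "harm": -5.0}


def objective(outcome: Outcome) -> float:
    """Score an outcome; higher means the system prefers it more."""
    return sum(WEIGHTS[name] * outcome.get(name, 0.0) for name in WEIGHTS)


def audit(
    objective_fn: Callable[[Outcome], float],
    cases: List[Tuple[str, Outcome, Outcome]],
) -> List[str]:
    """Return the names of cases where the objective fails to rank the
    outcome the auditor prefers strictly above the one they reject."""
    failures = []
    for name, preferred, rejected in cases:
        if objective_fn(preferred) <= objective_fn(rejected):
            failures.append(name)
    return failures


# The optimization code is deliberately absent: it stays private and only
# needs to accept `objective` as an input.
cases = [
    (
        "avoid harmful gains",
        {"wellbeing": 1.0, "harm": 0.0},  # auditor prefers this outcome
        {"wellbeing": 2.0, "harm": 1.0},  # over this one
    ),
]
print(audit(objective, cases))
```

An empty list from `audit` means the published objective agrees with the auditor on every listed case. That is far from a proof that the objective reflects society’s values, but it shows the kind of public check that becomes possible once the objective is open.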

It is rather difficult to verify to what extent a company or organization is sharing its technical information on AI development, and to enforce either complete openness or secrecy. Still, there is not much downside to specifying guidelines for what is expected to be shared and what isn’t. Developing a joint set of openness guidelines for the short and long term would be a worthwhile endeavor for the leading AI companies today.

(Cross-posted from my blog. Thanks to Jelena Luketina and Janos Kramar for their detailed feedback on this post.)

Note from FLI: Among our objectives is to inspire discussion and a sharing of ideas. As such, we post op-eds that we believe will help spur discussion within our community. Op-eds do not necessarily represent FLI’s opinions or views.

Image source: http://brownsharpie.courtneygibbons.org/wp-content/comics/2007-01-04-clopen-for-business.jpg

Related content

Other posts about AI, Recent News

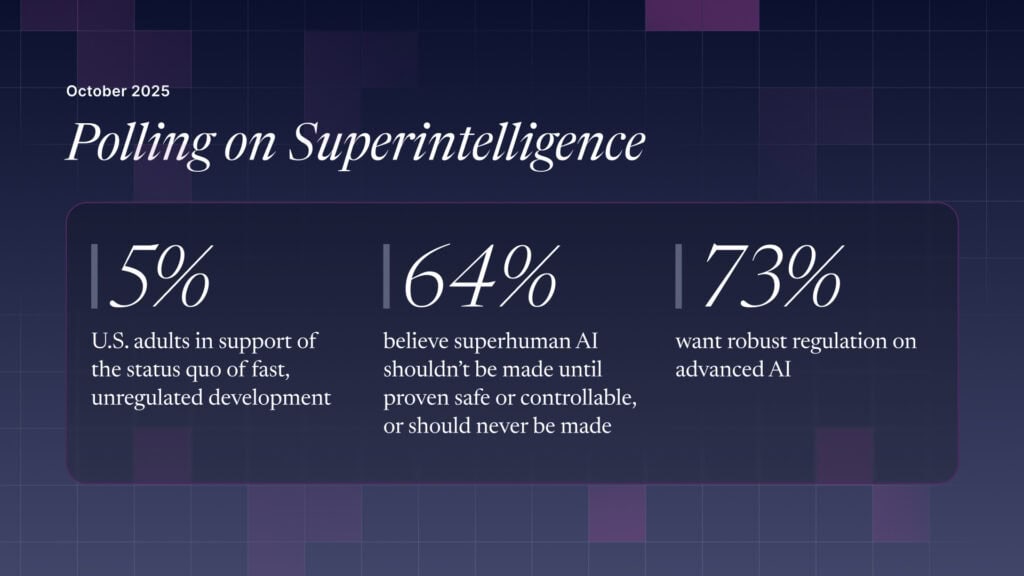

The U.S. Public Wants Regulation (or Prohibition) of Expert‑Level and Superhuman AI