Effective Altruism and Existential Risks: a talk with Lucas Perry

What are the greatest problems of our time? And how can we best address them?

FLI’s Lucas Perry recently spoke at Duke University and Boston College to address these questions. Perry presented two major ideas in these talks – effective altruism and existential risk – and explained how they work together.

As Perry explained to his audiences, effective altruism is a movement in philanthropy that seeks to use evidence, analysis, and reason to take actions that will do the greatest good in the world. Since each person has limited resources, effective altruists argue it is essential to focus resources where they can do the most good. As such, effective altruists tend to focus on neglected, large-scale problems where their efforts can yield the greatest positive change.

Effective altruists work on issues such as poverty alleviation, animal suffering, and global health through a range of organizations. Nonprofits such as 80,000 Hours help people find high-impact careers, and charity evaluators such as GiveWell investigate and rank the most effective ways to donate money. These groups, and many others, use evidence to address neglected problems that cause, or threaten to cause, immense suffering.

Some of these neglected problems happen to be existential risks – they represent threats that could permanently and drastically harm intelligent life on Earth. Since existential risks, by definition, put our very existence at risk, and have the potential to create immense suffering, effective altruists consider these risks extremely important to address.

Perry explained to his audiences that the greatest existential risks arise from humans' ability to manipulate the world through technology. These risks include artificial intelligence, nuclear war, and synthetic biology. But Perry also cautioned that some of the greatest existential threats might still be unknown. For this reason, he and other effective altruists believe the topic deserves more attention.

Perry learned about these issues while he was in college, and they helped redirect his own career goals; now he wants to share this opportunity with other students. He explains, "In order for effective altruism to spread and the study of existential risks to be taken seriously, it's critical that the next generation of thought leaders are in touch with their importance."

College students often want to do more to address humanity's greatest threats, but many are unsure where to start. Perry hopes that learning about effective altruism and existential risks might give them direction. Realizing how urgent and how underfunded existential risks are (academics spend more time on the dung fly than on existential risks) can motivate students to use their education where it can make a difference.

Perry's talks are a small effort to open the field to students who want to help the world and who also crave a sense of purpose. He offered concrete strategies to show students where they can be most effective, whether they choose to donate money, work directly on the issues, do research, or advocate.

By understanding the intersection between effective altruism and existential risks, these students can do their part to ensure that humanity continues to prosper in the face of our greatest threats yet.

As Perry explains, “When we consider what existential risks represent for the future of intelligent life, it becomes clear that working to mitigate them is an essential part of being an effective altruist.”

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014.

Related content

Other posts about Existential Risk, Recent News

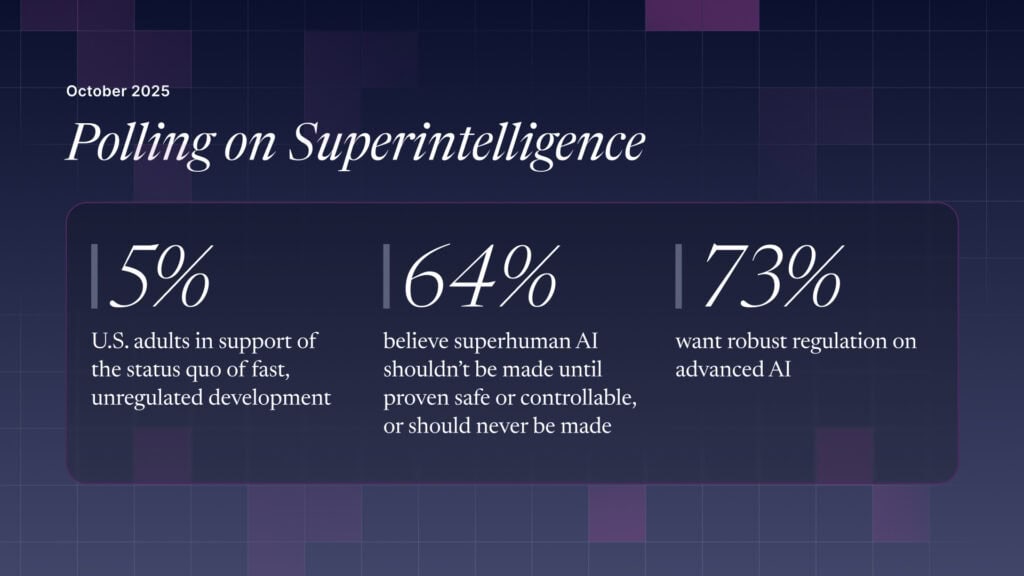

The U.S. Public Wants Regulation (or Prohibition) of Expert‑Level and Superhuman AI

Are we close to an intelligence explosion?