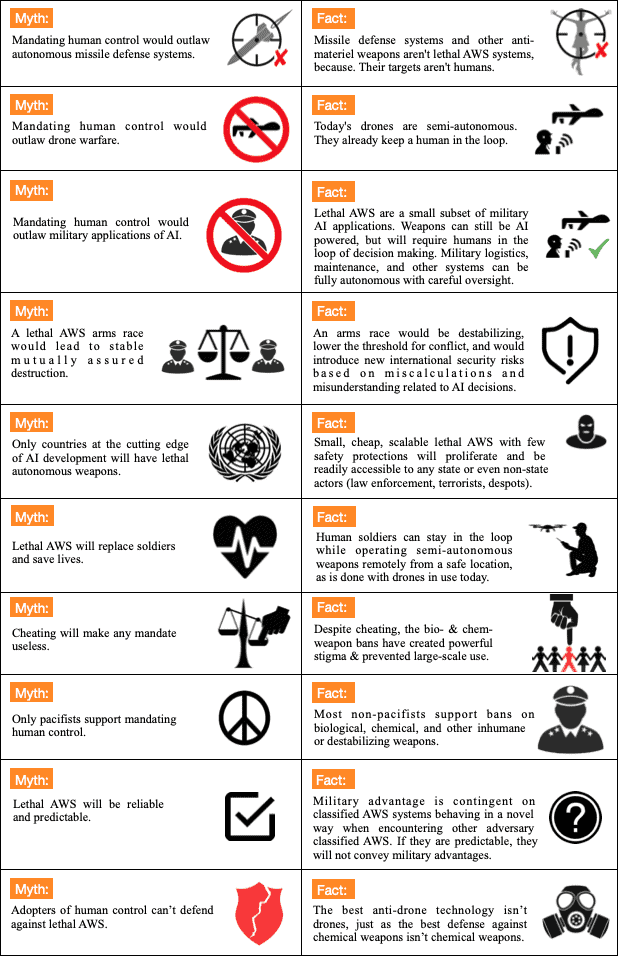

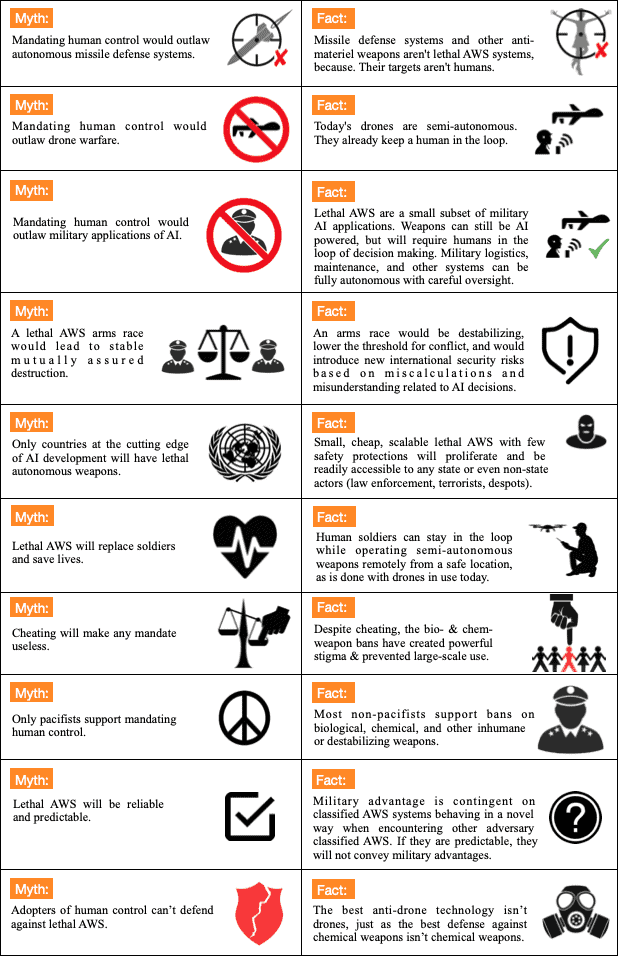

Top Myths and Facts on Human Control of Autonomous Weapons


There are a number of myths surrounding the issue of human control over autonomous weapons.

Many people who wish to ensure the safe emergence of autonomous weapons believe that it is necessary to enforce a ‘mandate of human control’ over autonomous weapons in order to mitigate some of the risks associated with this new technology.

This page debunks some of the most common myths about the mandate of human control.

1 – Defense systems

Myth: Mandating human control would outlaw autonomous missile defense systems.

Fact: Missile defense systems and other anti-materiel weapons aren’t lethal autonomous weapons systems (lethal AWS). Their targets are not humans.

Lethal AWS are a narrow subset of autonomous weapons systems in which the target of the weapon system is a human. Autonomous weapons systems designed to defend against incoming missiles or other anti-materiel targets would not be subject to the mandate.

2 – Drone warfare

Myth: Mandating human control would outlaw drone warfare.

Fact: Today’s drones are semi-autonomous. They already keep a human in the loop.

Although current drone warfare often employs AI for many of its functions, human decision-making is maintained across the life cycle of identifying, selecting, and engaging a target. This human-machine interaction is what characterizes the semi-autonomous systems in use today. Mandating human control would not affect current military practice in drone warfare, which already keeps a human in the loop.

3 – Military applications

Myth: Mandating human control would outlaw military applications of AI.

Fact: Lethal AWS are a very small subset of military AI applications. Weapons can still be AI-powered, but would require a human in the decision-making loop.

AI is already widely used in the military and has many benefits, such as improving the precision, accuracy, speed, situational awareness, and detection and tracking functions of weapons. These functions can help commanders make more informed decisions and minimize civilian casualties. The semi-autonomous weapons systems in today’s drones are an example of how AI can be used to enhance human decision-making. Hence, mandating human control over lethal AWS would not limit the use of AI in weapons systems.

4 – Mutually-Assured Destruction

Myth: A lethal AWS arms race would lead to stable mutually assured destruction.

Fact: An arms race would be destabilizing, lower the threshold for conflict, and would introduce new international security risks.

Many see lethal AWS as conferring a decisive strategic advantage, similar to nuclear weapons, and see the inevitable endpoint of an arms race as one of stable mutually assured destruction. However, unlike nuclear weapons, lethal AWS do not require expensive raw materials or extensive expertise, and they will likely be highly scalable, making them a cheap and accessible weapon of mass destruction. Furthermore, lethal AWS have serious weaknesses, both in the reliability of their performance and in their vulnerability to hacking. It is more likely that the endpoint of an arms race would be catastrophically destabilizing.

5 – Exclusiveness

Myth: Only countries at the cutting edge of AI development will have lethal autonomous weapons.

Fact: Small, cheap, scalable lethal AWS would proliferate and be readily accessible to any state or even non-state actors (law enforcement, terrorists, despots).

The ability to scale easily is an inherent property of software. Small, cheap variants of lethal AWS could easily be mass-produced and proliferate not only to any state globally, but also to non-state actors. Hence, lethal AWS could pose a significant national security risk beyond military applications: they could be used by terrorist groups, as weapons of assassination, or by law enforcement and border patrol agencies.

6 – Replace soldiers

Myth: Lethal AWS will replace soldiers and save lives.

Fact: Human soldiers can stay in the loop while operating weapons remotely from a safe location, as is done with drones in use today.

Semi-autonomous systems already enable the removal of humans from the battlefield, saving soldiers’ lives by allowing drones to be operated remotely. Keeping humans in the loop can also help save civilian lives, as robust human-machine decision-making can minimize errors and improve situational awareness, thereby helping to reduce non-combatant casualties.

7 – Cheating

Myth: Cheating will make any mandate useless.

Fact: Despite cheating, the bans on biological and chemical weapons have created a powerful stigma and prevented large-scale use.

The chemical weapons ban has robust verification and certification mechanisms, but other bans, such as the ban on biological weapons, have no such mechanisms. In both cases, there has not yet been a large-scale violation. Arguably, it is not the legal authority of these bans that has prevented large-scale use and cheating, but the powerful stigma they have created. Like lethal AWS, chemical and biological weapons can be produced at scale without exotic or expensive materials, yet this has largely not happened because of the powerful stigma against them.

8 – Pacifists

Myth: Only pacifists support mandating human control.

Fact: Most non-pacifists support bans on biological, chemical, and other inhumane or destabilizing weapons.

Banning the use of inhumane and destabilizing weapons is a fairly uncontroversial position, as few would argue in favor of developing biological or chemical weapons. While many groups in the pacifist community favor mandating human control, many of the most vocal advocates for human control come from the technology, science, and military communities. The FLI Pledge in support of banning lethal autonomous weapons has been signed by over 3,000 individuals, many of whom are leading AI researchers and developers.

9 – Compliant

Myth: Lethal AWS will be reliable, predictable, and always comply with the human commander’s intent.

Fact: Military advantage is contingent on AWS behaving autonomously and unpredictably, especially when encountering other AWS.

Lethal autonomous weapons systems are inherently unpredictable because, by definition, they are designed to have autonomous decision-making authority so they can respond to unforeseen and rapidly evolving environments. This is especially relevant when two adversarial weapon systems meet, since each is designed to be unpredictable to avoid being defeated by the other. Thus, unpredictability is encouraged, as it confers a strategic advantage.

10 – Defenseless

Myth: Adopters of human control can’t defend against lethal AWS.

Fact: The best anti-drone technology isn’t drones, just as the best defense against chemical weapons isn’t chemical weapons.

The defense systems required to counter lethal AWS are likely to be different technologies from lethal AWS. Furthermore, if an AI-based technology is developed to defend against lethal AWS, then, much like missile defense systems, it would not be subject to any requirement for human control, because its target is another weapon rather than a human.

Infographic

You can view all of these myths and facts as an infographic, available to view and download here.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014.
