The Ethics of Value Alignment Workshop

Contents

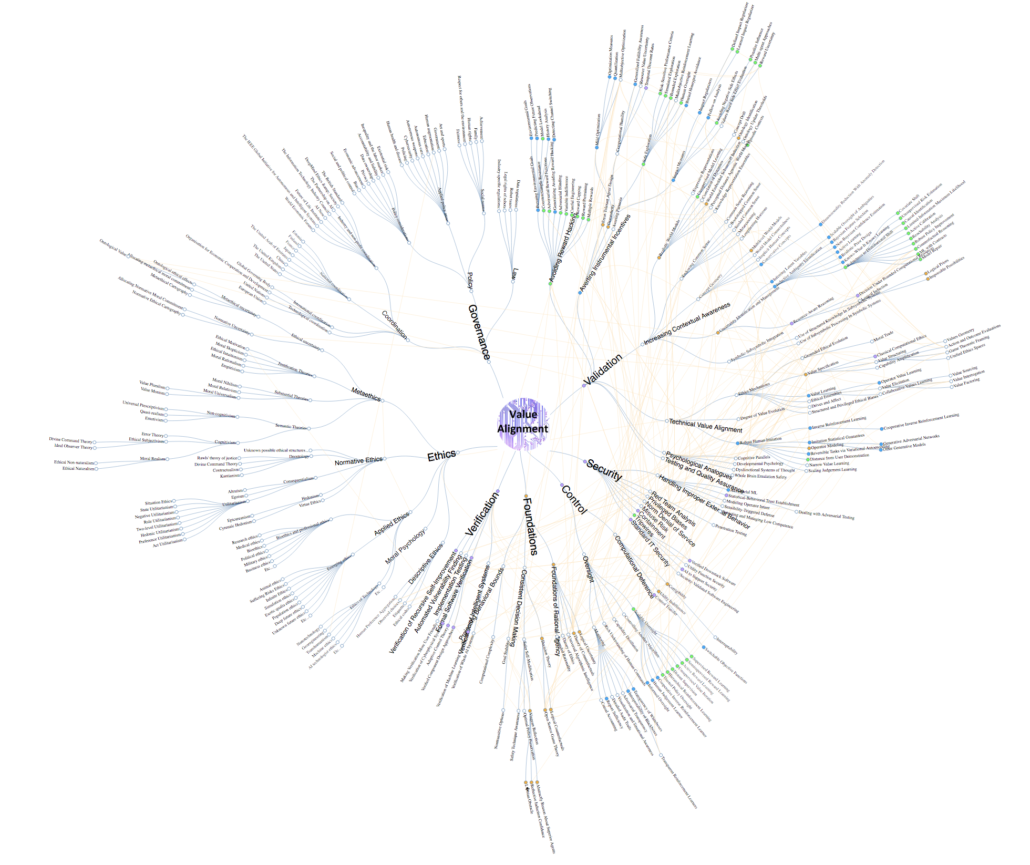

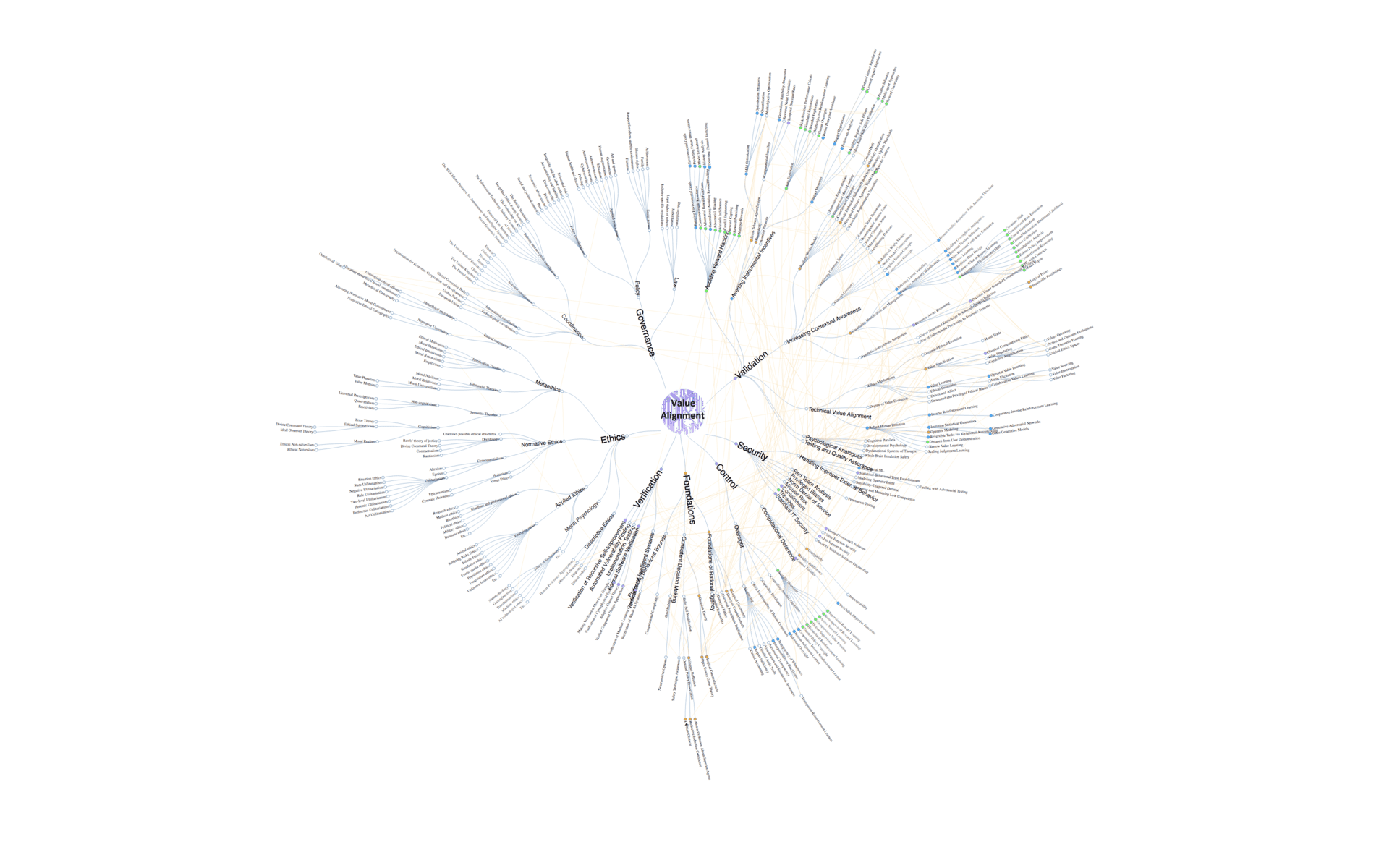

Value alignment has thus far been primarily conceived as an area of AI safety research that focuses on aligning AI’s actions and goals with human values or what is deemed to be “the good”. It is the study of creating complex machine learning processes which inquire about and converge on better and better ethics and values. Much of the current discourse has been focused on explorations in inverse reinforcement learning and other machine learning techniques needed to teach AIs values. Thus, the conversation has so far primarily been one between technical AI researchers. Yet, by its very nature, the problem of value alignment extends into essentially all domains of human life and inquiry. So, what has been largely ignored are very deep and important social, political, and philosophical questions that have crucial implications in technical AI safety research itself and the implementation of value aligned AIs. Even if all of the technical issues surrounding value alignment were solved in the next few years, we would still face deeply challenging questions whose answers will have tremendous consequences for life on Earth: Whose values do we align to? How do we socially and politically determine who is included in preference aggregation and who is not? What does value alignment demand of our own ethical evolution? How are humans to co-evolve with AI systems? Are there such things as moral facts? What ought we to value? How is value alignment to be done given moral uncertainty? How should we proceed pragmatically given imperfect answers to the many of the most difficult social, political, and moral questions surrounding value alignment?

The goal of this workshop is to bring together researchers and thought leaders from various disciplines such as computer science, social sciences, philosophy, law etc. to discuss the ethical considerations and implications of aligning AI to human values. Our intention is to have an interdisciplinary exchange and to bring together people, theories, methods, tools and approaches from multiple disciplines, some of which have not yet been properly engaged in this important and timely conversation. This workshop will also generate a collection of research problems that require an interdisciplinary approach to address.

Long Beach, CA

Saturday, December 9

7:30 PM – Welcome Reception/Dinner

L’Opera – 101 Pine Ave, Long Beach, CA 90802

Sunday, December 10

8:00 AM- 9:00 AM Breakfast

9:00 AM- 9:30 AM

Welcome: Max Tegmark, Craig Calhoun, and John Hepburn

Opening Remarks: Nils Gilman

Introduction to the conceptual landscape: Lucas Perry

Each of the sessions below opens with a handful of (very brief) lightning talks relevant to their topic, followed by moderated group discussion, structured to tackle key topics sequentially.

9:30 AM-12:00 PM (coffee break halfway through)

Session 1: Value challenges and alignment in the short term (Facilitator: Meia Chita-Tegmark)

- What ethical challenges are posed by near-term technology such as autonomous vehicles, autonomous weapons, and big data systems? How should we deal with bias/discrimination, privacy, transparency, fairness, economic impact, and accountability, and how are AI researchers dealing with such issues today?

- How can machines learn value systems from us, especially for adjudicating unanticipated situations? What would be the values ‘we’ –– a term to be defined –– want AI to learn?

- Is it possible to produce clear procedural definitions of the ethical challenges AI is producing?

Lightning talks:

Francesca Rossi: Embedding values in both data-driven and rule-based decision making

Susan and Michael Anderson: An example of implementing ethical behavior in machines

Dylan Hadfield-Menell: The ethics of AI manipulation of humans

Stephanie Dinkins: AI and social equity

Wendell Wallach: Agile ethical/legal framework for international and national governance of AI and robotics

12:00 PM-13:00 PM Lunch

13:00 PM-15:30 PM

Session 2: Value challenges and alignment in the long term. (Facilitator: Max Tegmark)

- If superintelligence is built, then what values should it have and how should they evolve?

- Through what process should this be determined? What societal or political processes and structures are most appropriate for ethically aligning superintelligence?

- Is it possible to construct a rigorous and sophisticated moral epistemology? How?

- How should we think about the co-evolution of AIs and humans if the latter gradually become more capable than us and technology enhances and transforms our own capabilities and goals?

Lightning talks:

Max Tegmark: Framing value alignment in the long term

Nick Bostrom: Reflections on the ethical challenges for superintelligence value alignment

Viktoriya Krakovna: How technical challenges affect the ethical options for value alignment

Andrew Critch: The morality of human survival/extinction

Nate Fast: Moral attitudes toward transhumanism

Joshua Greene: Interpretability: Ensuring that we understand the values of an AGI

15:30 PM-16:00 PM Coffee Break

16:00 PM-18:30 PM

Session 3: Whose values? Which Values? (Facilitator: Tobias Rees)

- What are the ethical responsibilities of AI researchers? To whom do they owe these responsibilities?

- Who should be included in conversations about which values AI should incorporate?

- When there are value conflicts, different risk sensitivities, or serious uncertainties, who should have decision rights?

- Through what social or political processes and structures should the values/the we be determined? What forums –– or formats –– would work best?

Lightning talks:

Thomas Metzinger: Five variants of the value alignment problem

Sam Harris: How the distinction between “facts” and “values” is misleading

Joshua Cohen: Aligning with equality

Patrick Lin: Moral gray spaces

18:30 PM Dinner

The Sky Room – 40 S. Locust Avenue, Long Beach, CA 90802

Bios for all of the attendees can be found here.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014. Find out more about our mission or explore our work.

Related content

Other posts about AI