$2 Million Donated to Keep Artificial General Intelligence Beneficial and Robust

$2 million has been allocated to fund research that anticipates artificial general intelligence (AGI) and how it can be designed beneficially. The money was donated by Elon Musk to cover grants through the Future of Life Institute (FLI). Ten grants have been selected for funding.

Said Tegmark, “I’m optimistic that we can create an inspiring high-tech future with AI as long as we win the race between the growing power of AI and the wisdom with which we manage it. This research is to help develop that wisdom and increase the likelihood that AGI will be the best rather than the worst thing to happen to humanity.”

Today’s artificial intelligence (AI) is still quite narrow. That is, it can only accomplish narrow sets of tasks, such as playing chess or Go, driving a car, performing an Internet search, or translating languages. While the AI systems that master each of these tasks can perform them at superhuman levels, they can’t learn a new, unrelated skill set (e.g. an AI system that can search the Internet can’t learn to play Go with only its search algorithms).

These AI systems lack the “general” ability that humans have to make connections between disparate activities and experiences and to apply knowledge to a variety of fields. However, a significant number of AI researchers agree that AI could achieve a more “general” intelligence in the coming decades. No one knows how AI that’s as smart as or smarter than humans might impact our lives, whether it will prove to be beneficial or harmful, how we can design it safely, or even how to prepare society for advanced AI. And many researchers worry that the transition could occur quickly.

Anthony Aguirre, co-founder of FLI and physics professor at UC Santa Cruz, explains, “The breakthroughs necessary to have machine intelligences as flexible and powerful as our own may take 50 years. But with the major intellectual and financial resources now being directed at the problem it may take much less. If or when there is a breakthrough, what will that look like? Can we prepare? Can we design safety features now, and incorporate them into AI development, to ensure that powerful AI will continue to benefit society? Things may move very quickly and we need research in place to make sure they go well.”

Grant topics include: training multiple AIs to work together and learn from humans about how to coexist, training AI to understand individual human preferences, understanding what “general” actually means, incentivizing research groups to avoid a potentially dangerous AI race, and many more. As the request for proposals stated, “The focus of this RFP is on technical research or other projects enabling development of AI that is beneficial to society and robust in the sense that the benefits have some guarantees: our AI systems must do what we want them to do.”

FLI hopes that this round of grants will help ensure that AI remains beneficial as it becomes increasingly intelligent. The full list of FLI recipients and project titles includes:

Some of the grant recipients offered statements about why they’re excited about their new projects:

“The team here at the Governance of AI Program are excited to pursue this research with the support of FLI. We’ve identified a set of questions that we think are among the most important to tackle for securing robust governance of advanced AI, and strongly believe that with focused research and collaboration with others in this space, we can make productive headway on them.” -Allan Dafoe

“We are excited about this project because it provides a first unique and original opportunity to explicitly study the dynamics of safety-compliant behaviours within the ongoing AI research and development race, and hence potentially leading to model-based advice on how to timely regulate the present wave of developments and provide recommendations to policy makers and involved participants. It also provides an important opportunity to validate our prior results on the importance of commitments and other mechanisms of trust in inducing global pro-social behavior, thereby further promoting AI for the common good.” -The Anh Han

“We are excited about the potentials of this project. Our goal is to learn models of humans’ preferences, which can help us build algorithms for AGIs that can safely and reliably interact and collaborate with people.” -Dorsa Sadigh

This is FLI’s second grant round. The first launched in 2015, and a comprehensive list of papers, articles and information from that grant round can be found here. Both grant rounds are part of the original $10 million that Elon Musk pledged to AI safety research.

FLI co-founder Viktoriya Krakovna also added: “Our previous grant round promoted research on a diverse set of topics in AI safety and supported over 40 papers. The next grant round is more narrowly focused on research in AGI safety and strategy, and I am looking forward to great work in this area from our new grantees.”

Learn more about these projects here.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014.

Related content

Other posts about AI, FLI projects, Recent News

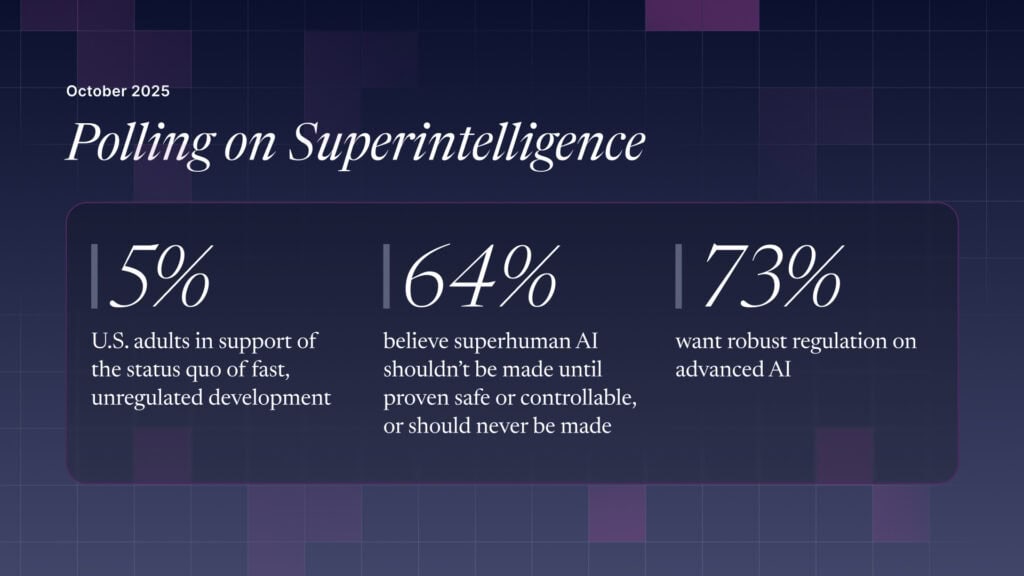

The U.S. Public Wants Regulation (or Prohibition) of Expert‑Level and Superhuman AI