Artificial Intelligence and Income Inequality

Shared Prosperity Principle: The economic prosperity created by AI should be shared broadly, to benefit all of humanity.

Income inequality is a well-recognized problem. The gap between the rich and poor has grown over the last few decades, and it became increasingly pronounced after the 2008 financial crisis. While economists debate the extent to which technology plays a role in global inequality, most agree that tech advances have exacerbated the problem.

In an interview with the MIT Technology Review, economist Erik Brynjolfsson said, “My reading of the data is that technology is the main driver of the recent increases in inequality. It’s the biggest factor.”

This raises the question: what happens as automation and AI technologies become more advanced and capable?

Artificial intelligence can generate great value by providing services and creating products more efficiently than ever before. But many fear this will lead to an even greater disparity between the wealthy and the rest of the world.

AI expert Yoshua Bengio suggests that equality and ensuring a shared benefit from AI could be pivotal in the development of safe artificial intelligence. Bengio, a professor at the University of Montreal, explains, “In a society where there’s a lot of violence, a lot of inequality, [then] the risk of misusing AI or having people use it irresponsibly in general is much greater. Making AI beneficial for all is very central to the safety question.”

In fact, when speaking with many AI experts across academia and industry, the consensus was unanimous: the development of AI cannot benefit only the few.

Broad Agreement

“It’s almost a moral principle that we should share benefits among more people in society,” argued Bart Selman, a professor at Cornell University. “I think it’s now down to eight people who have as much as half of humanity. These are incredible numbers, and of course if you look at that list it’s often technology pioneers that own that half. So we have to go into a mode where we are first educating the people about what’s causing this inequality and acknowledging that technology is part of that cost, and then society has to decide how to proceed.”

Guruduth Banavar, Vice President of IBM Research, agreed with the Shared Prosperity Principle, but said, “It needs rephrasing. This is broader than AI work. Any AI prosperity should be available for the broad population. Everyone should benefit and everyone should find their lives changed for the better. This should apply to all technology – nanotechnology, biotech – it should all help to make life better. But I’d write it as ‘prosperity created by AI should be available as an opportunity to the broadest population.’”

Francesca Rossi, a research scientist at IBM, added, “I think very important. And it also ties in with the general effort and commitment by IBM to work a lot on education and re-skilling people to be able to engage with the new technologies in the best way. In that way people will be more able to take advantage of all the potential benefits of AI technology. That also ties in with the impact of AI on the job market and all the other things that are being discussed. And they are very dear to IBM as well, in really helping people to benefit the most out of the AI technology and all the applications.”

Meanwhile, Stanford’s Stefano Ermon believes that research could help ensure greater equality. “It’s very important that we make sure that AI is really for everybody’s benefit,” he explained, “that it’s not just going to be benefitting a small fraction of the world’s population, or just a few large corporations. And I think there is a lot that can be done by AI researchers just by working on very concrete research problems where AI can have a huge impact. I’d really like to see more of that research work done.”

A Big Challenge

“AI is having incredible successes and becoming widely deployed. But this success also leads to a big challenge,” said Dan Weld, a professor at the University of Washington, “its impending potential to increase productivity to the point where many people may lose their jobs. As a result, AI is likely to dramatically increase income disparity, perhaps more so than other technologies that have come about recently. If a significant percentage of the populace loses employment, that’s going to create severe problems, right? We need to be thinking about ways to cope with these issues, very seriously and soon.”

Berkeley professor Anca Dragan summed up the problem when she asked, “If all the resources are automated, then who actually controls the automation? Is it everyone or is it a few select people?”

“I’m really concerned about AI worsening the effects and concentration of power and wealth that we’ve seen in the last 30 years,” Bengio added.

“It’s a real fundamental problem facing our society today, which is the increasing inequality and the fact that prosperity is not being shared around,” explained Toby Walsh, a professor at UNSW Australia.

“This is fracturing our societies and we see this in many places, in Brexit, in Trump,” Walsh continued. “A lot of dissatisfaction within our societies. So it’s something that we really have to fundamentally address. But again, this doesn’t seem to me something that’s really particular to AI. I think really you could say this about most technologies. … although AI is going to amplify some of these increasing inequalities. If it takes away people’s jobs and only leaves wealth in the hands of those people owning the robots, then that’s going to exacerbate some trends that are already happening.”

Kay Firth-Butterfield, the Executive Director of AI-Austin.org, also worries that AI could exacerbate an already tricky situation. “AI is a technology with such great capacity to benefit all of humanity,” she said, “but also the chance of simply exacerbating the divides between the developed and developing world, and the haves and have nots in our society. To my mind that is unacceptable and so we need to ensure, as Elon Musk said, that AI is truly democratic and its benefits are available to all.”

“Given that all the jobs (physical and mental) will be gone, [shared prosperity] is the only chance we have to be provided for,” added University of Louisville professor Roman Yampolskiy.

What Do You Think?

Given current tech trends, is it reasonable to assume that AI will exacerbate today’s inequality issues? Will this lead to increased AI safety risks? How can we change the societal mindset that currently discourages a greater sharing of wealth? Or is that even a change we should consider?

This article is part of a weekly series on the 23 Asilomar AI Principles.

The Principles offer a framework to help artificial intelligence benefit as many people as possible. But, as AI expert Toby Walsh said of the Principles, “Of course, it’s just a start. … a work in progress.” The Principles represent the beginning of a conversation, and now we need to follow up with broad discussion about each individual principle. You can read the weekly discussions about previous principles here.

Related content

Other posts about AI, AI Safety Principles, Recent News

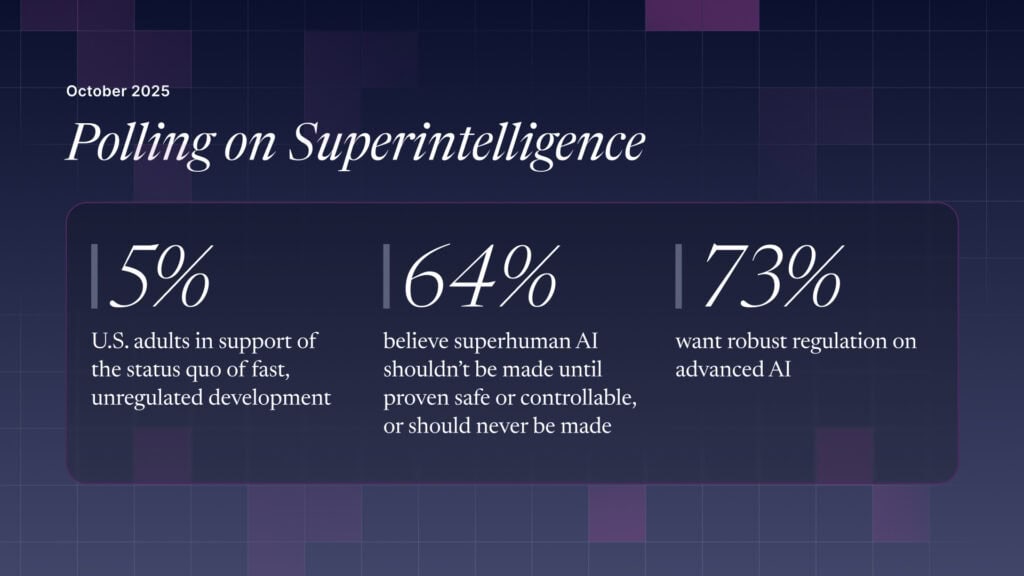

The U.S. Public Wants Regulation (or Prohibition) of Expert‑Level and Superhuman AI