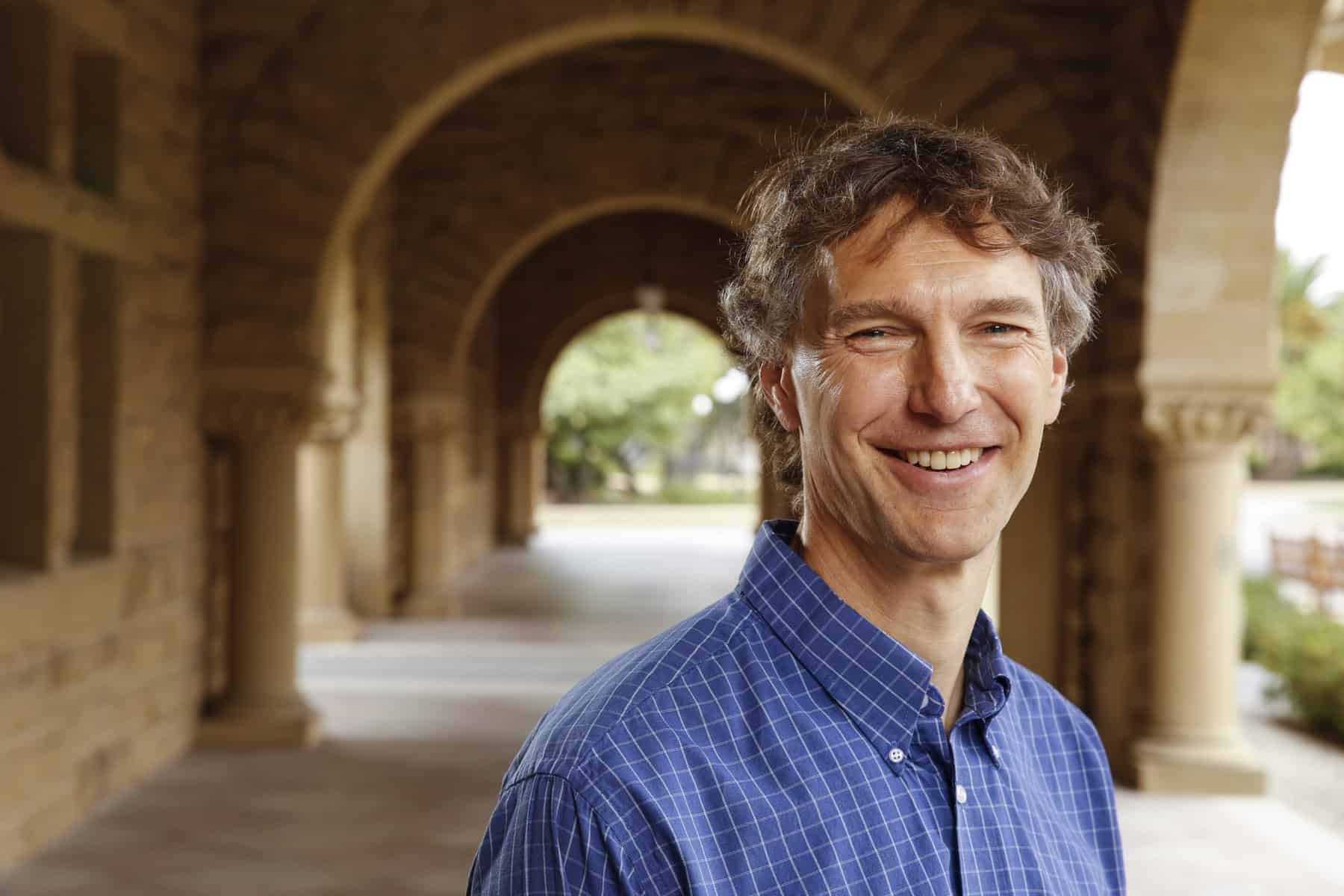

AI Researcher Alex Aiken

Contents

AI Safety Research

Alex Aiken

Alcatel-Lucent Professor, Tencent Chair of Computer Science

Stanford University

Project Handled: Verifying Deep Mathematical Properties of AI Systems

Amount Recommended: $100,813

Project Summary

Artificial Intelligence (AI) is a broad and open-ended research area, and the risks that AI systems will pose in the future are extremely hard to characterize. However, it seems likely that any AI system will involve substantial software complexity, will depend on advanced mathematics in both its implementation and justification, and will be naturally flexible and seem to degrade gracefully in the presence of many types of implementation errors. Thus we face a fundamental challenge in developing trustworthy AI: how can we build and maintain complex software systems that require advanced mathematics in order to implement and understand, and which are all but impossible to verify empirically? We believe that it will be possible and desirable to formally state and prove that the desired mathematical properties hold with respect to the underlying programs, and to maintain such proofs as part of the software artifacts themselves. We propose to demonstrate the feasibility of this methodology by building a system that takes beliefs about the world in the form of probabilistic models, synthesizes inference algorithms to update those beliefs in the presence of observations, and provides formal proofs that the inference algorithms are correct with respect to the laws of probability.

Technical Abstract

It seems likely that any AI system will involve substantial software complexity, will depend on advanced mathematics in both its implementation and justification, and will be naturally flexible and seem to degrade gracefully in the presence of many types of implementation errors. Thus we face a fundamental challenge in developing trustworthy AI: how can we build and maintain complex software systems that require advanced mathematics in order to implement and understand, and which are all but impossible to verify empirically? We believe that it will be possible and desirable to formally state and prove that the desired mathematical properties hold with respect to the underlying programs, and to maintain and evolve such proofs as part of the software artifacts themselves. We propose to demonstrate the feasibility of this methodology by implementing several different certified inference algorithms for probabilistic graphical models, including the Junction Tree algorithm, Gibbs sampling, Mean Field, and Loopy Belief Propagation. Each such algorithm has a very different notion of correctness that involves a different area of mathematics. We will develop a library of the relevant formal mathematics, and then for each inference algorithm, we will formally state its specification and prove that our implementation satisfies it.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014. Find out more about our mission or explore our work.

Related content

Other posts about AI Researcher Profile

AI Researcher Jacob Steinhardt

AI Researcher Bas Steunebrink

AI Researcher Moshe Vardi