The White House Considers the Future of AI


Artificial intelligence may be on the verge of changing the world forever. In many ways, even the automation and computing technologies that preceded AI have already fundamentally changed how we interact, how we do our jobs, how we enjoy our free time, and even how we fight our wars. In the near future, we can expect self-driving cars, automated medical diagnoses, and AI programs predicting who will commit a crime. But our current federal system is woefully unprepared to deal with both the benefits and the challenges these advances will bring.

To address these concerns, the White House formed a new Subcommittee on Machine Learning and Artificial Intelligence, which will monitor advances and milestones in AI development for the National Science and Technology Council. The subcommittee began its work with two conferences on the benefits and risks of AI.

The first conference addressed the legal and governance issues we’ll likely face in the near term, while the second looked at how AI can be used for social good.

While many of the speakers, especially at the first conference, emphasized the need to focus on short-term concerns rather than long-term concerns about artificial general intelligence (AGI), the issues they discussed are also among those we'll need to address to ensure that AGI is beneficial.

For example, Oren Etzioni kicked off the conferences by arguing that we have ample time to address longer-term concerns about AGI and should therefore focus our current efforts on issues like jobs and privacy. But in response to a question from the audience, he expressed a more nuanced view: “As software systems become more complex … we will see more unexpected behavior … The software that we’ve been increasingly relying on will behave unexpectedly.” He also pointed out that we need to figure out how to deal with people who will do bad things with good AI systems, not just worry about AI that goes bad.

This viewpoint set the tone for the rest of the first conference.

Kate Crawford talked about the need for accountability and considered how difficult transparency can be for programs that essentially act as black boxes. Almost all of the speakers expressed concern about maintaining privacy, and Pedro Domingos argued that privacy is ultimately a question of control:

“Who controls data about me?”

Another concern shared by all the researchers was the misuse of data and the potential for bad actors to misuse AI intentionally. Bryant Walker Smith wondered who would decide when an AI system was safe enough to be released to the general public, and many of the speakers asked how we should deal with an AI system that doesn’t behave as intended or that learns bad behavior from its new owners. Domingos noted that learning systems are fallible and often fail in ways that differ from how people fail, which makes it even harder to predict how an AI system will behave outside the lab.

Kay Firth-Butterfield, Chief Officer of the Ethics Advisory Panel for Lucid, attended the conference and, in an email to FLI, gave an example of how the research presented at these conferences can help us prepare as we move toward AGI. She said:

“I think that focus on the short-term benefits and concerns around AI can help to inform the work which needs to be done for understanding our interaction as humans with AGI. One of the short-term issues is transparency of decision making by Machine Learning AI. This is a short-term concern because it affects citizens’ rights if used by the government to assist decision making, see Danielle Citron’s excellent work in this area. Finding a solution to this issue now paves the way for greater clarity of systems in the future.”

While the second conference focused on some of the exciting applied research now happening with AI and big data, the speakers also revisited some of the concerns from the first conference and raised some new issues.

Bias was a big concern: White House representatives Roy Austin and Lynn Overmann both described the challenges of using inadvertently biased AI programs to address crime, police brutality, and problems in the criminal justice system. Privacy was another issue that came up frequently, especially as the speakers talked about improving the health system, mining social media data, and using citizen science. Speakers also raised the difficulty of simply predicting where AI will take us, and therefore where to invest time and resources.

But on the whole, the second conference was very optimistic, offering a taste of how AI can move us toward existential hope.

For example, traffic congestion is estimated to cost the US $121 billion per year in lost time and fuel, while releasing 56 billion pounds of CO2 into the atmosphere. Stephen Smith is developing traffic-signal systems that anticipate traffic rather than react to it, saving people time and money.

Tom Dietterich discussed two projects he’s working on. TAHMO (the Trans-African Hydro-Meteorological Observatory) aims to better understand weather patterns in Africa, which will, among other things, improve farming across the continent. He’s also using data from volunteers to track bird migration, which can amount to thousands of data points per day. That data can then be used to help coastal birds whose habitats will be destroyed as sea levels rise.

Milind Tambe created algorithms based on game theory to improve security at airports and shipping ports, and now he’s using similar programs to help stop poaching in places like Uganda and Malaysia.

Tanya Berger-Wolf is using crowdsourcing for conservation. Her project relies on photos uploaded by thousands of tourists to track individual animals and herds, to better understand their behavior and whether the animals are at risk. The AI programs she uses can identify specific animals in the uploaded images based solely on small variations in the patterns of their skin and fur.

Erik Elster explained that each of us will likely be misdiagnosed at least once, because doctors still rely on visual diagnosis. He’s applying machine learning to make better use of big data in medical science and practice, with the aim of improving diagnosis and treatment.

Henry Kautz used social media data to predict who would get sick before they showed any signs of the flu or a cold.

Eric Horvitz discussed ten fields that could see incredible benefits from AI advancements, from medicine to agriculture to education to overall wellbeing.

Yet even while highlighting all the amazing ways AI can improve our lives, these projects also shed light on problems that might be minor nuisances in the short term but could become severe if we don’t solve them before strong AI is developed.

Kautz talked about how hard it is to obtain accurate information, given how reluctant people are to share their health data with strangers. Smith mentioned that during an early iteration of the traffic-light project, his group forgot to take pedestrians into account. These are minor issues now, but overlooking a major component, like pedestrians, could become a much larger problem as AI advances and lives are potentially on the line.

In an email, Dietterich summed up the hopes and concerns of the second conference:

“At the AI for Social Good meeting, several people reported important ways that AI could be applied to address important problems. However, people also raised the concern that naively applying machine learning algorithms to biased data will lead to biased outcomes. One example is that volunteer bird watchers only go where they expect to find birds and police only patrol where they expect to find criminals. This means that their data are biased. Fortunately, in machine learning we have developed algorithms for explicitly modeling the bias and then correcting for it. When the bias is extreme, nothing can be done, but when there is enough variation in the data, we can remove the bias and still produce useful results.”

Up to a point, artificial intelligence should be able to self-correct. But we need to recognize and communicate its limitations just as much as we appreciate its abilities.
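Dietterich did not say which algorithms he had in mind. One textbook technique for this kind of correction is inverse probability weighting: each observation is weighted by the inverse of its probability of being collected, so places that volunteers (or patrols) rarely visit count for more. The sketch below is only an illustration of that general idea, not the method used in Dietterich's projects. It assumes the sampling probabilities are already known, and the sites, bird counts, and probabilities are all hypothetical.

```python
# A minimal sketch of inverse probability weighting (IPW), one textbook way to
# correct for sampling bias when the chance of each observation being recorded
# is known or can be estimated. All sites, counts, and probabilities are
# hypothetical.


def naive_mean(observations):
    """Average the observed values, ignoring how they were sampled."""
    return sum(value for value, _ in observations) / len(observations)


def ipw_mean(observations):
    """Estimate the population mean by weighting each observation by 1 / p.

    observations: list of (value, p) pairs, where p is the assumed probability
    that a site like this one gets visited and recorded at all.
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for value, p in observations:
        if p <= 0:
            # Guard against division by zero. Sites with no chance of being
            # visited never appear in the data, so no weight can stand in for
            # them: this is the "extreme bias" case.
            raise ValueError("every site needs a nonzero chance of being sampled")
        weight = 1.0 / p  # rarely visited sites count for more
        weighted_sum += weight * value
        total_weight += weight
    return weighted_sum / total_weight


# Hypothetical survey: 10 popular sites (20 birds each) and 10 remote sites
# (5 birds each), so the true mean is 12.5 birds per site. Volunteers visit
# popular sites with probability 0.8 and remote ones with probability 0.2,
# so a typical season records 8 popular sites and 2 remote ones.
observations = [(20, 0.8)] * 8 + [(5, 0.2)] * 2

print(naive_mean(observations))  # 17.0, inflated by where volunteers go
print(ipw_mean(observations))    # 12.5, matching the true mean
```

The correction only works because the remote sites still appear in the data at all; if volunteers never visited them, their probability would be zero and no reweighting could recover them, which mirrors Dietterich's point that extreme bias cannot be undone. In practice the sampling probabilities are rarely known and would themselves have to be modeled.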

Hanna Wallach also discussed her research on the problems that arise because computer scientists and social scientists so often talk past each other. It’s especially important for computer scientists to collaborate with social scientists in order to understand the implications of their models. She explained, “When data points are human, error analysis takes on a new level of importance.”

Overmann ended the day by noting that, just as her team needs AI researchers, AI researchers need the information her group has gathered. AI programs naturally perform better when they are built with better data.

This last point captures a theme that ran through all of the issues raised about AI. Though never stated explicitly, an underlying message of the conferences was that AI research can’t happen in a bubble: it must take into account the complexities and problems of the real world, and society as a whole must consider how we will incorporate AI into our lives.

In her email to FLI, Firth-Butterfield also added:

“I think that it is important to ask the public for their opinion because it is they who will be affected by the growth of AI. It is essential to beneficial and successful AI development to be cognizant of public opinion and respectful of issues such as personal privacy.”

The administration is only halfway through the conference series, so it will be looking at all of this and more as it determines where to focus its support for artificial intelligence research and development.

The next conference, Safety and Control of Artificial Intelligence, will be held on June 28 in Pittsburgh.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014.

Related content

Other posts about AI, Recent News

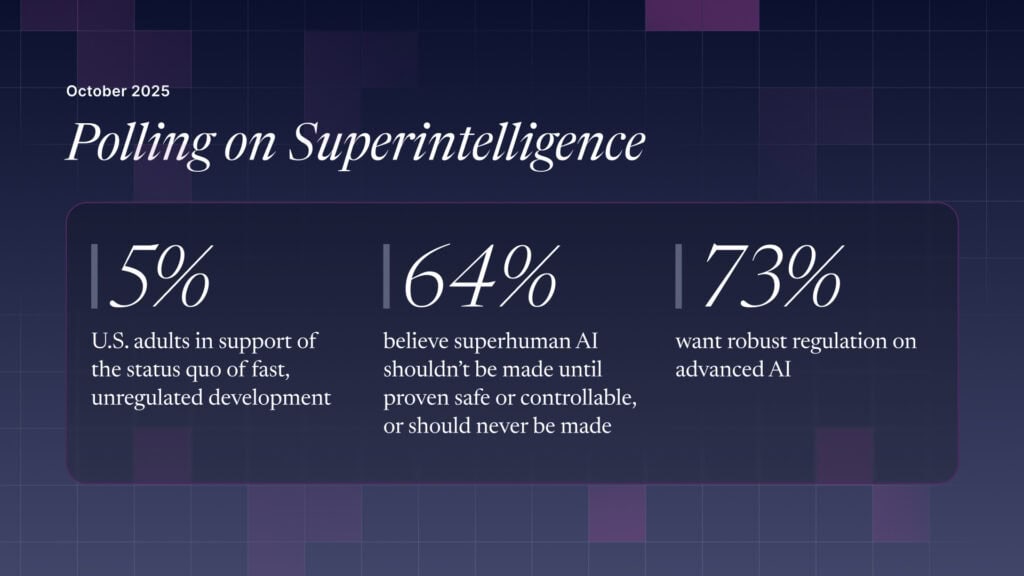

The U.S. Public Wants Regulation (or Prohibition) of Expert‑Level and Superhuman AI