The AI Wars: The Battle of the Human Minds to Keep Artificial Intelligence Safe

For all the media fearmongering about the future rise of artificial intelligence and the potential for malevolent machines, one battle of the AI war has already begun. But this one is being waged by some of the most impressive minds within the realm of human intelligence today.

At the start of 2015, few AI researchers were worried about AI safety, but that all changed quickly. Throughout the year, Nick Bostrom’s book, Superintelligence: Paths, Dangers, Strategies, grew increasingly popular. The Future of Life Institute held its AI safety conference in Puerto Rico. Two open letters regarding artificial intelligence and autonomous weapons were released. Countless articles came out, quoting AI concerns from the likes of Elon Musk, Stephen Hawking, Bill Gates, Steve Wozniak, and other luminaries of science and technology. Musk donated $10 million in funding to AI safety research through FLI. Fifteen million dollars was granted for the creation of the Leverhulme Centre for the Future of Intelligence. And most recently, the nonprofit AI research company, OpenAI, was launched to the tune of $1 billion, which will allow some of the top minds in the AI field to address safety-related problems as they come up.

In all, it’s been a big year for AI safety research. Many in science and industry have joined the AI-safety-research-is-needed camp, but there are still some stragglers of equally staggering intellect. So just what does the debate still entail?

OpenAI was the big news of the past week, and its launch coincided (probably not coincidentally) with the Neural Information Processing Systems conference, which attracts some of the best-of-the-best in machine learning. Among the attractions at the conference was the symposium, Algorithms Among Us: The Societal Impacts of Machine Learning, where some of the most influential people in AI research and industry debated their thoughts and concerns about the future of artificial intelligence.

From session 2 of the Algorithms Among Us symposium: Murray Shanahan, Shane Legg, Andrew Ng, Yann LeCun, Tom Dietterich, and Gary Marcus

What is AGI and should we be worried about it?

Artificial general intelligence (AGI) is the term given to artificial intelligence that would be, in some sense, equivalent to human intelligence. It wouldn’t solve just a narrow, specific task, as AI does today, but would instead solve a variety of problems and perform a variety of tasks, with or without being programmed to do so. That said, it’s not the most well-defined term. As Yann LeCun, the director of Facebook’s AI research group, stated, “I don’t want to talk about human-level intelligence because I don’t know what that means really.”

If defining AGI is difficult, predicting whether or when it will exist is nearly impossible. Some of the speakers, like LeCun and Andrew Ng, didn’t want to waste time considering the possibility of AGI since they consider it to be so distant. Both referenced the likelihood of another AI winter, in which, after all this progress, scientists will hit a research wall that will take some unknown number of years or decades to overcome. Ng, a Stanford professor and Chief Scientist of Baidu, compared concerns about the future of human-level AI to far-fetched worries about the difficulties surrounding travel to the star system Alpha Centauri.

LeCun pointed out that we don’t really know what a superintelligent AI would look like. “Will AI look like human intelligence? I think not. Not at all,” he said. He then went on to explain why human intelligence isn’t nearly as general as we like to believe. “We’re driven by basic instincts […] They (AI) won’t have the drives that make humans do bad things to each other.” He added that he could think of no reason to build preservation instincts or curiosity into machines.

However, many of the participants disagreed with LeCun and Ng, emphasizing the need to be prepared in advance of problems, rather than trying to deal with them as they arise.

Shane Legg, co-founder of Google’s DeepMind, argued that the benefit of starting safety research now is that it will help us develop a framework that will allow researchers to move in a positive direction toward the development of smarter AI. “In terms of AI safety, I think it’s both overblown and underemphasized,” he said, commenting on how profound – both positively and negatively – the societal impact of advanced AI could be. “If we are approaching a transition of this magnitude, I think it’s only responsible that we start to consider, to whatever extent that we can in advance, the technical aspects and the societal aspects and the legal aspects and whatever else […] Being prepared ahead of time is better than trying to be prepared after you already need some good answers.”

Gary Marcus, Director of the NYU Center for Language and Music, added, “In terms of being prepared, we don’t just need to prepare for AGI, we need to prepare for better AI […] Already, issues of security and risk have come forth.”

Even Ng agreed that AI safety research certainly wasn’t a bad thing, saying, “I’m actually glad there are other parts of society studying ethical parts of AI. I think this is a great thing to do.” Though he also admitted it wasn’t something he wanted to spend his own time on.

It’s the economy…

Among all of the AI issues debated by researchers, the one agreed upon by almost everyone who took the stage at the symposium was the detrimental impact AI could have on the job market. Erik Brynjolfsson, co-author of The Second Machine Age: Work, Progress, and Prosperity in a Time of Brilliant Technologies, set the tone for the discussion with his presentation, which highlighted some of the effects that artificial intelligence will have on the economy. He explained that we’re in the midst of incredible technological advances, which could be highly beneficial, but our skills, organizations and institutions aren’t keeping up. Because of the huge gap in pace, business as usual won’t work.

As unconcerned about the future of AGI as Ng was, he quickly became the strongest advocate for tackling the economics issue that will pop up in the near future. “I think the biggest challenge is the challenge of unemployment,” Ng said.

The issue of unemployment is one that is already starting to appear, even with the very narrow AI that exists today. Around the world, low- and middle-skilled workers are getting displaced by robots or software, and that trend is expected to continue at rapid rates.

LeCun argued that the world overcame the massive job loss that resulted from the new technologies associated with the steam engine too, but both Brynjolfsson and Ng disagreed with that argument, citing the much more rapid pace of technology today. “Technology has always been destroying jobs, and it’s always been creating jobs,” Brynjolfsson admitted, but he also explained how difficult it is to predict which technologies will impact us the most and when they’ll kick in. The current exponential rate of technological progress is unlike anything we’ve ever experienced before in history.

Bostrom mentioned that the rise of thinking machines will be more analogous to the rise of the human species than to the steam engine or the industrial revolution. He reminded the audience that if a superintelligent AI is developed, it will be the last invention we ever have to make.

A big concern with the economy is that the job market is changing so quickly that most people can’t develop new skills fast enough to keep up. The possibility of a basic income and paying people to go back to school were both mentioned. However, the psychological toll of being unemployed is one that can’t be overcome even with a basic income, and the effect that mass unemployment might have on people drew concern from the panelists.

Bostrom became an unexpected voice of optimism, pointing out that there have always been groups who were unemployed, such as aristocrats, children and retirees. Each of these groups managed to enjoy their unemployed time by filling it with hobbies and other activities.

However, solutions like basic income and leisure time will only work if political leaders begin to take the initiative soon to address the unemployment issues that near-future artificial intelligence will trigger.

From session 2 of the Algorithms Among Us symposium: Michael Osborne, Finale Doshi-Velez, Neil Lawrence, Cynthia Dwork, Tom Dietterich, Erik Brynjolfsson, and Ian Kerr

Closing arguments

Ideally, technology is just a tool that is not inherently good or bad. Whether it helps humanity or hurts us should depend on how we use the tool. But if AI develops the capacity to think, this argument no longer quite holds. At that point, the AI isn’t a person, but it isn’t just an instrument either.

Ian Kerr, the Research Chair of Ethics, Law, and Technology at the University of Ottawa, spoke early in the symposium about the legal ramifications (or lack thereof) of artificial intelligence. The overarching question for an AI gone wrong is: who’s to blame? Who will be held responsible when something goes wrong? Or, on the flip side, who is to blame if a human chooses to ignore the advice of an AI that’s had inconsistent results, but which later turns out to have been the correct advice?

If anything, one of the most impressive results from this debate was how often the participants agreed with each other. At the start of the year, few AI researchers were worried about safety. Now, though many still aren’t worried, most acknowledge that we’re all better off if we consider safety and other issues sooner rather than later. The greatest disagreement was over when we should start working on AI safety, not whether we should. The panelists also all agreed that regardless of how smart AI might become, it will happen incrementally, rather than as the “event” that is implied in so many media stories. We already have machines that are smarter and better at some tasks than humans, and that trend will continue.

For now, as Harvard Professor Finale Doshi-Velez pointed out, we can control what we get out of the machine: if we don’t like or understand the results, we can reprogram it.

But how much longer will that be a viable solution?

Coming soon…

The article above highlights some of the discussion that occurred between AI researchers about whether or not we need to focus on AI safety research. Because so many AI researchers do support safety research, there was also much more discussion during the symposium about which areas pose the most risk and have the most potential. We’ll be starting a new series in the new year that goes into greater detail about different fields of study that AI researchers are most worried about and most excited about.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014.

Related content

Other posts about AI, Recent News

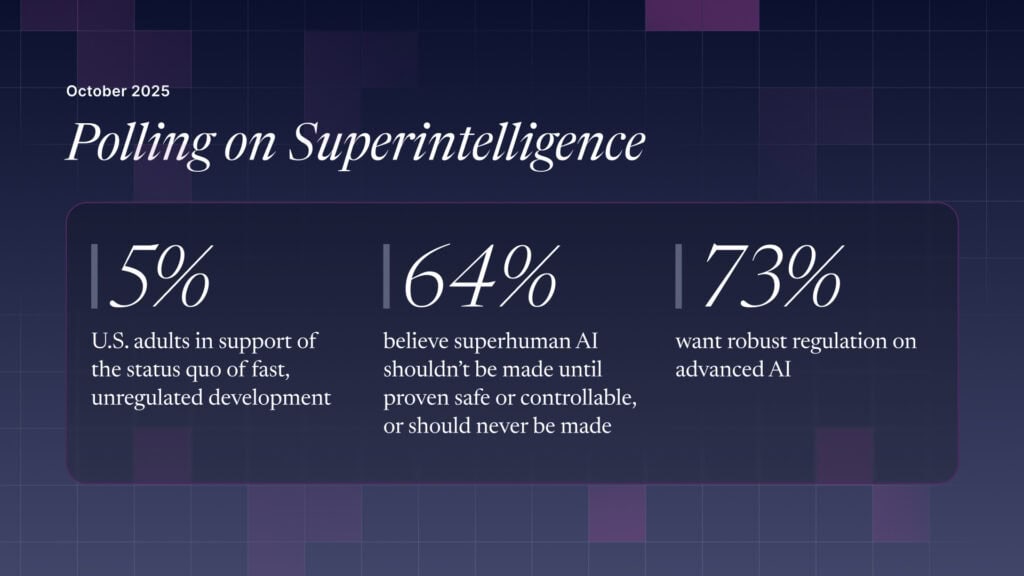

The U.S. Public Wants Regulation (or Prohibition) of Expert‑Level and Superhuman AI